
Introducing actions with Palmyra X4

Setting the industry standard for LLM tool calling

Building the infrastructure for enterprise AI to work well with an entire ecosystem of tools, databases, and systems is not only arduous but also prone to error and inefficiency, making it nearly impossible to scale. Without a streamlined way for an LLM to interact with other systems, innovation slows down.

The release of our newest top-ranking model, Palmyra X4, addresses this problem, setting the industry standard for what AI apps can do with new tool calling capabilities (also known as function calling).

Palmyra X4 boasts state-of-the-art reasoning through novel training techniques. By leveraging synthetic data, we’ve trained our model more efficiently and at a fraction of the cost reported by major AI labs. Palmyra X4’s suite of new features and capabilities includes:

- Taking action in systems external to the LLM via tool calling

- Automatic data integration with a built-in RAG tool

- Code generation

- A 128k context window

- Structured output generation for simpler system integration (coming in a few weeks)

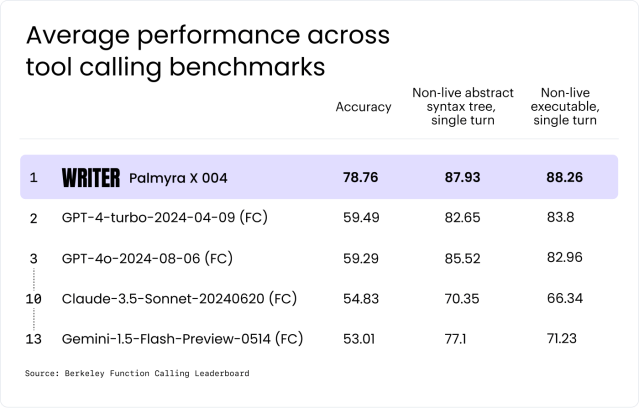

Early results on tool calling benchmarks place our new frontier model at the top of the Berkeley Function Calling Leaderboard by a significant margin, ahead of models from OpenAI, Anthropic, Meta, and Google. Palmyra X4 also ranks among the top models on Stanford HELM.

Palmyra X4 is available today on AI Studio and Ask Writer, our prebuilt chat experience. You can also use apps powered by Palmyra X4 in Slack via our new Slack integration.

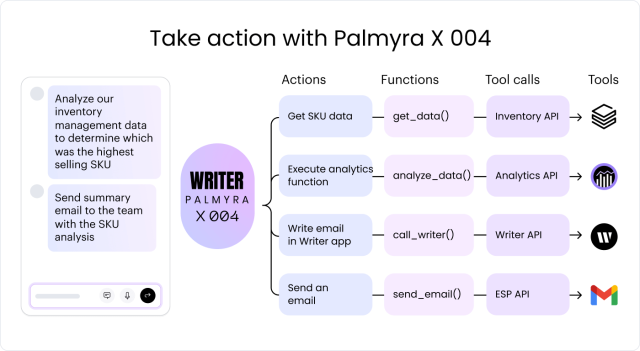

The power of tool calling on a full-stack platform

Taking action via tool calling enables AI apps to do more work on behalf of a developer with increased capabilities and less repetitive code. Palmyra X4 is also unique in the expanded variety of tools it can call. Unlike the platform design of other model providers, in-house builds, and AI companies, our full-stack design and low-code development tools in AI Studio let you build apps with Palmyra X4 that use built-in RAG and call other no-code WRITER apps.

Tool calling works by providing the model with a set of tools, like third-party services, algorithms, or RAG, that the LLM can use to extend its built-in knowledge. This could mean fetching real-time information, like stock market data, but it can also include taking action in your systems, like sending an email, initiating a prebuilt workflow, or updating a database. Palmyra X4 determines when a function needs to be called and interacts with the user to make sure they supply all the necessary fields for running the tool.
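As a rough illustration of the application side of this loop, the sketch below keeps a registry of tools, checks a proposed call against the tool's required fields, and either runs the tool or reports which fields are missing so the app can ask the user for them. The registry, the `check_and_run` helper, and the `get_stock_price` stub are all hypothetical; in practice the model chooses the tool and typically asks for missing fields itself.

```python
from typing import Any, Callable


def get_stock_price(ticker: str) -> dict[str, Any]:
    # Hypothetical stand-in for a real-time market data service.
    prices = {"ACME": 123.45}
    return {"ticker": ticker, "price": prices.get(ticker)}


# Each entry pairs a callable with the names of the fields it requires.
TOOLS: dict[str, tuple[Callable[..., Any], list[str]]] = {
    "get_stock_price": (get_stock_price, ["ticker"]),
}


def check_and_run(name: str, args: dict[str, Any]) -> dict[str, Any]:
    """Run a tool if every required field is present; otherwise list what's missing."""
    if name not in TOOLS:
        return {"status": "error", "message": f"unknown tool: {name}"}
    func, required = TOOLS[name]
    missing = [field for field in required if field not in args]
    if missing:
        # The app (or the model) would ask the user for these fields and retry.
        return {"status": "needs_input", "missing": missing}
    return {"status": "ok", "result": func(**args)}


print(check_and_run("get_stock_price", {}))
print(check_and_run("get_stock_price", {"ticker": "ACME"}))
```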

Just like other components of our stack, we’ve integrated tool calling with core WRITER features, providing you with powerful out-of-the-box capabilities that no other platform offers. These include:

- Built-in graph-based RAG: Palmyra X4 comes with a predefined, self-executing RAG tool, enabling it to pull real-time data from your company’s Knowledge Graph without custom development. It also shows its chain of thought and cites sources behind outputs, making each tool call more context-aware and explainable. Learn more in the docs.

- The WRITER applications endpoint: Palmyra X4 can trigger no-code WRITER apps as tools. This lets a single AI app execute multi-step workflows, using other WRITER apps as microservices. This level of integration is only possible on a full-stack platform with no-code developer tools, where each layer works in concert. Learn more in the docs.
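The orchestration pattern behind this, where one app delegates steps to others and feeds each step's output into the next, can be sketched in plain Python. The functions below are hypothetical stand-ins for no-code WRITER apps; this is not the applications endpoint API, which Palmyra X4 would reach through tool calls.

```python
from typing import Callable


# Hypothetical stand-ins for no-code apps; each takes text and returns text.
def summarize(text: str) -> str:
    # Toy "summary": keep only the first sentence.
    return text.split(".")[0] + "."


def draft_email(text: str) -> str:
    return f"Hi team,\n\n{text}\n\nBest regards"


def run_workflow(steps: list[Callable[[str], str]], user_input: str) -> str:
    """Run each step in order, passing every output on as the next step's input."""
    result = user_input
    for step in steps:
        result = step(result)
    return result


report = "Sales rose in Q3. Costs were flat. Hiring continues."
print(run_workflow([summarize, draft_email], report))
```

Treating each app as a text-in, text-out step keeps the steps interchangeable, which is what lets them behave like microservices in a larger workflow.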

We’ll dive into both of these WRITER capabilities in our upcoming tool calling series. Stay tuned for guides, sample apps, cookbooks and more.

Building the highest-ranking model for tool calling in the industry

Early results indicate Palmyra X4 is the top-ranked model for tool calling when benchmarked against models on Berkeley’s Function Calling Leaderboard (listing coming soon). Not only is it the top-performing model for tool calling and API selection, ahead of all GPT, Llama, Claude, and Gemini models, it’s also the largest model trained on synthetic data, at a fraction of the cost reported by major AI labs. This cements our commitment to building affordable AI solutions that meet the accuracy and reliability needs of the enterprise.

We believe that larger datasets aren’t a scalable way forward in model training — precision training and architectural innovation are. To do this, we curate and manufacture structured data via a proprietary LLM, Instruct-Adapt-X, which leverages an early stopping mechanism we developed to achieve proficiency with a small percentage of the data used to train other frontier models.
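The details of Instruct-Adapt-X's early stopping mechanism aren't described here. For readers unfamiliar with the general idea, the sketch below shows a conventional patience-based early stopping rule, which halts training once validation loss stops improving. It is a generic textbook technique, not WRITER's proprietary method, and the loss values are made up for illustration.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 2, min_delta: float = 0.0) -> int:
    """Return the index of the epoch at which training would stop.

    Training stops once `patience` consecutive epochs fail to improve on the
    best validation loss by more than `min_delta`. If that never happens,
    training runs through the last epoch.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1


# Illustrative (made-up) validation losses: improvement stalls after epoch 2.
losses = [1.00, 0.80, 0.70, 0.72, 0.71, 0.69]
print(early_stopping_epoch(losses, patience=2))  # → 4
```

Note the trade-off: with `patience=2` the run stops at epoch 4 and never sees the slightly lower loss at epoch 5, saving compute at the cost of a possible small gain.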

Our cost-effective training techniques also make Palmyra X4 the most accurate and affordable model on the market for tool calling and API selection. Berkeley’s benchmarks put LLMs through real-world scenarios to evaluate their ability to select the correct tool, determine which API to call, and successfully execute a function. The result is an accurate, fast, and reliable model that can execute multiple tool calls, in sequence or in parallel, within a single interaction with the user.
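When a model returns several independent tool calls in one turn, the calling app can run them concurrently and hand each result back tagged with its call ID. The sketch below shows that app-side pattern with Python's `concurrent.futures`; the call dictionaries loosely follow the common `id`/`name`/`arguments` shape, and both tool functions are hypothetical stubs.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def get_weather(city: str) -> dict[str, Any]:
    # Hypothetical stub; a real tool would call a weather API.
    return {"city": city, "forecast": "sunny"}


def get_product_info(product_id: int) -> dict[str, Any]:
    # Hypothetical stub; a real tool would call a product API.
    return {"product_id": product_id, "name": "Widget"}


REGISTRY: dict[str, Callable[..., Any]] = {
    "get_weather": get_weather,
    "get_product_info": get_product_info,
}


def run_tool_calls(calls: list[dict[str, str]]) -> list[dict[str, str]]:
    """Execute independent tool calls in parallel, preserving the input order."""

    def run_one(call: dict[str, str]) -> dict[str, str]:
        args = json.loads(call["arguments"])  # arguments arrive as a JSON string
        result = REGISTRY[call["name"]](**args)
        return {"tool_call_id": call["id"], "content": json.dumps(result)}

    with ThreadPoolExecutor() as pool:
        # map() yields results in the same order as `calls`.
        return list(pool.map(run_one, calls))


calls = [
    {"id": "call_1", "name": "get_weather", "arguments": '{"city": "Paris"}'},
    {"id": "call_2", "name": "get_product_info", "arguments": '{"product_id": 12345}'},
]
for message in run_tool_calls(calls):
    print(message)
```

Calls that depend on each other's output (for example, looking up an ID and then fetching its details) should instead run one after another.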

- Top accuracy (acc): Palmyra X4 achieves 78.76% in overall accuracy in identifying and executing the correct tool call, leading the industry by a nearly 20% margin.

- Leading ability to structure a call (AST): Palmyra X4 achieves the highest average performance of 87.93% on correctly planning and organizing tool call(s) before execution, demonstrating its ability to accurately interpret a user input, generate the correct parameters, and sequence the steps for a tool call.

- Leading ability to execute a call (Exec): Palmyra X4 achieves 88.27% on executing tool call(s), the highest of all models evaluated, reflecting its ability to carry out actions efficiently across enterprise systems.
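To make the AST metric more concrete: rather than running the function, an AST-style check compares the structure of the model's proposed call against an expected answer. The sketch below is a deliberately simplified version of that idea (function name, required parameters, and accepted values); it is not the Berkeley leaderboard's actual evaluator, and the example calls are hypothetical.

```python
from typing import Any


def ast_match(
    predicted: dict[str, Any],
    expected_name: str,
    required: list[str],
    allowed_values: dict[str, list[Any]],
) -> bool:
    """Simplified structural check of a tool call, without executing it."""
    if predicted.get("name") != expected_name:
        return False
    args = predicted.get("arguments", {})
    # Every required parameter must be present.
    if any(param not in args for param in required):
        return False
    # No unexpected parameters, and each value must be one of the accepted answers.
    for param, value in args.items():
        if param not in allowed_values or value not in allowed_values[param]:
            return False
    return True


def accuracy(results: list[bool]) -> float:
    """Share of test cases judged correct, as a percentage."""
    return 100.0 * sum(results) / len(results)


cases = [
    {"name": "get_product_info", "arguments": {"product_id": 12345}},
    {"name": "get_product_info", "arguments": {}},
    {"name": "get_weather", "arguments": {"city": "Paris"}},
]
results = [
    ast_match(case, "get_product_info", ["product_id"], {"product_id": [12345]})
    for case in cases
]
print(results)  # → [True, False, False]
print(round(accuracy(results), 2))  # → 33.33
```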

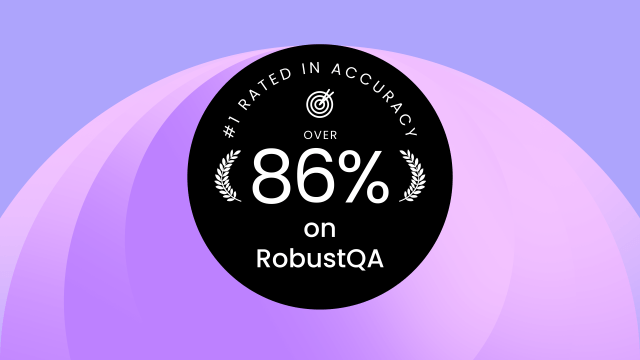

Palmyra X4 also ranks among the top 10 models on both HELM Lite, a holistic framework for evaluating foundation models, and HELM MMLU, which tests understanding across 57 subjects, scoring 86.1% and 81.3% respectively.

A closer look: building tools with functions

Before using tools, you’ll need to define the functions in your application and describe them to the LLM. For example, imagine you have a function get_product_info() that calls your company API to retrieve product data.
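The body of `get_product_info()` depends on your own systems. A minimal stand-in might look like this: the in-memory catalog replaces your company API, and the product name and fields are hypothetical. A real version would make an authenticated request to your service and handle its errors.

```python
from typing import Any

# Hypothetical in-memory catalog standing in for a company product API.
_CATALOG: dict[int, dict[str, Any]] = {
    12345: {"name": "Acme Anvil", "price_usd": 49.99, "in_stock": True},
}


def get_product_info(product_id: int) -> dict[str, Any]:
    """Return product data for `product_id`, or an error payload if it's unknown."""
    product = _CATALOG.get(product_id)
    if product is None:
        # Returning an error object (rather than raising) lets the model
        # read the failure and respond to the user gracefully.
        return {"error": f"no product with id {product_id}"}
    return {"product_id": product_id, **product}


print(get_product_info(12345))
```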

To use this product data retrieval tool and call the get_product_info() function, you’d first describe this function to Palmyra using a JSON schema in what we call a tools array.

```python
# Each entry describes one function the model is allowed to call.
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_product_info",
            "description": "Get information about a product by its id",
            # JSON schema for the function's arguments
            "parameters": {
                "type": "object",
                "properties": {
                    "product_id": {
                        "type": "number",
                        "description": "The unique identifier of the product to retrieve information for",
                    }
                },
                "required": ["product_id"],
            },
        },
    }
]
```

Then, you can pass the tools array to Palmyra for it to use.

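```python
from writerai import Writer

# The client reads your API key from the WRITER_API_KEY environment variable.
client = Writer()

messages = [{"role": "user", "content": "what is the name of the product with id 12345?"}]

# tool_choice="auto" lets the model decide whether a tool call is needed.
response = client.chat.chat(
    model="palmyra-x4", messages=messages, tools=tools, tool_choice="auto"
)
```

Once tools are defined and passed to Palmyra, the LLM acts as a conductor: it processes the user query, determines which function needs to be called, and then generates the arguments to invoke that function.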

Your code then executes the function, appends its result to the chat history, and sends the updated history back to Palmyra so it can write the final response. Palmyra can return that response as plain text or, with the upcoming structured output feature, as JSON that conforms to a schema you provide, which makes it easy to pass the output on to another tool.
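Put together, one round trip looks roughly like the sketch below. To keep it self-contained, the model's reply is a hard-coded dictionary rather than a live API call. Its shape (`tool_calls` entries with an `id` and a `function` holding a `name` and JSON-encoded `arguments`, answered by a `"tool"` role message carrying `tool_call_id`) follows the widely used OpenAI-style convention and is an assumption here, so check the WRITER API reference for the exact fields. `get_product_info` is a hypothetical stub.

```python
import json
from typing import Any


def get_product_info(product_id: int) -> dict[str, Any]:
    # Hypothetical stub for your company's product API.
    return {"product_id": product_id, "name": "Acme Anvil"}


AVAILABLE_FUNCTIONS = {"get_product_info": get_product_info}

messages: list[dict[str, Any]] = [
    {"role": "user", "content": "what is the name of the product with id 12345?"}
]

# Simulated assistant reply requesting a tool call (assumed OpenAI-style shape).
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_abc123",
            "type": "function",
            "function": {"name": "get_product_info", "arguments": '{"product_id": 12345}'},
        }
    ],
}
messages.append(assistant_message)

for tool_call in assistant_message["tool_calls"]:
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    result = AVAILABLE_FUNCTIONS[name](**args)
    # Append the result, linked by ID, so the model can use it in its final answer.
    messages.append(
        {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(result)}
    )

# `messages` would now be sent back to the model for the final, user-facing reply.
print(messages[-1]["content"])
```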

To get hands-on with tool calling, check out our guide with detailed step-by-step instructions.

Reinventing AI app development

In today’s enterprise, developers need to quickly and easily build AI apps that can work in tandem with existing enterprise tools and workflows and can adapt to evolving end user needs.

With the release of Palmyra X4 and its tool calling capabilities on our full-stack platform, we’re not just adding another feature — we’re empowering devs to build, scale, and deploy powerful AI apps that push LLMs into a new era of enterprise AI integration and action.

Explore the full capabilities of Palmyra X4 in Writer AI Studio, and see how you can start building the next generation of AI-powered workflows.