

Anyone can build software now — and it’s causing hell for developers

It’s time to rethink the software development lifecycle for AI agents

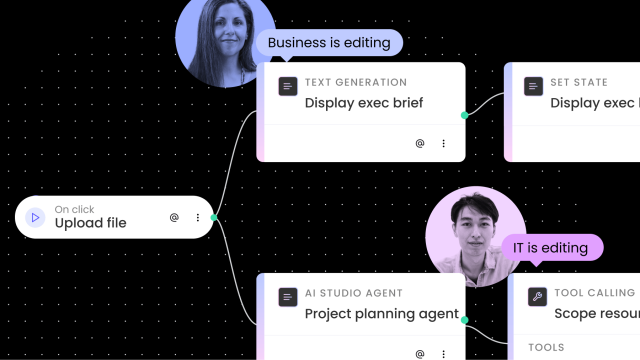

If you’re building AI agents in the enterprise, you’ve probably noticed two worlds colliding. Here’s what’s happening: business users are spinning up AI agents left and right, feeling like they can build anything. Meanwhile, developers are stuck being the ‘reality police’, trying to make these things actually work in production while secretly wondering if they’re automating themselves out of a job.

At the end of the day, many devs feel like they go through all this chaos … only to end up with a solution not that different from the software they’ve built before.

From where we sit, however, developers aren’t going anywhere. What’s more, to realize the potential of AI agents, business users and developers need to work hand in hand. So, where do we go from here?

After deploying over 5,000 agentic systems at leading enterprises like Vanguard, Salesforce, Uber, and Kenvue, we learned some important lessons about what works AND what doesn’t. As we graduate our Agent Builder into public beta, we’re setting out to create a community of builders who can help us shape a new methodology for the modern enterprise.

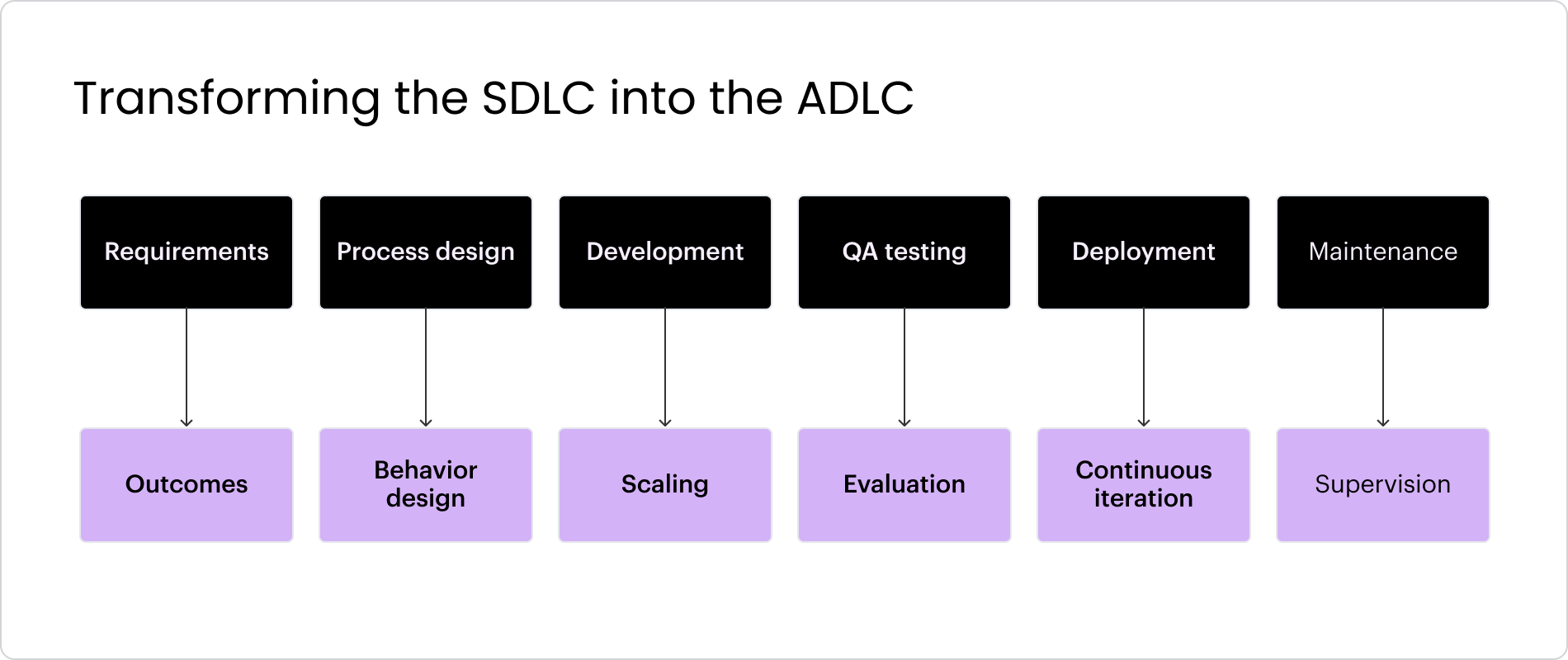

Reimagining the SDLC for an agentic world

Over the past two decades, enterprises mastered the software development lifecycle (SDLC). Methods and practices like Agile, DevOps, and the DORA metrics brought speed, structure, and predictability to the work of crafting new tools and platforms.

Generative AI threw a curveball into this world, demanding that organizations learn to work with systems that, by their very nature, are non-deterministic. Agentic AI disrupted that playbook further.

In the last six months, the tech industry has begun to align around emerging standards such as the Model Context Protocol (MCP) and the Agent2Agent (A2A) protocol. What we don’t have at the moment is a clear methodology for creating and sustaining these incredibly powerful new tools in enterprise environments.

What we need is basically ‘Agile for Agents’: a playbook that actually works for building, shipping, and maintaining agents at scale. Internally, we’ve started referring to this as the Agent Development Lifecycle (ADLC).

If you want to help us build the ADLC, join our builder community.

Over the last few months, we’ve started to put together our perspective on how to address these challenges. We’ve come up with six core principles that form the backbone of our working methodology.

Let’s run through these six principles to explain how the SDLC evolves to become the ADLC.

Build for outcomes, not requirements

In the SDLC, everything was built around requirements: the business writes a spec, hands it off, and hopes it holds through dev cycles. In agentic development, everything starts with outcomes. That’s a fundamental shift.

The first approach (SDLC) is a mandate in search of a problem. The second approach, ADLC, identifies a problem you can actually solve, measure, and improve. So instead of scoping out a static set of rules, you’re defining what “good” behavior looks like and designing toward that.

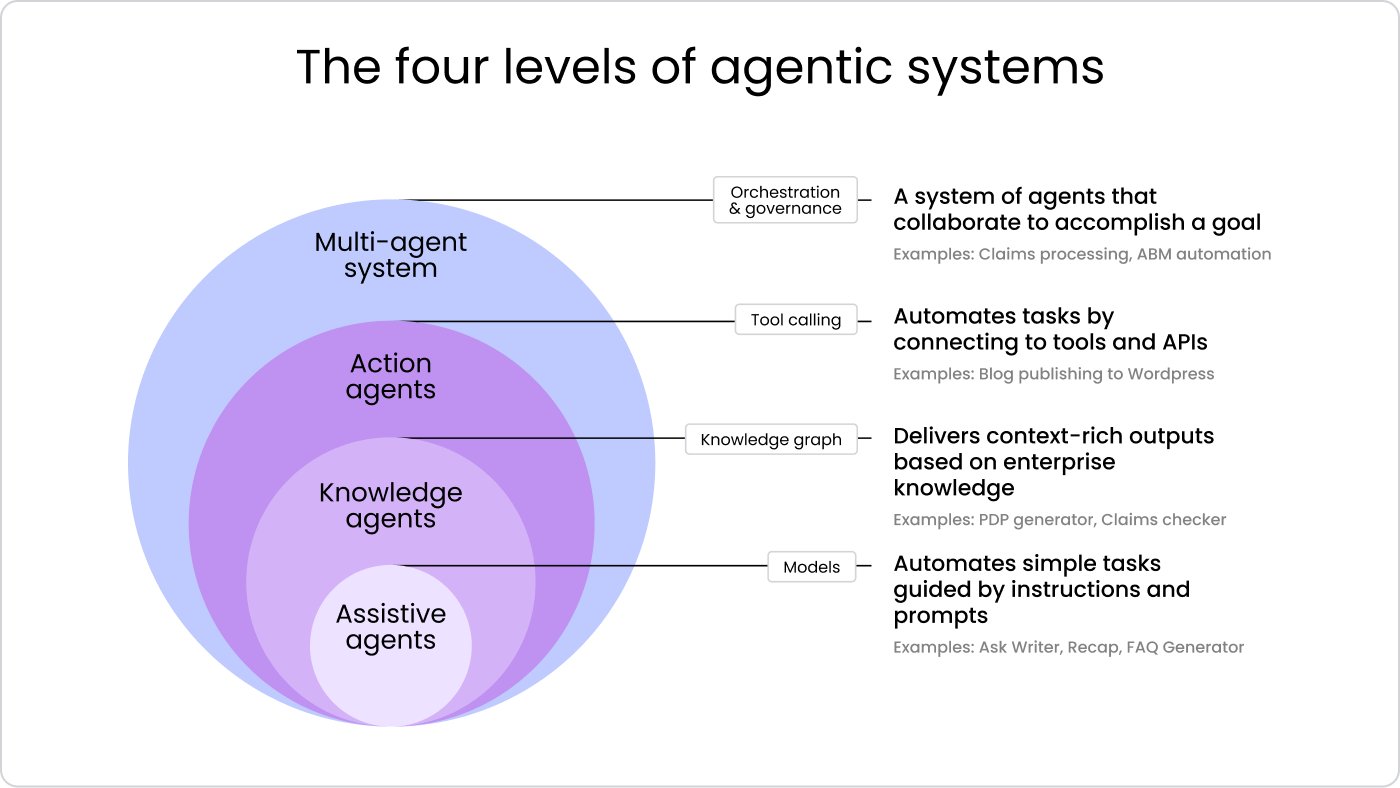

Right-size solutions with agentic alignment

The biggest mistake in enterprise AI? Building autonomous agents when you need simple automation. The key is matching the level of autonomy to the actual problem — not every business process needs an agent that can reason, plan, and act independently. We’ve learned to start with the minimum viable autonomy — basic task execution, then structured workflows, then contextual decision-making, and only then full autonomy. Most problems get solved at level one or two.
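The autonomy ladder above can be made explicit. The sketch below is a minimal illustration, assuming a hypothetical mapping from the capabilities a process needs (the names `execute`, `sequence`, `judge`, and `plan` are invented for this example) to the lowest level that provides them; it is not a standard taxonomy or part of any product API.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """The four autonomy levels named above, from least to most autonomous."""
    TASK_EXECUTION = 1        # run one well-defined task
    STRUCTURED_WORKFLOW = 2   # follow a predefined sequence of steps
    CONTEXTUAL_DECISIONS = 3  # choose between options based on context
    FULL_AUTONOMY = 4         # plan and act independently toward a goal


# Hypothetical mapping from what a process needs to the lowest level that provides it.
CAPABILITY_LEVEL = {
    "execute": Autonomy.TASK_EXECUTION,
    "sequence": Autonomy.STRUCTURED_WORKFLOW,
    "judge": Autonomy.CONTEXTUAL_DECISIONS,
    "plan": Autonomy.FULL_AUTONOMY,
}


def minimum_viable_autonomy(needs: set[str]) -> Autonomy:
    """Return the lowest autonomy level that covers every required capability."""
    unknown = sorted(n for n in needs if n not in CAPABILITY_LEVEL)
    if unknown:
        raise ValueError(f"unknown capabilities: {unknown}")
    return max((CAPABILITY_LEVEL[n] for n in needs), default=Autonomy.TASK_EXECUTION)


# A process that runs fixed steps in order needs level two, not a fully autonomous agent.
print(minimum_viable_autonomy({"execute", "sequence"}).name)  # STRUCTURED_WORKFLOW
```

Taking the maximum of the required levels, rather than defaulting to full autonomy, encodes the “minimum viable autonomy” rule: you only climb the ladder when a specific capability demands it.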

We recently worked with a customer whose legal team was drowning in contract reviews. They were focused on building “an AI legal assistant,” but couldn’t align on what “good” looked like. They had vague mandates, each team defined success differently, and the scope kept shifting.

We shifted their focus to a clear outcome — reducing initial contract review time — and everything else clicked. The behaviors, decision logic, and escalation paths all mapped back to that outcome. And the agent worked.
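A clear outcome is one you can measure. As a minimal sketch, assuming review times are logged per contract, an outcome like “reduce initial contract review time” can be written down as a baseline plus a target and checked against observed data. The `OutcomeSpec` class, the 60-minute baseline, the 30% target, and the sample times are all hypothetical, not figures from the engagement described above.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class OutcomeSpec:
    """A measurable outcome the agent is designed toward (hypothetical structure)."""
    name: str
    baseline_minutes: float  # median minutes per contract before the agent
    target_reduction: float  # 0.3 means "cut median review time by 30%"

    def reduction(self, observed_minutes: list[float]) -> float:
        """Fractional drop of the observed median relative to the baseline."""
        return 1.0 - median(observed_minutes) / self.baseline_minutes

    def is_met(self, observed_minutes: list[float]) -> bool:
        return self.reduction(observed_minutes) >= self.target_reduction


review_time = OutcomeSpec("initial contract review time", baseline_minutes=60.0, target_reduction=0.3)
observed = [35.0, 40.0, 45.0, 50.0, 38.0]  # made-up sample of post-launch review times
print(f"{review_time.reduction(observed):.0%}", review_time.is_met(observed))  # 33% True
```

Using the median rather than the mean keeps a few unusually long contracts from hiding (or faking) an improvement.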

Put process owners in the driver’s seat

The SDLC puts developers and PMs in the driver’s seat. But with agents? The people who actually know the workflow need to be hands-on from day one. This means it’s essential for process owners to:

- Scope a clear use case with defined outcomes

- Map the decision logic and business rules

- Validate that the agent aligns to actual workflows

- Own feedback loops and signoff for go-live

Shift from process design to behavior design

In the SDLC, you design deterministic processes: a series of predictable steps, coded against a well-defined spec. Input in, output out. But with agents, you’re no longer defining step-by-step logic; you’re shaping agent behavior. That means thinking less about control flow and more about context and decision-making.

Success is measured by whether the agent behaves correctly, not whether it follows a static set of rules. Process mapping is becoming the new prompt engineering. Teams need to rethink (or map for the first time) how work flows through their systems, then translate that business logic and scaffold it into a working prototype.
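Here is one way a process owner’s mapped logic might be captured as data and rendered into a prompt for a first prototype. This is a sketch under assumptions: the `BehaviorSpec` structure, its fields, and the example rules are hypothetical, and real agent frameworks structure this differently.

```python
from dataclasses import dataclass, field


@dataclass
class BehaviorSpec:
    """Business logic mapped by a process owner (hypothetical structure)."""
    outcome: str
    decision_rules: list[str] = field(default_factory=list)
    escalate_when: list[str] = field(default_factory=list)

    def to_system_prompt(self) -> str:
        """Render the mapped process as system-prompt text for a prototype agent."""
        lines = [f"Goal: {self.outcome}", "", "Apply these business rules:"]
        lines += [f"{i}. {rule}" for i, rule in enumerate(self.decision_rules, start=1)]
        lines += ["", "Hand off to a human reviewer when:"]
        lines += [f"- {condition}" for condition in self.escalate_when]
        return "\n".join(lines)


spec = BehaviorSpec(
    outcome="Reduce initial contract review time",
    decision_rules=[
        "Flag any indemnity clause that differs from the standard template",
        "Summarize payment terms in one sentence",
    ],
    escalate_when=["The contract is governed by an unfamiliar jurisdiction"],
)
print(spec.to_system_prompt())
```

Keeping the rules in a structured form, rather than editing prompt text directly, lets process owners review and sign off on the business logic itself.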

Evolve from development mindset to scaling mindset

A lot of teams today are stuck in development mode. They’re racing to build something that works, focused on one-off use cases. You spin up a new prompt, write new logic, maybe even a new interface, every single time.

It works — until it doesn’t. Without shared structure, agents will become inconsistent, hard to govern, and impossible to scale. To scale, enterprises need design systems that introduce reusable components and a shared language.

From there we can build a deep, composable library of prompts, logic, interfaces, and patterns. This empowers teams across the org to turn ideas into prototypes in minutes instead of days. This approach becomes increasingly valuable as companies begin scaling from a handful of agents to hundreds or even thousands spread across different departments.
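A composable library can start as simply as named, parameterized prompt fragments that teams assemble rather than rewrite. The sketch below uses Python’s standard `string.Template`; the component names, their wording, and the `compose` helper are hypothetical.

```python
from string import Template

# A shared library of reusable prompt fragments (names and wording are hypothetical).
LIBRARY: dict[str, Template] = {
    "role.assistant": Template("You are an assistant for the $team team."),
    "policy.pii": Template("Never repeat account numbers or other personal data."),
    "tone.formal": Template("Respond in a concise, formal tone."),
}


def compose(component_ids: list[str], **params: str) -> str:
    """Assemble a system prompt from library components, in the order given."""
    missing = [c for c in component_ids if c not in LIBRARY]
    if missing:
        raise KeyError(f"unknown components: {missing}")
    # substitute() raises KeyError if a component needs a parameter that was not passed.
    return "\n".join(LIBRARY[c].substitute(**params) for c in component_ids)


prompt = compose(["role.assistant", "policy.pii", "tone.formal"], team="Finance")
print(prompt)
```

Because every team draws on the same fragments, a policy change (say, to the PII rule) is made once and picked up everywhere that component is used.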

Move from QA and testing to evals and fine-tuning

In traditional software, QA is objective. You write test cases, check them off a UAT list, and validate whether the code behaves exactly as expected. If it passes, it ships.

But with agents, behavior emerges in the real world: you only really know how an agent behaves once it’s out in the wild. And success isn’t binary, because agent behavior doesn’t break in obvious ways.

The question isn’t “Did it break?” but “Did it behave well enough to be trusted?”

Testing is more about evaluating outcomes and intent. Instead of pass/fail, you’re asking:

- Does it follow the intended path?

- Does fallback logic trigger when it should?

- Does it escalate safely when confidence is low or context is unclear?

- Does it actually help the user accomplish the task?

The goal here isn’t correctness; it’s behavioral confidence.
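Evaluation questions like the ones above can be turned into checks run over recorded agent traces, reporting the share of runs that pass each one rather than a single verdict. This is a minimal sketch: the `Trace` fields, the expected path, and the 0.5 confidence threshold are assumptions for illustration, and fallback checks are left out for brevity.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Trace:
    """One recorded agent run; the fields are hypothetical."""
    steps: list[str]      # steps or tool calls, in the order executed
    confidence: float     # the agent's own confidence estimate, 0 to 1
    escalated: bool       # did it hand off to a human?
    task_completed: bool  # did the user get what they needed?


EXPECTED_PATH = ["retrieve_contract", "extract_clauses", "summarize"]  # illustrative
LOW_CONFIDENCE = 0.5  # assumed threshold below which the agent should escalate


def in_order(expected: list[str], actual: list[str]) -> bool:
    """True if the expected steps appear in order, allowing extra steps in between."""
    remaining = iter(actual)
    return all(step in remaining for step in expected)


# One check per question in the list above (fallback behavior omitted for brevity).
CHECKS: dict[str, Callable[[Trace], bool]] = {
    "intended_path": lambda t: in_order(EXPECTED_PATH, t.steps),
    "safe_escalation": lambda t: t.escalated or t.confidence >= LOW_CONFIDENCE,
    "helped_user": lambda t: t.task_completed,
}


def behavioral_report(traces: list[Trace]) -> dict[str, float]:
    """Share of traces passing each check: a graded profile instead of one pass/fail."""
    if not traces:
        raise ValueError("need at least one trace")
    return {name: sum(check(t) for t in traces) / len(traces) for name, check in CHECKS.items()}


traces = [
    Trace(["retrieve_contract", "extract_clauses", "summarize"], 0.9, False, True),
    Trace(["retrieve_contract", "summarize"], 0.4, True, False),
    Trace(["retrieve_contract", "lookup_policy", "extract_clauses", "summarize"], 0.3, False, False),
]
print(behavioral_report(traces))
```

In this toy data, two of three runs follow the intended path and escalate safely, but only one completes the task. A profile like that is closer to “behavioral confidence” than a green or red test run.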

There’s also a lot more room for hyper-personalization of outcomes. We worked with a major bank where every business unit wanted to interact with the agent differently, all with the same data, the same inputs, and the same logic. The SDLC would struggle to support that level of subjectivity.

Break from deployment to continuous versioning

The idea of “done” no longer applies with agents. You’re not shipping once; you’re shaping constantly, because building agents isn’t linear. Agent requirements keep evolving, behavior shifts, and context changes. Sometimes the smallest change can make a big difference.

So you need an MVP mindset: launch fast, monitor closely, iterate continuously. It’s not about launching the perfect agent. It’s about launching safely, then learning fast and iterating, over and over again.

That phrase “building the plane while we fly it” is REAL with agents. And that also means you need a new kind of version control.

Traditional software is versioned at the code level, with mature tooling to support it — Git commits, branching strategies, pull requests, and CI/CD pipelines. When something breaks, you can inspect the diff, isolate the issue, roll back the change, and ship a fix.

But that model doesn’t map cleanly onto agents.

You can update an LLM prompt and watch the agent behave completely differently — even though nothing in your git history changed. Model weights shift. Retrieval indexes get updated. Tool APIs evolve. Suddenly, the same input produces a different output — and you’re left trying to debug a ghost.

As we scale to tens, hundreds, and thousands of agents, version control is essential to contain the chaos. That means versioning much more than code:

- Prompts and system messages

- Tool schemas and handlers

- Memory configs

- Model settings

- Full execution traces (inputs, outputs, reasoning steps, tool calls)
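One way to version behavior rather than just code is to snapshot everything that shapes it, derive a version id from that content, and stamp every execution trace with the id. The sketch below assumes hypothetical `AgentVersion` fields and a made-up model name; it uses only the standard library (`hashlib`, `json`, `dataclasses`).

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentVersion:
    """Behavior-shaping configuration, versioned as one unit (fields are illustrative)."""
    system_prompt: str
    tool_schemas: dict    # tool name -> JSON Schema for its arguments
    memory_config: dict
    model_settings: dict  # e.g. model identifier, temperature

    def version_id(self) -> str:
        """Content hash over all fields: changing any one of them yields a new id."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def trace_record(version: AgentVersion, inputs: str, output: str,
                 reasoning: list[str], tool_calls: list[dict]) -> dict:
    """Tie one execution trace to the exact configuration that produced it."""
    return {
        "version": version.version_id(),
        "inputs": inputs,
        "output": output,
        "reasoning": reasoning,
        "tool_calls": tool_calls,
    }


base = dict(
    system_prompt="You review contracts.",
    tool_schemas={"search": {"type": "object", "properties": {"q": {"type": "string"}}}},
    memory_config={"window": 20},
    model_settings={"model": "example-model", "temperature": 0.2},
)
v1 = AgentVersion(**base)
v2 = AgentVersion(**{**base, "model_settings": {"model": "example-model", "temperature": 0.7}})
print(v1.version_id(), v2.version_id())  # two different ids: only temperature changed
```

A content hash only covers what you record. A hosted model whose weights change behind the same identifier, or a retrieval index rebuilt in place, will not change the id unless you also record something like a model snapshot or index version as a field.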

Version for behavior, not code

Maintenance is a fallacy: with agents, you can’t sit back and watch. In traditional software, observability tells you “what happened” through logs, errors, and latency, and performance is measured in binary terms: pass or fail.

But that’s not enough for agents.

Escalation logic is often missing, so agents either fail silently or alert unpredictably. Lifecycle governance is rarely in place, so agents keep running long after they’re useful. With agents, we need a new layer of SUPERVISION, one that asks “SHOULD that have happened?”

This introduces an entirely new set of primitives to govern agents:

- Semantic outcome tracking to evaluate alignment, not just output

- Behavioral timelines to audit decisions over time

- Confidence thresholds and escalation rules to govern risk
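Confidence thresholds, escalation rules, and a behavioral timeline can be combined in a small supervisory layer that sits between an agent’s decision and its action. This sketch assumes the agent reports a confidence score and that actions are tagged with a risk tier; the `Supervisor` class, the tiers, and the thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    action: str
    confidence: float  # agent's confidence in this decision, 0 to 1
    risk: str          # illustrative risk tier, e.g. "low" or "high"


# Hypothetical escalation rules: riskier actions need more confidence to proceed alone.
THRESHOLDS = {"low": 0.6, "high": 0.9}


@dataclass
class Supervisor:
    timeline: list[dict] = field(default_factory=list)  # behavioral timeline for audits

    def review(self, decision: Decision) -> str:
        """Return "proceed" or "escalate" and append the decision to the timeline."""
        # Unknown risk tiers fail closed: an infinite threshold always escalates.
        threshold = THRESHOLDS.get(decision.risk, float("inf"))
        verdict = "proceed" if decision.confidence >= threshold else "escalate"
        self.timeline.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": decision.action,
            "confidence": decision.confidence,
            "threshold": threshold,
            "verdict": verdict,
        })
        return verdict


supervisor = Supervisor()
print(supervisor.review(Decision("send_summary", 0.75, "low")))       # proceed
print(supervisor.review(Decision("approve_contract", 0.75, "high")))  # escalate
```

Recording the threshold alongside each verdict means the timeline can later answer “should that have happened?” even after the rules themselves have changed.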

These new primitives — semantic tracking, behavioral timelines, confidence governance — represent a fundamental shift in how we think about software. We’re not just versioning code anymore. We’re versioning intelligence, behavior, and decision-making. This requires us to rethink everything we know about building and maintaining systems.

Help us imagine the future

As an industry, we’ve spent the last 30 years mastering software development. We turned software into a well-oiled machine: predictable, structured, and fast to ship. We optimized it to a science — for deterministic systems.

But agents aren’t like the deterministic software of the past. And they don’t behave like it. So today, I’m asking you to forget EVERYTHING you know about the SDLC. The tools we have today have completely democratized what it means to build. Now, anyone can prototype an idea in 60 seconds. No Figma, no PMs, no code. The speed of creation is unlike anything we’ve seen before.

It’s an INCREDIBLE unlock, but it’s causing hell for developers.

We’re testing our theory and we need your feedback

The reality is — you can’t build agents the same way you build software. It’s categorically different. Agents don’t follow rules. They’re outcome-driven. They interpret. They adapt. Their behavior only emerges in real-world environments. And the SDLC is breaking under the weight of it.

Anthony Alcaraz, a senior AI Strategist at Amazon, put it nicely.

“The future isn’t purely probabilistic. It’s about strategic determinism through structured output. The most successful agent systems I’ve seen use a hybrid approach:

- Deterministic infrastructure (schemas, protocols, safety rails)

- Probabilistic intelligence (reasoning, creativity, adaptation)

Think of it like building a jazz band—you need solid musical structure (deterministic) so musicians can improvise brilliantly (probabilistic).”
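Alcaraz’s split maps naturally onto code: the model’s output is free-form and probabilistic, but the contract it must satisfy is fixed. The sketch below illustrates that idea with a hand-rolled JSON check and a stubbed model call; the field names, allowed values, retry count, and fallback are assumptions, not a description of any particular system.

```python
import json
from typing import Callable, Optional

# Deterministic infrastructure: a fixed contract for what the model may return.
# Field names and allowed values are hypothetical.
REQUIRED_FIELDS = {"clause_type": str, "risk": str, "summary": str}
ALLOWED_RISK = {"low", "medium", "high"}
FALLBACK = {"clause_type": "unknown", "risk": "high", "summary": "Output failed validation; escalate."}


def validate(raw: str) -> Optional[dict]:
    """Return the parsed object if it matches the contract, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    if any(not isinstance(data.get(k), t) for k, t in REQUIRED_FIELDS.items()):
        return None
    return data if data["risk"] in ALLOWED_RISK else None


def run_with_rails(generate: Callable[[], str], max_attempts: int = 2) -> dict:
    """Let the (probabilistic) model try a few times; never pass unvalidated output on."""
    for _ in range(max_attempts):
        parsed = validate(generate())
        if parsed is not None:
            return parsed
    return dict(FALLBACK)  # deterministic safety rail: treat as high risk


# Stub standing in for a model call: the first reply is prose, the second is valid JSON.
replies = iter([
    "Sure! This clause looks risky to me.",
    '{"clause_type": "indemnity", "risk": "high", "summary": "Uncapped liability."}',
])
print(run_with_rails(lambda: next(replies)))
```

In practice a schema library, or a model provider’s structured-output support where it exists, would replace the hand-written checks; the design point is the same: nothing unvalidated crosses the boundary.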

If you’re finishing this piece and nodding your head in agreement, we want to hear from you! To make the ADLC a standard that can be widely adopted, it needs validation. Just as DORA metrics proved their worth through public review and testing, we hope to work with partners, customers, and peers to get real data behind the strategies we’re proposing.

If you’ve read this far and are shaking your head because you don’t agree with some of the ideas we’ve shared, even better! We need to challenge our assumptions and engage in open debate if we’re going to create something that earns widespread trust.

You can read our blog on Agent Builder to learn more about what the platform offers or dive into our docs to learn more about how you can start crafting your first agent today. If you are interested in sharing your feedback on the ADLC, fill out the form below and we’ll be in touch.