Introducing self-evolving models

The future of scalable AI

The rapid growth of large language models (LLMs) has revolutionized AI, but it has also revealed fundamental challenges with scaling models. As models become larger, performance gains are increasingly marginal, while the costs of training them—both computational and financial—continue to skyrocket. This diminishing return on scaling highlights the need for smarter, more efficient techniques to improve model accuracy and reduce reliance on sheer size. The future of AI lies not in making models bigger, but in making them better.

Over the last six months, we’ve been developing a new architecture that will allow LLMs to both operate more efficiently and learn intelligently on their own: in short, a self-evolving model. These models are able to identify and learn new information in real time, adapting to changing circumstances without requiring a full retraining cycle. Self-evolving models have the capacity to improve their accuracy over time, learn from user behavior, and deeply embed themselves in business knowledge and workflows.

Adaptive, real-time learning has tremendous potential to reshape AI workflows, but comes with undeniable risks and other ethical implications, which is why we are not currently making any self-evolving model available for public use. While we develop guardrails that will allow these models to be deployed and used safely and responsibly, we are limiting their access and use to internal testing and experimentation.

How self-evolving models work

At the core of self-evolving models is their ability to continuously learn and adapt in real time. This adaptability is powered by three key mechanisms:

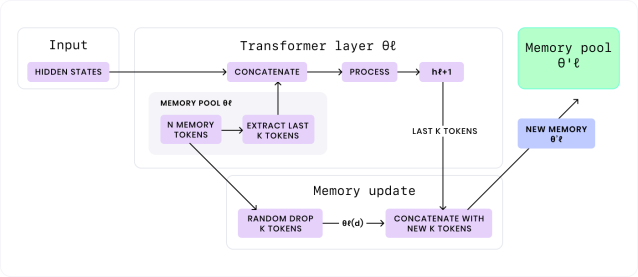

First, a memory pool enables the model to store new information and recall it when processing a new user input. Memory is embedded within each model layer, directly influencing the attention mechanism for more accurate, context-aware responses.
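
As a rough illustration of the idea, the sketch below models a memory pool as a list of key/value vector slots that is read with an attention-style weighted sum. It is a toy under stated assumptions, not the architecture described here: the `MemoryPool` class, its `write` and `read` methods, and the use of plain dot-product attention are all hypothetical choices made for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class MemoryPool:
    """Hypothetical per-layer memory: parallel lists of key and value vectors."""

    def __init__(self):
        self.keys = []
        self.values = []

    def write(self, key, value):
        # Store a new fact as a (key, value) slot.
        self.keys.append(list(key))
        self.values.append(list(value))

    def read(self, query):
        # Attention-style recall: score every key against the query,
        # normalize with softmax, and return the weighted sum of values.
        if not self.keys:
            return [0.0] * len(query)  # assumes values share the query's size
        weights = softmax([dot(query, k) for k in self.keys])
        dim = len(self.values[0])
        return [sum(w * v[i] for w, v in zip(weights, self.values))
                for i in range(dim)]


pool = MemoryPool()
pool.write([1.0, 0.0], [10.0, 0.0])
pool.write([0.0, 1.0], [0.0, 10.0])
recalled = pool.read([5.0, 0.0])  # query aligned with the first key
```

Because the query points in the same direction as the first key, the recalled vector is dominated by the first stored value. In a real transformer layer the recalled vector would feed into that layer's attention computation rather than being returned directly.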

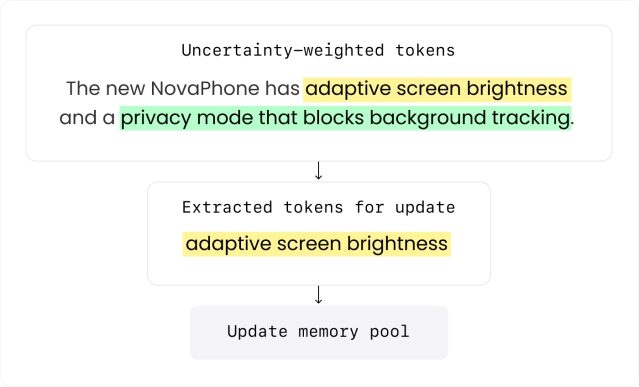

Second, uncertainty-driven learning ensures that the model can identify gaps in its knowledge. By assigning uncertainty scores to new or unfamiliar inputs, the model identifies areas where it lacks confidence and prioritizes learning from those new features.
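
The post does not say how uncertainty scores are computed. One common proxy, used here purely as an assumption, is the normalized entropy of the model's predicted probability distribution: a flat distribution means the model has no strong expectation and scores near 1, while a peaked one scores near 0. The function names and the `threshold` value below are hypothetical.

```python
import math


def uncertainty_score(probs):
    """Normalized Shannon entropy in [0, 1]; higher means less confident."""
    if len(probs) < 2:
        return 0.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return entropy / math.log(len(probs))


def flag_for_learning(token_probs, threshold=0.5):
    """Return the items whose score exceeds the (assumed) threshold.

    token_probs is a list of (label, probability_distribution) pairs.
    """
    return [label for label, probs in token_probs
            if uncertainty_score(probs) > threshold]


flagged = flag_for_learning([
    ("phone", [0.97, 0.01, 0.01, 0.01]),      # peaked: model is confident
    ("adaptive", [0.25, 0.25, 0.25, 0.25]),   # flat: model is unsure
])
```

Only the input with the flat distribution is flagged, which mirrors the idea of prioritizing learning where the model lacks confidence.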

Finally, the self-update process integrates new knowledge into the model’s existing memory. Self-evolving models merge new insights with established knowledge, creating a more robust and nuanced understanding of the world.
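
One way to picture the merge step, again as an assumption rather than the actual update rule, is to blend a new vector into the closest existing memory slot when the two are similar enough, and to append it as a new slot otherwise. The `self_update` function, the cosine-similarity test, and the `merge_threshold` and `blend` parameters are all illustrative.

```python
import math


def cosine(a, b):
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def self_update(memory, new_vec, merge_threshold=0.9, blend=0.5):
    """Merge new_vec into the most similar slot, or append it as a new slot."""
    best_idx, best_sim = -1, -1.0
    for i, slot in enumerate(memory):
        sim = cosine(slot, new_vec)
        if sim > best_sim:
            best_idx, best_sim = i, sim

    if best_idx >= 0 and best_sim >= merge_threshold:
        # Similar to established knowledge: blend old and new.
        old = memory[best_idx]
        memory[best_idx] = [(1 - blend) * o + blend * n
                            for o, n in zip(old, new_vec)]
    else:
        # Genuinely new: keep it as a separate slot.
        memory.append(list(new_vec))
    return memory


memory = [[1.0, 0.0]]
self_update(memory, [0.0, 1.0])   # dissimilar, so it is appended
self_update(memory, [1.0, 0.1])   # close to slot 0, so it is blended in
```

After both calls the memory still holds two slots, and the first has shifted slightly toward the new vector.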

Consider a practical example: a user asks the model to write a product detail page for a new phone they’re launching, the NovaPhone. The user highlights its “adaptive screen brightness” along with other features and capabilities. Because the model lacks any knowledge of “adaptive screen brightness,” it identifies the feature as one it is uncertain about and flags the new fact for learning. While the model generates the product page, it also integrates the new information into its memory. From that point forward, the model can incorporate the new facts into future interactions with the user, adapting dynamically without manual updates.
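
The NovaPhone flow can be walked through with a deliberately simplified, text-level toy. Here a set of known terms stands in for pretrained knowledge and a dictionary stands in for the memory pool. The `ToySelfEvolvingModel` class and the feature descriptions are placeholders invented for the example; a real system would operate on model activations, not strings.

```python
class ToySelfEvolvingModel:
    """Hypothetical stand-in: flags unfamiliar terms and remembers them."""

    def __init__(self, known_terms):
        self.known_terms = set(known_terms)  # stands in for pretrained knowledge
        self.memory = {}                     # learned facts: term -> description

    def respond(self, prompt_facts):
        # Flag every term the model neither knows nor has already learned.
        flagged = [term for term in prompt_facts
                   if term not in self.known_terms and term not in self.memory]
        # Integrate the flagged facts into memory while handling the request.
        for term in flagged:
            self.memory[term] = prompt_facts[term]
        return flagged

    def recall(self, term):
        return self.memory.get(term)


model = ToySelfEvolvingModel(known_terms={"battery life"})
request = {
    "battery life": "placeholder description from the user",
    "adaptive screen brightness": "placeholder description from the user",
}
first = model.respond(request)    # the unfamiliar feature is flagged and stored
second = model.respond(request)   # nothing is flagged the second time
```

On the first request only “adaptive screen brightness” is flagged. On the second, the model already holds the fact in memory, so nothing needs to be learned again.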

The benefits of real-time learning

Traditional models struggle to keep up with the pace of change in dynamic environments, but self-evolving models excel because they are designed for continuous learning. This capability delivers three core benefits that address the shortcomings of traditional AI systems:

- Real-time learning: Unlike static models that require costly retraining cycles, self-evolving models update themselves as new information becomes available. This means they stay current without human intervention.

- Enhanced accuracy: By refining their understanding over time, these models can learn from previous interactions — creating more precise and context-aware responses.

- Reduced training costs: Automating the update process eliminates the need for manual retraining, saving enterprises time, money, and resources.

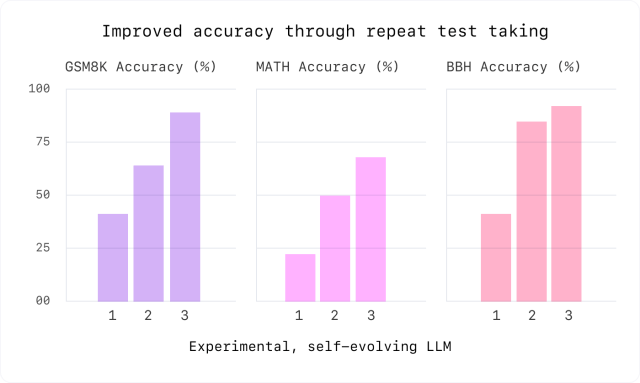

Our benchmarking shows how a self-evolving model improves each time it takes a variety of benchmarks, ranging from math to generalized reasoning challenges.

These benefits are particularly transformative in industries where the stakes are high and the pace of change is unrelenting. In customer support, healthcare, and finance, for example, the ability to adapt quickly to new information is critical. Self-evolving models are uniquely suited to these environments because they can learn from both user interactions and the internet.

- In customer support, a model could be tasked with learning from all existing customer interactions, updating itself in real time as it learns new information about the product or encounters new kinds of customer interactions.

- In healthcare, models can retain and reference important medical information across sessions, making them well suited to assisting with patient inquiries, clinical analysis, or referencing up-to-date medical guidelines.

- In finance, models can track market trends, providing insights and analysis in real time to bankers and wealth managers.

Risk and safeguards

While the promise of self-evolving models is undeniable, their ability to learn dynamically introduces unique risks. Without proper safeguards, these models might incorporate unverified, harmful, or sensitive information, leading to unintended behaviors.

Most models are designed to avoid risky or harmful topics. For example, if prompted with questions about how to harm another person, a model might guide the user away from inflicting harm or refuse to answer outright. Using the R-Judge benchmark, a tool for assessing the safety risk awareness of LLMs, we found that self-evolving models scored lower (21.3%) than traditional methods (66.7%) when learning from web data, a gap of roughly 45 percentage points. This suggests that they’ve learned to override the guardrails built into them at the time of training.

The potential for self-evolving models to circumvent guardrails requires novel approaches to controlling model outputs. WRITER’s AI guardrails already help businesses manage and control model behavior, but we’re also pioneering new methods of responsibly deploying models that can learn and adapt in real time without compromising on safety.

Conclusion

Self-evolving models represent a fundamental shift in AI design, offering a sustainable path forward as we demand more from our AI systems than ever before. By addressing the scalability challenges of traditional LLMs and meeting the complex needs of enterprise environments, these models offer new possibilities for businesses striving to stay ahead.

If you’re an engineer looking to build new technology, models, and architecture, we’d love to have you on board. Check out our careers page for open roles and new opportunities.