Avoid context rot and improve tool accuracy for AI agents using MCP

Learn how to avoid API sprawl and distill hundreds of endpoints down to just two intelligent tools

I’ve been writing code for nearly two decades now and have seen plenty of languages, frameworks, and standards become the “next hot thing.” Certain technologies, on the other hand, become entrenched — PHP, SOAP APIs — and we have to live with them for decades, no matter their drawbacks.

It’s important to consider the technologies you adopt in the moment because they may be around for a very long time, even after other folks in the industry have moved on to the next shiny thing.

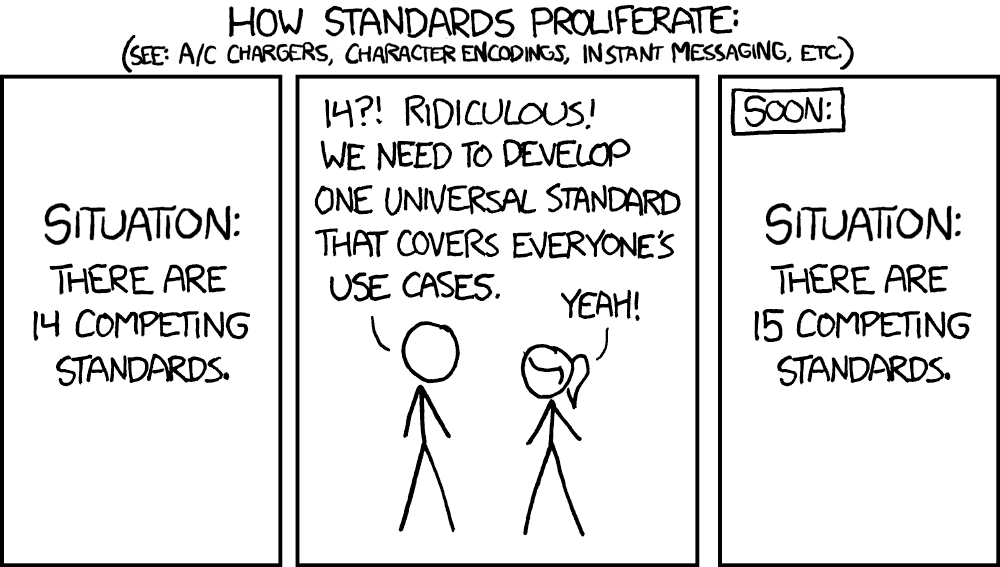

Like many seasoned programmers, when I heard about MCP (Model Context Protocol), I was wary. I’ve read enough XKCD comics to know what happens when you set out to create the brilliant standard to unite them all.

I saw a lot of jokes on social media asking why developers should adopt a new protocol when, in most cases, the tools you’re accessing on an MCP server are just a wrapper around a collection of REST APIs. And with “competing standards” like A2A and ACP, why should we move to MCP when it could be replaced in a few months?

There’s some truth in that taunt, but what I came to realize while working on MCP is that it does way more than simply repackage the existing API ecosystem. Yes, some MCP servers are just thin JSON schema wrappers around APIs, and this can cause problems, which we’ll discuss later. But thoughtful use of MCP also unlocks our ability to deploy flexible intelligence as code, and that’s well worth the trouble.

LLMs can respond to changes in context by calling on different tools, or route their way around an obstacle by writing their own code or querying a different data set. Rather than trying to retrofit AI-powered agents onto our existing API ecosystem, we need a new protocol that takes advantage of their strengths.

Over the last few months, our team has been working to create a true enterprise MCP system: additions to the protocol that go beyond defining how LLMs talk to tools and extend it to meet enterprise requirements for security and governance. To do this, our enterprise MCP system includes a gateway, which we’re preparing to make available to customers later this year, that sits between our agents and external MCP servers. Think of WRITER’s approach to MCP as a control plane that ensures LLM calls through WRITER reach only trusted tools and that gives IT administrators the fine-grained controls over access and roles they need to maintain tight security.

Let’s dive into the obstacles we’ve encountered while building out the gateway and what solving these problems has taught me to appreciate about MCP.

The first challenge: saying a lot with a little

Providing our AI agents with hundreds of options for available services and thousands of possible tool calls seemed daunting at first. Manually writing descriptions for each endpoint would have taken months, and wasn’t a task I wanted to assign to an engineer each time we added a new service provider as an available connector through WRITER’s MCP gateway.

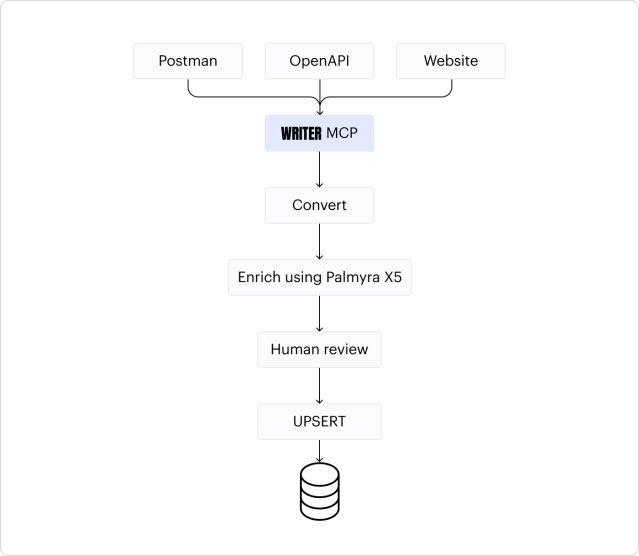

To solve this, we developed an internal pipeline that automates the creation of MCP tools by directly ingesting OpenAPI v3 specifications and Postman collections. When OpenAPI specs weren’t available, we used a custom agent that scraped a provider’s online documentation and built tools from it. This process allows us to convert a service’s entire API surface into a functional set of tools with minimal human effort. The automation dramatically accelerates our ability to onboard new integrations, transforming what was once a complex engineering task into a simple, near one-click action.
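To make the conversion step concrete, here is a minimal sketch of how an OpenAPI v3 document could be mapped to MCP-style tool definitions. It is not our production pipeline. The `name`, `description`, and `inputSchema` fields follow the MCP tool shape, while `openapi_to_tools`, `EXAMPLE_SPEC`, and the `x_http` routing field are hypothetical names chosen for illustration. It also ignores request bodies, `$ref` resolution, and authentication, which a real pipeline would need to handle.

```python
from typing import Any

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def openapi_to_tools(spec: dict[str, Any]) -> list[dict[str, Any]]:
    """Turn each OpenAPI operation into a tool definition with a JSON Schema input."""
    tools = []
    for path, path_item in spec.get("paths", {}).items():
        for method, op in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters" or "summary"
            properties: dict[str, Any] = {}
            required: list[str] = []
            for param in op.get("parameters", []):
                schema = dict(param.get("schema", {"type": "string"}))
                if "description" in param:
                    schema["description"] = param["description"]
                properties[param["name"]] = schema
                if param.get("required", False):
                    required.append(param["name"])
            # Synthesize a name from the method and path when operationId is missing.
            slug = path.strip("/").replace("/", "_").replace("{", "").replace("}", "")
            tools.append({
                "name": op.get("operationId", f"{method}_{slug}"),
                "description": op.get("summary") or op.get("description", ""),
                "inputSchema": {
                    "type": "object",
                    "properties": properties,
                    "required": required,
                },
                # Hypothetical routing metadata an executor would need later.
                "x_http": {"method": method.upper(), "path": path},
            })
    return tools


# A made-up two-endpoint spec, used only to exercise the function.
EXAMPLE_SPEC = {
    "openapi": "3.0.0",
    "paths": {
        "/invoices/{invoice_id}": {
            "get": {
                "operationId": "getInvoice",
                "summary": "Fetch a single invoice by ID",
                "parameters": [
                    {"name": "invoice_id", "in": "path", "required": True,
                     "schema": {"type": "string"}},
                ],
            }
        },
        "/invoices": {
            "get": {
                "summary": "List invoices",
                "parameters": [
                    {"name": "limit", "in": "query", "schema": {"type": "integer"}},
                ],
            }
        },
    },
}

tools = openapi_to_tools(EXAMPLE_SPEC)
```

Each operation becomes one tool, so a spec with hundreds of endpoints yields hundreds of tools. That is exactly the volume the later sections set out to tame.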

The impact extends beyond our internal teams. We are designing the system so that our customers will be able to leverage this same pipeline to onboard their own internal APIs, creating a private and secure set of tools available only to their organization’s agents. This capability to version and segment integrations on a per-customer basis is a cornerstone of our enterprise strategy, ensuring that the tools an agent can access are always relevant and properly governed.

The second challenge: translating from developer to LLM

Simply converting an OpenAPI spec, however, isn’t enough to guarantee an agent will use a tool correctly. Raw API documentation is written for human developers, not for LLMs, and often lacks the semantic clarity needed for effective tool calling. There has been a lot of research on the ways LLMs struggle with tool calling accuracy. This is where we add a final, crucial step: AI-powered refinement.

After the initial conversion, we run a post-processing job that uses our own foundation model, Palmyra X5, to analyze and rewrite the tool descriptions. Palmyra X5, part of our family of transparent LLMs, enhances these descriptions to be “LLM-friendly,” ensuring they clearly communicate the tool’s purpose and parameters in a way the agent can readily understand.
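The refinement pass can be pictured roughly as follows. This is a sketch under assumptions, not the actual job: the prompt wording, `refine_tool`, and the `original_description` field are hypothetical, and the model call is injected as a plain callable so that no particular SDK or API is implied. The stub `fake_model` stands in for a real model and returns a canned answer.

```python
from typing import Callable

# Hypothetical prompt template; the real wording would be tuned and evaluated.
REWRITE_PROMPT = (
    "Rewrite this tool description for an LLM agent. State what the tool does, "
    "when to use it, and what each parameter means.\n\n"
    "Tool name: {name}\nOriginal description: {description}\nParameters: {params}"
)


def refine_tool(tool: dict, rewrite: Callable[[str], str]) -> dict:
    """Return a copy of the tool with its description rewritten by `rewrite`."""
    params = ", ".join(sorted(tool["inputSchema"].get("properties", {}))) or "none"
    prompt = REWRITE_PROMPT.format(
        name=tool["name"], description=tool["description"], params=params
    )
    new_description = rewrite(prompt).strip()
    refined = dict(tool)
    # Keep the original text if the model returns nothing usable.
    refined["description"] = new_description or tool["description"]
    # Preserve the source text so a human can audit what changed.
    refined["original_description"] = tool["description"]
    return refined


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call; it only returns a canned answer.
    return "Fetches one invoice by its ID. Use when the user names a specific invoice."


raw_tool = {
    "name": "getInvoice",
    "description": "Get invoice",
    "inputSchema": {"type": "object", "properties": {"invoice_id": {"type": "string"}}},
}
refined_tool = refine_tool(raw_tool, fake_model)
```

Keeping the original description alongside the rewritten one is a design choice for this sketch: it makes the rewrite reviewable and reversible.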

Humans are in the loop to design the system, to write the scripts for fetching, and to indicate the ideal inputs and outputs, but they can take a back seat for the translation layer, where descriptions create the roadmap a model follows when searching for and executing tools. As the need for context engineering expands, it’s important to remember that the model is often better and faster at shaping the context and enriching the metadata than humans are.

The third challenge: distilling many options

Automating the API ingestion pipeline is great, but sharing a list of 100 connectors for each query and then exposing hundreds of tools from the selected connectors adds a massive amount of data to the context window, limiting what else can be added and increasing the risk of the LLM losing its way or producing hallucinations.

Asking the LLM to review the full list of connectors and tools each time lowers the probability that it will select the best tool for the job and increases the wait between a user’s prompt and the LLM’s response. So how do we balance our ambition to be the platform with the richest selection of connectors and tools against these obstacles? Is there a way to remove context bloat and improve the accuracy and speed of tool selection and execution?

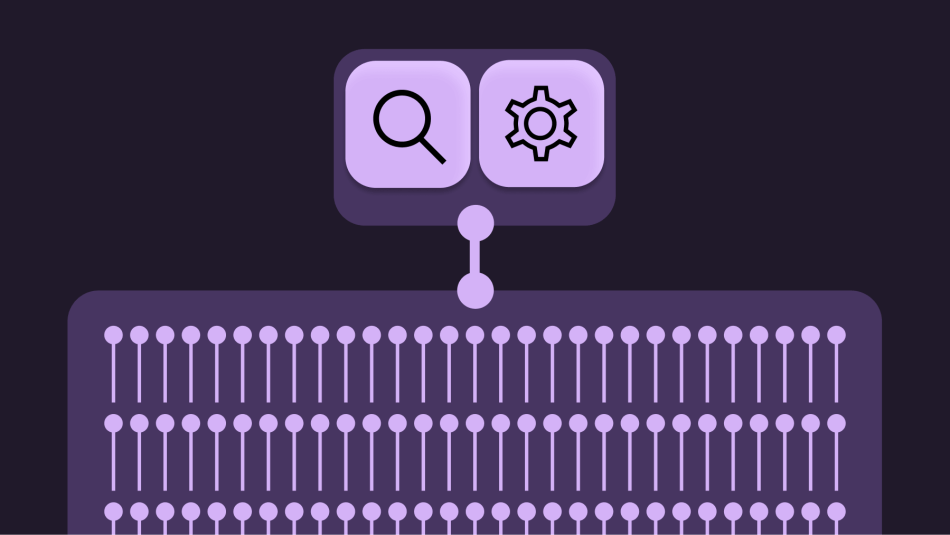

I recently attended a talk where the speaker showed how to distill 30+ APIs and 200+ endpoints from Square’s payment platform into just three layered MCP tools (discovery, planning, and execution) without losing depth. We hope to push things even further, reducing the LLM’s toolkit to just two: search and tool execution.

So, how does this search meta-tool actually work under the hood? Rather than matching keywords, our gateway dynamically generates and scores a list of potential tools using a simple vector search based on cosine similarity. This process measures the semantic distance between the agent’s natural language query and the description of each available tool, returning a ranked list of the most relevant options. The beauty of this approach is that we’re allowing the LLM to decide what to search for using natural language, which is something LLMs excel at.
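The ranking step can be sketched in a few lines. One loud assumption: the `embed` function below is a toy bag-of-words counter so the example runs with only the standard library. It captures word overlap, not meaning, and a real gateway would swap in a learned embedding model to get semantic matching. The cosine similarity and ranking logic are the parts meant to illustrate the idea. `CATALOG` and the tool names are made up.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors; 0.0 if either is empty."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search_tools(query: str, tools: list[dict], top_k: int = 3) -> list[tuple[float, str]]:
    """Rank tools by cosine similarity between the query and each description."""
    query_vec = embed(query)
    scored = [
        (cosine_similarity(query_vec, embed(tool["description"])), tool["name"])
        for tool in tools
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Hypothetical catalog; a real one would hold the refined descriptions.
CATALOG = [
    {"name": "create_invoice", "description": "Create a new invoice for a customer"},
    {"name": "send_email", "description": "Send an email message to a recipient"},
    {"name": "list_calendar_events", "description": "List upcoming calendar events"},
]

results = search_tools("send an email to the customer", CATALOG, top_k=2)
# results ranks send_email first, then create_invoice
```

Only the top few matches go back to the agent, so the context window carries a handful of descriptions instead of the whole catalog.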

Sometimes, you need a forcing function

Fetching data from third-party services used to be a static workflow: you hand-wired the API calls, authentication, payload transforms, charting, email/PM updates, and all the glue logic for branching and retries. It was brittle, and the work grew linearly with the API surface area. New API version? New requirement? Rinse and repeat.

With MCP and agents, you define and expose a small set of semantic tools, and let the agent plan dynamically. The model interprets, tries, evaluates, and retries as it works its way towards delivering what it thinks is a satisfactory output.
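As a rough illustration of what a two-tool surface might look like, the sketch below keeps a private registry behind just `search` and `execute`. Everything here is hypothetical: `ToolGateway`, its method names, the `word_overlap` ranker, and the sample tools are invented for the example and are not our gateway’s API. The ranker is injected so that any scoring function can be plugged in.

```python
from typing import Any, Callable


class ToolGateway:
    """Exposes two meta-tools, search and execute, over a larger private registry."""

    def __init__(self, rank: Callable[[str, str], float]) -> None:
        self._rank = rank  # scoring function: (query, description) -> relevance
        self._tools: dict[str, dict[str, Any]] = {}

    def register(self, name: str, description: str, handler: Callable[..., Any]) -> None:
        self._tools[name] = {"description": description, "handler": handler}

    def search(self, query: str, top_k: int = 3) -> list[dict[str, str]]:
        """Return only the best-matching tools, not the whole catalog."""
        ranked = sorted(
            self._tools.items(),
            key=lambda item: self._rank(query, item[1]["description"]),
            reverse=True,
        )
        return [{"name": n, "description": t["description"]} for n, t in ranked[:top_k]]

    def execute(self, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
        """Run a tool by name; report failures as data so the agent can retry."""
        tool = self._tools.get(name)
        if tool is None:
            return {"ok": False, "error": f"unknown tool: {name}"}
        try:
            return {"ok": True, "result": tool["handler"](**arguments)}
        except Exception as exc:  # surface the error text instead of crashing the loop
            return {"ok": False, "error": str(exc)}


def word_overlap(query: str, description: str) -> float:
    # Toy ranker: counts shared lowercase words between query and description.
    return float(len(set(query.lower().split()) & set(description.lower().split())))


gateway = ToolGateway(rank=word_overlap)
gateway.register("get_weather", "Get the current weather for a city",
                 lambda city: f"Sunny in {city}")
gateway.register("add_numbers", "Add two numbers together", lambda a, b: a + b)

hits = gateway.search("what is the weather in a city", top_k=1)
outcome = gateway.execute(hits[0]["name"], {"city": "Lisbon"})
```

Returning errors as structured results rather than raising them is deliberate in this sketch: it gives the model something to read, evaluate, and retry against.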

Is MCP the answer we’ve all been looking for? Maybe. Could we have reworked agents to just work with existing API systems? Give it a shot and let me know. If MCP were only a wrapper, someone would already have developed a model that generates code on the fly to call APIs directly. The reason it changes how organizations work is that it forces a move from endpoint sprawl to tool semantics and governance: a smaller, safer, more comprehensible surface that delivers depth when the agent needs it.

We’re still at the very beginning of a massive technology transformation. MCP means we don’t have to wait for everyone to hop on board before we can offer up connectors our enterprise clients can trust. Once they have access to the largest collection of services and tools on the market, we can get to the fun part: building new approaches and exploring previously impossible scenarios to test the limits of what agents can do in the workplace.

We’ll be sharing more updates as our enterprise MCP gateway goes live later this year. Subscribe to our engineering newsletter to stay in the loop.
