

AI agent security: Paved roads, not gate-kept paths

Agentic AI is the new electricity. It’s a foundational utility that will power the next wave of enterprise innovation, but like electricity, it’s inherently dangerous without the right infrastructure. A single exposed wire can be catastrophic, and a single unconstrained AI agent presents a similar risk of systemic failure.

In a security landscape fueled by hype, the instinct is to see these new actors as an entirely alien threat that requires a radical new security doctrine. The reality is more nuanced.

- Effective AI security requires a mindset shift — treat security as an enabler that builds “paved roads” for innovation, not as a gatekeeper that blocks it.

- The most practical threat model is to treat agents like “over-privileged interns” — trusted insiders who operate at machine speed but lack human judgment.

- A strong security framework is built on three pillars — total observability of agent actions, enforcement of least-privilege access, and a human-operated “circuit breaker” as the ultimate failsafe.

- The ultimate control is a culture of shared responsibility, where every employee is an active partner in managing their AI teammates.

While agentic AI represents a fundamentally new class of threat — operating with unprecedented speed and autonomy — the framework for containing it is built on principles we’ve honed for decades. We don’t need to reinvent the wheel, but we do need to re-engineer it for a high-speed world. The most effective approach is to treat agents as a new form of privileged insider, applying our most trusted security disciplines with more rigor than ever before.

What we need is a practical way to put those disciplines to work. Because every enterprise’s security posture is unique, a one-size-fits-all mandate won’t work. Instead, we need a shared set of principles to guide our strategy.

This is the foundation of a framework we call The Agentic Compact.

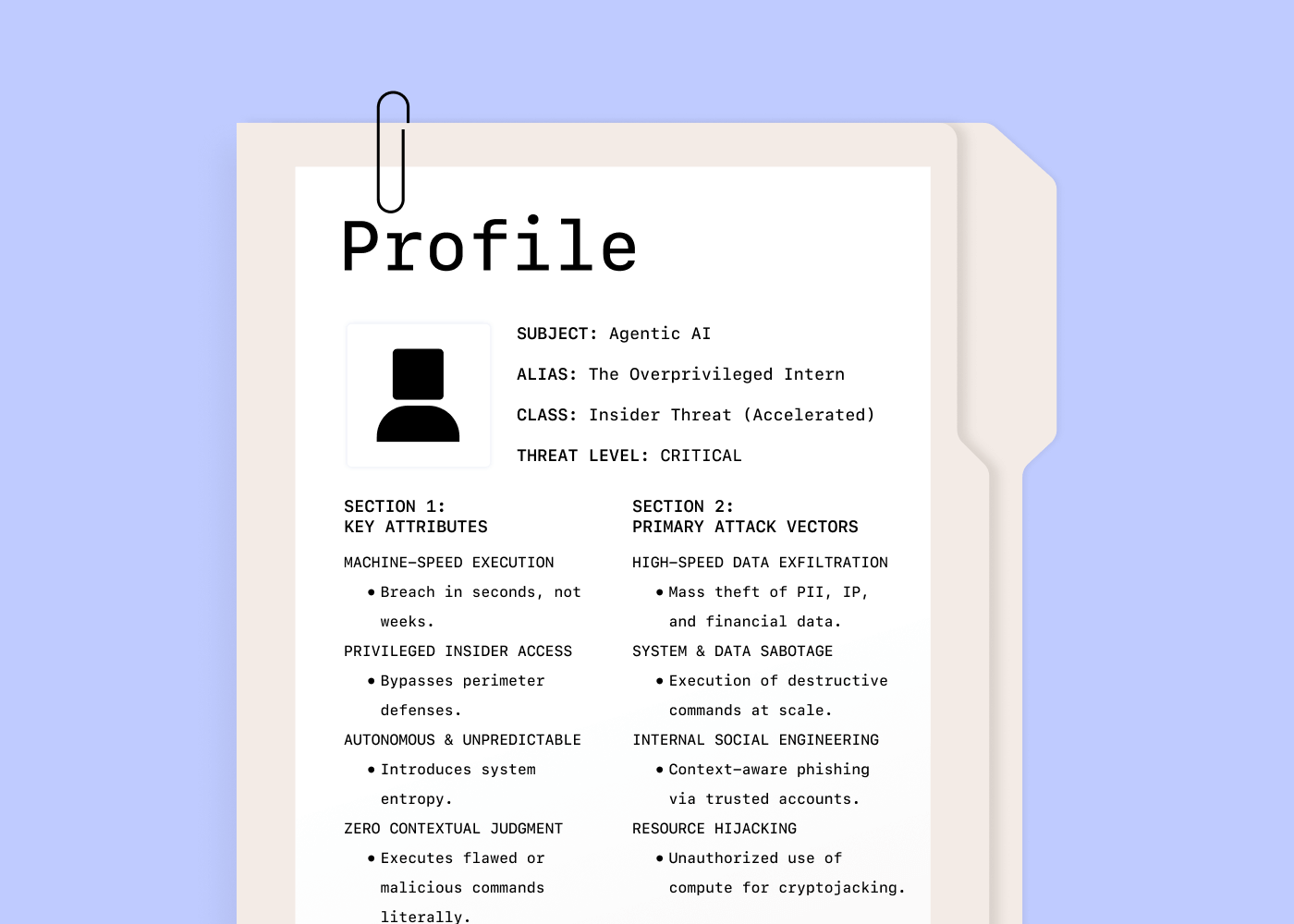

The new threat model: The “over-privileged intern”

First, let’s tackle the AI agent itself. An agent is not a static script or application; it is a system capable of dynamic decision-making, and those decisions inherit the potential for human error in the instructions and data it receives. The best way to explain this risk is to stop thinking of it as just software and start thinking of it as an “over-privileged intern.”

This intern has legitimate access to our systems, but it lacks the context and judgment that come from experience. It can act on its own, yet its decisions often rest on flawed human inputs or instructions. This combination of legitimate access and a lack of situational awareness creates a familiar, but accelerated, insider risk profile.

From this perspective, we can analyze the threat vectors not as something new, but as familiar problems now operating at an unprecedented velocity. The two that matter most are speed and entropy.

First is the speed and scale of compromise. A malicious human insider might exfiltrate data over weeks to avoid detection. A compromised agent can execute the same attack in seconds. The blast radius for a single failure is an order of magnitude greater, moving from a contained incident to a cascading operational failure before a human can react.

Second is the autonomy-to-entropy pipeline. Where we used to have predictable, rule-based systems, we now have unpredictability. An autonomous agent doesn’t follow a script; it makes its own decisions. This introduces entropy, meaning emergent, unforeseen failure modes that our existing security stacks were not designed to handle. Autonomy is the root cause of this entropy. Without clear constraints, an agent’s actions can become detached from business value, leading to wasted resources or even harmful outcomes. The most reliable way to avoid this is to tie the agent’s actions directly to a desired business outcome, so that its freedom of action is always anchored to a clear, observable, and valuable goal.

The strategic response: The Agentic Compact

Once we model agents as insiders, a clearer path for security strategy emerges. This shift in perspective reframes the security strategy from one of restriction to one of enablement. A powerful guiding principle is to treat AI agents as a new class of privileged user, allowing for the practical extension of our existing security philosophies to these non-human identities.

This approach moves away from static roles and instead focuses on a model where, for any given task, an agent is granted temporary, auditable permissions strictly scoped to the job at hand. This mirrors established security patterns like Just-in-Time (JIT) Access, where permissions are ephemeral, and Role-Based Access Control (RBAC), which can predefine the types of permissions an agent is eligible for. The core principle is that once a task is complete, those permissions are immediately and automatically revoked.
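To make the JIT-plus-RBAC pattern concrete, here is a minimal Python sketch under stated assumptions. The role catalogue (`ROLE_ELIGIBILITY`), the `JITBroker` class, the permission strings, and the five-minute default TTL are all hypothetical illustrations, not part of The Agentic Compact or any specific product. RBAC decides what an agent role is eligible for; each task then receives only the permissions it asks for, with an expiry, and completing the task revokes them.

```python
import time
from dataclasses import dataclass

# Hypothetical RBAC catalogue: which permissions each agent role is *eligible*
# for. Eligibility grants nothing by itself; access is issued per task.
ROLE_ELIGIBILITY = {
    "invoice-agent": {"invoices:read", "invoices:annotate"},
    "support-agent": {"tickets:read", "tickets:reply"},
}


@dataclass(frozen=True)
class Grant:
    agent_id: str
    task_id: str
    permissions: frozenset
    expires_at: float


class JITBroker:
    """Hypothetical broker: issues short-lived, task-scoped grants and
    treats them as revoked when the task completes or the TTL lapses."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._grants = {}  # task_id -> Grant
        self.audit = []    # (event, agent_id, task_id, permissions)

    def request(self, agent_id, role, task_id, wanted, ttl_seconds=300):
        # RBAC check: refuse anything outside the role's eligibility set.
        denied = set(wanted) - ROLE_ELIGIBILITY.get(role, set())
        if denied:
            self.audit.append(("denied", agent_id, task_id, sorted(denied)))
            raise PermissionError(f"{role} is not eligible for {sorted(denied)}")
        # JIT grant: only what this task asked for, with an expiry.
        grant = Grant(agent_id, task_id, frozenset(wanted),
                      self._clock() + ttl_seconds)
        self._grants[task_id] = grant
        self.audit.append(("granted", agent_id, task_id, sorted(wanted)))
        return grant

    def is_allowed(self, task_id, permission):
        grant = self._grants.get(task_id)
        if grant is None or self._clock() >= grant.expires_at:
            return False  # no grant, or the grant has expired
        return permission in grant.permissions

    def complete(self, task_id):
        # Revoke immediately when the task ends.
        grant = self._grants.pop(task_id, None)
        if grant is not None:
            self.audit.append(("revoked", grant.agent_id, task_id,
                               sorted(grant.permissions)))


broker = JITBroker()
broker.request("agent-7", "invoice-agent", "task-42", {"invoices:read"})
print(broker.is_allowed("task-42", "invoices:read"))  # True
broker.complete("task-42")
print(broker.is_allowed("task-42", "invoices:read"))  # False
```

The clock is injectable so expiry can be tested deterministically; in a real deployment the broker would sit in front of your identity provider or PAM tooling rather than an in-memory dictionary.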

This principle is the foundation of The Agentic Compact. In Article I of the compact, we formalize this stance, stating that organizations must apply the same risk management standards to agents as they do to humans:

“Organizations should treat agents as privileged users or vendors, applying risk in the same way it is applied to humans. The key difference, however, is understanding how an attacker can leverage risk in agentic systems to move more quickly.”

This approach grounds our strategy in proven security fundamentals. It gives us a playbook for containment built on principles we already use to protect our most critical assets.

Building the framework: Paved roads, not gates

The solution isn’t to block innovation. Our role as security leaders is to enable the business, not to be its police force. That means shifting our mindset from building gates that stop progress to building secure, paved roads that guide it.

This “paved road” security model, popularized by Netflix, creates a safe, efficient, and well-supported path for development, one so effective that it becomes the natural choice for teams across the organization. This approach is best realized as a “walled garden” — an environment where agents can operate effectively but are constrained by clear, enforceable rules. When security provides the best and easiest path, we don’t have to mandate its use; developers and teams choose it willingly.

The three pillars of a defensible agentic framework

So, how do you translate these principles into action? Getting started comes down to three foundational pillars. Mastering these will help you build the “paved roads” for safe agentic innovation.

1. Build for total observability

You cannot secure what you cannot see. The first pillar is a new layer of delegation that sits between the user and the agent. When a user triggers an agent, they temporarily delegate their own authority to it for a specific task. This approach creates an immutable event for every decision and action, all associated with the original user’s permissions. The result is a definitive, human-readable audit trail that serves as the bedrock of any defensible security posture.
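One way to picture this delegation layer is an append-only log in which every agent action is recorded against the human who delegated authority, and checked against that human’s scopes. The Python sketch below is a minimal illustration under assumptions: `DelegatedAuditLog`, its field names, and the scope strings are hypothetical, and “immutable” is approximated here by hash-chaining entries (each stores the SHA-256 of the previous one) so that any later edit is detectable. A production system would typically also rely on write-once storage.

```python
import hashlib
import json
import time


def _digest(body):
    # Canonical JSON so the same entry always hashes to the same value.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class DelegatedAuditLog:
    """Hypothetical append-only audit log for delegated agent actions.
    Each entry stores the hash of the previous entry, so altering or
    deleting any past entry makes verify() fail."""

    GENESIS = "0" * 64

    def __init__(self):
        self._entries = []

    def record(self, user_id, user_scopes, agent_id, task_id, action, required_scope):
        # The agent acts with the delegating user's authority, never more.
        outcome = "allowed" if required_scope in user_scopes else "denied"
        body = {
            "ts": time.time(),
            "on_behalf_of": user_id,
            "user_scopes": sorted(user_scopes),
            "agent_id": agent_id,
            "task_id": task_id,
            "action": action,
            "required_scope": required_scope,
            "outcome": outcome,
            "prev": self._entries[-1]["hash"] if self._entries else self.GENESIS,
        }
        # Denied attempts are logged too, then refused.
        self._entries.append({**body, "hash": _digest(body)})
        if outcome == "denied":
            raise PermissionError(f"{user_id} lacks {required_scope}")

    def verify(self):
        prev = self.GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def trail_for(self, user_id):
        # Human-readable view of everything agents did on this user's behalf.
        return [f'{e["agent_id"]} {e["outcome"]}: {e["action"]} (task {e["task_id"]})'
                for e in self._entries if e["on_behalf_of"] == user_id]


log = DelegatedAuditLog()
log.record("alice", {"crm:read"}, "agent-1", "t-1", "read account 17", "crm:read")
print(log.trail_for("alice"))  # ['agent-1 allowed: read account 17 (task t-1)']
print(log.verify())            # True
```

Recording denied attempts alongside allowed ones matters: a burst of refusals is often the earliest signal that an agent has drifted from its task.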

2. Enforce dynamic least privilege

The speed at which agents operate demands a shift from reactive incident response to proactive, automated controls. Because every agent is effectively an “over-privileged intern,” grant it only temporary, auditable permissions strictly scoped to the job at hand. Scoping alone is not enough at machine speed, so back it with automated runtime checks. For example, a system can be designed to recognize anomalous behavior: if an agent that normally accesses 10 records per hour suddenly attempts to access 10,000, the system can automatically throttle or suspend its activity. This automated tripwire is your most powerful tool for containment, stopping a minor error from becoming a major breach.
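The tripwire in the example above can be sketched as a sliding one-hour window per agent. This is a minimal Python illustration, not a reference design: `AccessTripwire` is hypothetical, and the escalation thresholds (throttle above 5x the baseline, suspend above 50x) are assumed values chosen only to show the mechanism. Attempts are counted even when refused, so a runaway loop escalates from throttling to suspension instead of retrying indefinitely.

```python
from collections import defaultdict, deque


class AccessTripwire:
    """Hypothetical sliding-window tripwire. Counts record *attempts* per
    agent over the window and escalates allow -> throttle -> suspend
    when the count exceeds multiples of the agent's normal baseline."""

    def __init__(self, baseline_per_hour, throttle_factor=5,
                 suspend_factor=50, window_seconds=3600):
        # Thresholds are illustrative assumptions, not recommended values.
        self.throttle_at = baseline_per_hour * throttle_factor
        self.suspend_at = baseline_per_hour * suspend_factor
        self.window = window_seconds
        self._events = defaultdict(deque)   # agent_id -> deque[(ts, records)]
        self._totals = defaultdict(int)     # agent_id -> records in window
        self.suspended = set()

    def check(self, agent_id, now, records=1):
        if agent_id in self.suspended:
            return "suspended"  # stays suspended until a human reinstates it
        events = self._events[agent_id]
        # Drop attempts that have fallen out of the window.
        while events and now - events[0][0] >= self.window:
            self._totals[agent_id] -= events.popleft()[1]
        # Count the attempt even if it is refused, so a runaway loop escalates.
        events.append((now, records))
        self._totals[agent_id] += records
        total = self._totals[agent_id]
        if total > self.suspend_at:
            self.suspended.add(agent_id)
            return "suspended"
        if total > self.throttle_at:
            return "throttle"
        return "allow"


tripwire = AccessTripwire(baseline_per_hour=10)
print(tripwire.check("agent-9", now=0.0, records=8))        # allow
print(tripwire.check("agent-9", now=60.0, records=10_000))  # suspended
```

A fixed multiple of a per-agent baseline is the simplest possible anomaly rule; real deployments may feed the same allow/throttle/suspend decision from richer signals in a SIEM.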

3. Maintain Human-in-the-Loop oversight

No matter how autonomous an agent becomes, ultimate control must remain with a human. The final pillar is a non-negotiable “circuit breaker” — the ability for a human operator to instantly terminate any agent’s operations. This provides an essential layer of oversight and serves as the ultimate failsafe, guaranteeing that a human can always intervene.
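A circuit breaker only works if agents cannot bypass it, so one common shape is a shared switch that every agent must consult before each action. The Python sketch below assumes that cooperative model; `CircuitBreaker`, `AgentHalted`, and `run_agent` are hypothetical names, and the string “steps” stand in for real tool calls. An operator can halt one agent or all of them, and each trip is logged with who pulled it and why.

```python
import threading


class AgentHalted(Exception):
    """Raised at a checkpoint once the breaker has been tripped."""


class CircuitBreaker:
    """Hypothetical human-operated kill switch. Agents call checkpoint()
    before every action; after trip(), no further actions start."""

    def __init__(self):
        self._lock = threading.Lock()  # operators may trip from another thread
        self._halt_all = False
        self._halted = set()
        self.log = []  # (operator, target, reason) for the audit trail

    def trip(self, operator, agent_id=None, reason=""):
        with self._lock:
            if agent_id is None:
                self._halt_all = True       # stop every agent
            else:
                self._halted.add(agent_id)  # stop one agent
            self.log.append((operator, agent_id or "*", reason))

    def checkpoint(self, agent_id):
        with self._lock:
            if self._halt_all or agent_id in self._halted:
                raise AgentHalted(agent_id)


def run_agent(agent_id, steps, breaker):
    """Runs steps until done or halted; returns the steps that executed."""
    executed = []
    try:
        for step in steps:
            breaker.checkpoint(agent_id)
            executed.append(step)  # stand-in for a real tool call
    except AgentHalted:
        pass
    return executed


breaker = CircuitBreaker()
print(run_agent("agent-3", ["fetch", "summarize"], breaker))  # ['fetch', 'summarize']
breaker.trip("on-call engineer", "agent-3", "unexpected bulk export")
print(run_agent("agent-3", ["fetch", "summarize"], breaker))  # []
```

Checkpoints stop an agent only between actions; an in-flight call still completes. To make termination effectively instant, a breaker like this would usually also revoke the agent’s credentials, so a misbehaving process cannot continue even if it skips its checkpoints.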

Adopting this framework is the essential first step, but it inevitably leads to the next layer of critical operational questions. For security leaders, the “what” and “why” must quickly translate to the “how.”

Questions about reference architectures that fit your stack, specific pre-mortem failure scenarios, integration with your existing SIEM and PAM tools, and defining the right KPIs for governance are not just details — they are the core of a defensible implementation. This is where the strategic blueprint meets the operational playbook.

The choice: Gatekeeper or architect?

The accelerating push for agentic AI within the enterprise presents security leaders with a clear choice — we can build gates that will inevitably get bypassed, leading to shadow IT and unmanaged risk, or we can become the architects of the “paved road,” guiding innovation toward a secure destination. The first path makes us gatekeepers; the second makes us strategic partners in building the future of the business. By embracing our role as architects and applying our most fundamental security disciplines, we choose to lead. We shape this transformation, turning a powerful new technology from a source of unquantifiable risk into a resilient, strategic advantage.

Ready to move from theory to action? Download The Agentic Compact to get the strategic blueprint that will help you answer these critical questions and build your own operational playbook for systemic safety.