Beyond SEO: The triple-threat optimization strategy for visibility in the AI era

Did you catch Gartner’s bombshell prediction that “by 2026, traditional search engine volume will drop 25%, with search marketing losing market share to AI chatbots and other virtual agents”? Their 2024 report sent shockwaves through the marketing world, with implications for how all of us approach content strategy.

The old playbook of ranking #1 on Google simply isn’t enough anymore. As Alan Antin, Gartner’s VP Analyst, notes, “Generative AI solutions are becoming substitute answer engines,” forcing companies to rethink their entire marketing approach. With AI Overviews reaching nearly a billion searchers and tools like Google AI Mode, ChatGPT, Perplexity, and Claude reshaping how people find information, we’re dealing with an entirely new game requiring a triple-threat optimization approach.

- Gartner predicts a 25% drop in traditional search engine volume by 2026, highlighting the importance of optimizing for SEO, AEO, and GEO to maintain visibility in the AI era.

- The triple-threat optimization strategy includes SEO for search rankings, AEO for featured snippets and AI Overviews, and GEO for influencing AI-generated responses.

- The E-E-A-T framework (Experience, Expertise, Authoritativeness, Trustworthiness) is crucial for all three optimization methods because it signals that your content is credible and authoritative.

- Effective content creation strategies involve question-based keyword research, logical heading structures, a mini table of contents, an FAQ section, and semantic HTML to boost visibility and engagement.

- To adapt to the declining influence of traditional search, implement technical strategies like structured data, optimized crawler access, and enhanced speed and performance.

The triple threat: Understanding AEO, GEO, and SEO

The digital content ecosystem has fundamentally shifted. As marketers, we need to optimize for three distinct but interconnected systems. Let’s break them down:

Search Engine Optimization (SEO) remains our foundation for getting content to rank well in traditional search results pages (SERPs). But while it’s still crucial, it’s now just one piece of a much larger puzzle. The competition for finite SERP positions (rank #1, #2, #3) follows a different model than optimization for AI systems.

Answer Engine Optimization (AEO) focuses on becoming the source for direct answers in featured snippets, knowledge panels, and AI Overviews. This is about structuring content to be easily extracted and presented without requiring users to click through to your site. Recent studies show that featured snippets and AI Overviews now appear in nearly half of all Google searches, making this a high-priority channel.

Generative Engine Optimization (GEO) influences how AI tools like ChatGPT, Claude, and Perplexity use your content to generate original responses based on indexed web content. Rather than competing for ranking positions, GEO is about “influencing what an AI engine thinks and says” when responding to relevant queries. It’s less about links and more about being recognized as an authoritative source worthy of citation.

The real challenge? These three systems are rapidly converging. ChatGPT now displays clickable links similar to search results. Google increasingly delivers AI-generated answers directly in SERPs. And emerging platforms like Perplexity blend aspects of both traditional search and generative AI.

Why E-E-A-T is your secret weapon

At WRITER, we’ve found that the E-E-A-T framework isn’t just for SEO. It’s essential for all three optimization approaches. Here’s what each element means:

Expertise: Demonstrating deep knowledge in your subject area through accurate, comprehensive content. This means having content creators who actually understand the topic or consulting with subject matter experts. For example, healthcare professionals should write or review a medical article, not just general copywriters.

Experience: Showing firsthand practical experience with the subject matter. This could be case studies, personal accounts, or practical applications of concepts. Experience signals to users and algorithms that you’ve “been there, done that,” not just researched it.

Authoritativeness: Establishing your brand or organization as a recognized authority in your field. This comes from credentials, citations from other reputable sources, media mentions, and consistent quality content publication in your niche.

Trustworthiness: Building credibility through transparent practices, accurate information, clear sourcing, and up-to-date content. This includes having visible author bios, clear contact information, privacy policies, and quickly correcting errors.

Here’s why this framework matters: AI systems and search engines alike prioritize content from trusted sources that demonstrate real expertise. When algorithms evaluate content — whether for search rankings, featured snippets, or AI-generated answers — they’re increasingly sophisticated at detecting these E-E-A-T signals.

By focusing on these four elements, you’re setting yourself up for success across all content surfaces and platforms.

Content creation strategies that work triple-duty

Let’s get practical. Here are specific strategies we’ve found at WRITER that help content perform better across SEO, AEO, and GEO simultaneously:

Question-based keyword research

Start by uncovering the questions your audience is actually asking:

- Use tools like AnswerThePublic to discover nuanced, multi-dimensional queries around your topics. For instance, when researching “AI policy implementation,” you might discover questions like “What liability frameworks should an enterprise AI governance policy include?” or “How do cross-functional AI policy committees handle model explainability requirements?” — questions that reveal sophisticated information needs beyond basic keyword research.

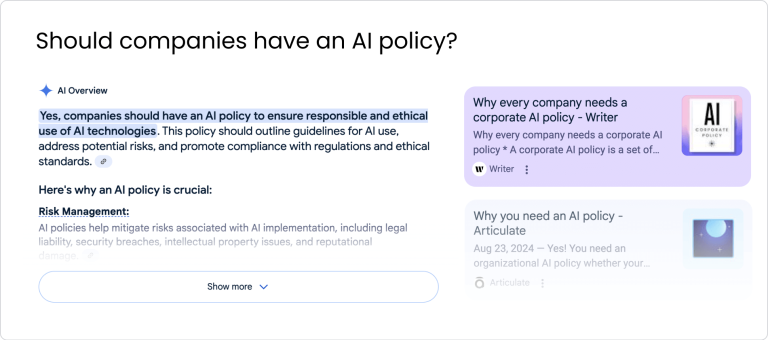

- Identify queries that trigger AI Overviews in Google by testing different search terms and noting which ones generate these special results. Pay special attention to questions with complex informational intent that would benefit from synthesized answers, such as “What metrics indicate effective AI policy compliance?” or “How do industry-specific regulations impact corporate AI policy development timelines?”

- Search for your key topics in Perplexity and mine the “Related” questions it suggests under each answer for additional question ideas (the equivalent of Google’s “People also ask” box). This often reveals context-specific questions like “How do you reconcile conflicting departmental priorities when establishing AI guardrails?” or “What are the implications of third-party AI usage for corporate policy inheritance?” that wouldn’t surface in standard keyword tools.

- Track search queries that lead to your site and identify question-based queries that currently don’t have dedicated content. This helps you fill content gaps with precisely what your audience is already looking for.

This approach helps you create content that directly addresses user needs — a win for all three optimization methods.
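To make the last step concrete, here is a minimal sketch of filtering a search-query export for question-style queries that your existing content doesn't cover yet. The word list, the function names, and the simple substring match against covered topics are all illustrative assumptions, not part of any specific analytics tool.

```python
# Hypothetical list of question openers; extend it for your own audience.
QUESTION_WORDS = (
    "how", "what", "why", "when", "where", "who", "which",
    "can", "should", "does", "do", "is", "are",
)


def is_question(query: str) -> bool:
    """Return True if a search query looks like a question."""
    q = query.strip().lower()
    if not q:
        return False
    if q.endswith("?"):
        return True
    return q.split(" ", 1)[0] in QUESTION_WORDS


def content_gaps(queries, covered_topics):
    """Return question-style queries that mention none of the covered topics."""
    gaps = []
    for query in queries:
        if not is_question(query):
            continue
        lowered = query.lower()
        if not any(topic.lower() in lowered for topic in covered_topics):
            gaps.append(query)
    return gaps


# Example data (made up for illustration).
queries = [
    "how to create a corporate ai policy",
    "writer pricing",
    "what should an ai policy include",
    "ai governance committee charter?",
]
covered = ["corporate ai policy"]

print(content_gaps(queries, covered))
# ['what should an ai policy include', 'ai governance committee charter?']
```

A substring match is deliberately crude. In practice you would probably map queries to pages by URL or topic cluster, but the filtering idea stays the same.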

Strategic content structure

Once you know what questions to answer, the structure becomes critical:

- Use logical heading hierarchies with proper H1, H2, H3 structure that makes your content easy to parse for humans and machines. For example, your main topic might be an H1 (“Content Governance Guide”), with major sections as H2s (“Setting Up Content Governance,” “Measuring Content Governance Success”), and subsections as H3s.

- Frame key sections as questions in headings and provide clear, concise answers in the first paragraph following each heading. For example, instead of a heading like “Implementation Process,” use “How Do You Implement Content Governance?” followed by a direct answer in the next paragraph.

- Add a mini table of contents at the beginning of longer articles with anchor links to each section. This improves navigation and helps users and crawlers understand your content’s structure at a glance. WRITER’s blog posts typically include this feature for articles over 1,500 words.

- Include an FAQ section at the end that addresses additional questions not covered in the main content. Mark this section up with schema.org FAQPage structured data so search engines and AI tools can parse each question and answer pair.

- Ensure proper semantic HTML throughout your content, using appropriate tags like <strong>, <em>, <ul>, and <ol> rather than just visual formatting. This helps machines understand the relative importance of different content elements.

This structured approach makes it easier for search engines to extract key information, for answer engines to pull direct responses, and for generative AI to synthesize accurate answers from your content — essentially making your content “machine-friendly” without sacrificing human readability.
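The heading and table-of-contents advice above can be sketched in a few lines. This example assumes you already have your headings as (level, text) pairs; the function names, the slug format, and the sample H3 are hypothetical and only meant to illustrate the idea.

```python
import re
from html import escape


def slugify(text: str) -> str:
    """Turn a heading into a URL-safe anchor id."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def check_hierarchy(headings):
    """Report a missing or repeated H1 and any skipped heading levels."""
    problems = []
    h1_count = sum(1 for level, _ in headings if level == 1)
    if h1_count != 1:
        problems.append(f"expected exactly one H1, found {h1_count}")
    previous = 0  # 0 means "before the first heading"
    for level, text in headings:
        if level > previous + 1:
            problems.append(f"'{text}' jumps from H{previous} to H{level}")
        previous = level
    return problems


def mini_toc(headings):
    """Build an HTML list of anchor links, one per H2."""
    items = [
        f'  <li><a href="#{slugify(text)}">{escape(text)}</a></li>'
        for level, text in headings
        if level == 2
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


headings = [
    (1, "Content Governance Guide"),
    (2, "How Do You Implement Content Governance?"),
    (3, "Who Owns the Style Guide?"),  # hypothetical subsection
    (2, "Measuring Content Governance Success"),
]

print(check_hierarchy(headings))  # [] means no problems found
print(mini_toc(headings))
```

Each H2 in the page would then carry the matching `id` (for example `id="measuring-content-governance-success"`) so the anchor links resolve.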

Technical considerations you can’t ignore

Even the best content needs solid technical foundations to perform well across all three optimization areas. Let’s dive deeper into the technical aspects you should address:

Structured data implementation

Structured data is like giving search engines and AI systems a roadmap to your content. It helps machines understand exactly what they’re looking at:

- Article markup: This tells search engines and AI systems that your content is an article, along with details like headline, author, date published, and featured image. It can improve the chances of your content appearing in rich results and being cited correctly by generative AI.

- FAQ schema: This explicitly highlights questions and answers in your content. Google now limits FAQ rich results mostly to well-known government and health sites, but the markup still gives search engines and AI systems an explicit question-and-answer structure that makes direct answers easier to extract.

- Organization markup: This establishes your entity and authority by connecting your content to your brand. It helps systems understand who created the content and builds trust signals when linked to your brand’s overall digital footprint.
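As a rough illustration of the three markup types, here is a sketch that builds schema.org JSON-LD for an Organization, an Article, and an FAQPage, then wraps each in a script tag for the page head. The helper names and every sample value (names, URLs, dates) are placeholders we made up; the property names are standard schema.org vocabulary, but check the current search engine documentation for which fields are required.

```python
import json


def organization_node(name, url, same_as=()):
    """Organization markup: who stands behind the content."""
    return {"@type": "Organization", "name": name, "url": url, "sameAs": list(same_as)}


def article_node(headline, author_name, date_published, image_url, publisher):
    """Article markup: headline, author, publication date, featured image."""
    return {
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "image": image_url,
        "publisher": publisher,
    }


def faq_node(pairs):
    """FAQPage markup: one Question/Answer entry per (question, answer) pair."""
    return {
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(node):
    """Serialize a node as a JSON-LD script tag."""
    payload = json.dumps({"@context": "https://schema.org", **node}, indent=2)
    # Keep a stray "</script>" inside a string from closing the tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
org = organization_node("Example Co", "https://www.example.com",
                        ["https://www.linkedin.com/company/example"])
article = article_node("How to create a corporate AI policy", "Jane Doe",
                       "2025-01-15", "https://www.example.com/img/hero.png", org)
faq = faq_node([("What should an AI policy include?",
                 "Scope, approved tools, data handling rules, and review ownership.")])

for node in (org, article, faq):
    print(script_tag(node))
```

Generating the markup from the same data that renders the page helps keep the visible content and the structured data in sync.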

Crawler access optimization

Both traditional search engines and newer AI systems need to crawl your content efficiently:

- Review your robots.txt file to ensure it allows legitimate AI crawlers while blocking problematic bots. As more AI companies develop crawlers, staying on top of which ones to allow becomes increasingly important.

- Consider implementing llms.txt — a recently proposed standard that helps AI systems better understand your website content by providing a markdown-formatted guide in your site’s root directory. While still gaining adoption, this emerging standard could improve how your content is represented in AI tools.

- Minimize reliance on JavaScript for critical content. Some AI systems struggle with JavaScript-rendered content, potentially missing important information only visible after JS execution.

- Implement proper HTTP status codes so crawlers know when content has moved permanently (301), moved temporarily (302), or no longer exists (404/410). Accurate codes improve crawl efficiency and keep stale URLs out of indexes.
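One way to audit the robots.txt point above is to test your rules against the user-agent tokens that AI crawlers announce. This sketch uses Python's standard `urllib.robotparser`; the sample rules, the `BadBot` name, and the example URLs are invented for illustration. The tokens `GPTBot`, `ClaudeBot`, and `PerplexityBot` are the ones those vendors document at the time of writing, so verify them against each vendor's current documentation.

```python
from urllib.robotparser import RobotFileParser

# Crawler tokens to audit; treat this list as an assumption and keep it updated.
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot")

# Sample rules for illustration. "BadBot" is a made-up name.
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Allow: /blog/
Disallow: /

User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def crawler_access(robots_txt, url, agents=AI_CRAWLERS):
    """Return {agent: allowed?} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


print(crawler_access(SAMPLE_ROBOTS, "https://www.example.com/blog/post"))
# {'GPTBot': True, 'ClaudeBot': True, 'PerplexityBot': True}
print(crawler_access(SAMPLE_ROBOTS, "https://www.example.com/pricing"))
# {'GPTBot': False, 'ClaudeBot': True, 'PerplexityBot': True}
```

Python's parser applies the first matching rule in a group, while some crawlers apply the most specific one. Listing `Allow` before the broader `Disallow`, as in the sample, gives the same answer under both interpretations.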

Speed and performance optimization

Performance impacts both user experience and crawler efficiency:

- Optimize Core Web Vitals, including Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay as a Core Web Vital in March 2024), and Cumulative Layout Shift (CLS), to ensure fast loading, responsive, and stable pages.

- Enable efficient caching through proper HTTP headers and server configuration to reduce load times for repeat visitors and crawlers.

- Compress and optimize media assets, including images, videos, and other rich media, to reduce page weight while maintaining quality.
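To turn the Core Web Vitals bullet into something you can check, here is a small sketch that rates measurements against Google's published thresholds. It uses Interaction to Next Paint (INP) for responsiveness, since INP replaced First Input Delay as a Core Web Vital in March 2024. The function names and sample measurements are hypothetical; real values would come from field data or a lab tool.

```python
# (good, poor) boundaries per metric, from Google's published thresholds.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric, value):
    """Classify one measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def rate_page(measurements):
    """Rate every metric in a {metric: value} mapping."""
    return {metric: rate(metric, value) for metric, value in measurements.items()}


# Made-up measurements for one page.
print(rate_page({"LCP": 2.1, "INP": 340, "CLS": 0.31}))
# {'LCP': 'good', 'INP': 'needs improvement', 'CLS': 'poor'}
```

Google assesses these at the 75th percentile of page loads, so feed in p75 values rather than averages when you have field data.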

When you properly implement these technical elements, they ensure your carefully crafted content gets seen, understood, and used by all the systems you’re targeting — from traditional search engines to the newest generative AI tools.

Real results: How this approach works

At WRITER, we’ve seen firsthand how this integrated approach delivers measurable results. Let’s walk through a real example:

One of our recent blog posts on “corporate AI policy” was deliberately structured with the triple optimization approach in mind:

- We started with question-based research, identifying common queries like “How to create a corporate AI policy” and “What should an AI policy include.”

- We structured the article with clear question-based H2 headings, followed by direct answers in the first paragraph.

- We included a mini table of contents at the top with anchor links to each section.

- We added proper schema markup, including Article and FAQ schema.

- We demonstrated E-E-A-T through author credentials, citing industry research, and including real-world examples.

- We included an FAQ section addressing related questions that didn’t fit in the main narrative.

The results speak for themselves:

- The post ranks on the first page for over 25 different question-based keywords related to corporate AI policies.

- It often appears in Google’s AI Overviews when users ask questions about developing AI governance.

- Multiple generative AI tools now cite our article when answering questions about AI policy development.

- The post has become one of our highest-converting blog articles, generating quality leads for our enterprise solution.

This triple exposure across traditional search, answer engines, and generative AI has not only increased our traffic but dramatically expanded our visibility at different stages of the buyer journey. People discover our content whether they’re using a traditional Google search, skimming AI Overviews, or asking questions to AI assistants.

Putting it all together

The most important takeaway is this: you don’t need three separate content strategies. The principles that make content perform well are largely the same across all platforms, with some strategic adjustments:

- Answer real questions: conduct keyword research on the questions your audience has about your topics. Tools like AnswerThePublic can help you list questions for the topics you’re targeting.

- Structure content logically: use logical heading structures, ask questions in headings, and answer them in the copy. For longer content, consider adding a mini table of contents at the top that links to each heading.

- Demonstrate expertise and trustworthiness: emphasize the E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) framework in everything you publish, as this is crucial for all types of content optimization.

- Ensure technical excellence: use structured data to give crawlers context (such as Article markup, FAQ schema, and Organization markup), ensure AI crawlers can access your content, and optimize for speed and crawler efficiency.

In summary, the principles we already follow for SEO content creation also set us up for success in answer engines and generative engines. A few small adjustments improve performance there and benefit SEO at the same time, as long as the technical foundations aren’t overlooked.

How WRITER can help

Need help implementing these triple-optimization strategies? This is where WRITER’s end-to-end AI platform truly shines. WRITER helps marketing teams transform their content approaches while maintaining brand consistency and quality at scale.

WRITER’s AI platform for enterprise marketing teams

WRITER offers specialized AI solutions that directly address the content optimization challenges we’ve discussed:

- Content creation and optimization: WRITER’s AI agents help you generate content structured with the question-based approach needed for AEO, GEO, and SEO success. They enable you to create content formatted with proper headings, answers, and FAQ sections, requiring no extra effort.

- Brand governance: Ensure all content follows your E-E-A-T principles with WRITER’s brand governance capabilities. Maintain voice, terminology, and style consistency across all content channels, improving your authoritativeness and trustworthiness signals.

- Content transformation: Take existing high-performing content and easily repurpose it for new channels or audiences while maintaining the structured approach that performs well across all platforms.

- Technical implementation: WRITER integrates with your existing martech stack, making it easier to implement the technical aspects we discussed, such as structured data and optimized publishing processes.

Real-world success: Sprout Social’s SEO transformation

Sprout Social, a leader in social media management software, provides a compelling example of WRITER’s impact on SEO workflows. Their marketing team faced a common challenge: the in-house SEO team spent up to eight hours crafting detailed briefs for freelancers. Even then, initial drafts required substantial editing to meet standards.

After implementing WRITER, Sprout Social transformed their approach:

- They created custom AI agents in WRITER’s AI Studio to expedite keyword research and automatically generate detailed SEO briefs.

- They embedded style guides to simplify the review process for freelance content.

- The result? A 68% reduction in production time for SEO content and a dramatic shift in team focus from tedious tasks to strategic work.

In one particularly impressive case, when a competitor went out of business, Sprout Social used WRITER to quickly create high-volume SEO content focused on competitor alternatives. Their content went from not ranking at all to securing top-three positions for relevant searches — an opportunity they couldn’t have capitalized on without WRITER’s platform.

The WRITER advantage

Unlike generic AI tools, WRITER is specifically built for enterprise needs with AI agents that understand marketing requirements and help elevate everything from content creation to optimization. WRITER’s platform is designed for collaboration between technical and marketing teams, helping to close the gap that often exists when implementing new content strategies.

By partnering with WRITER, you’re not just getting an AI tool — you’re getting an end-to-end solution that transforms your content creation processes and ensures everything you publish is optimized for today’s complex digital landscape.

Ready to optimize your content for SEO, AEO, and GEO simultaneously? Let’s talk about how WRITER can help you build a content strategy ready for the future — not just the present.

Try our Blog post outline agent for free or request a demo to see how WRITER can transform your content optimization.