From chaos to control: why enterprises need a new framework for trusted LLMs

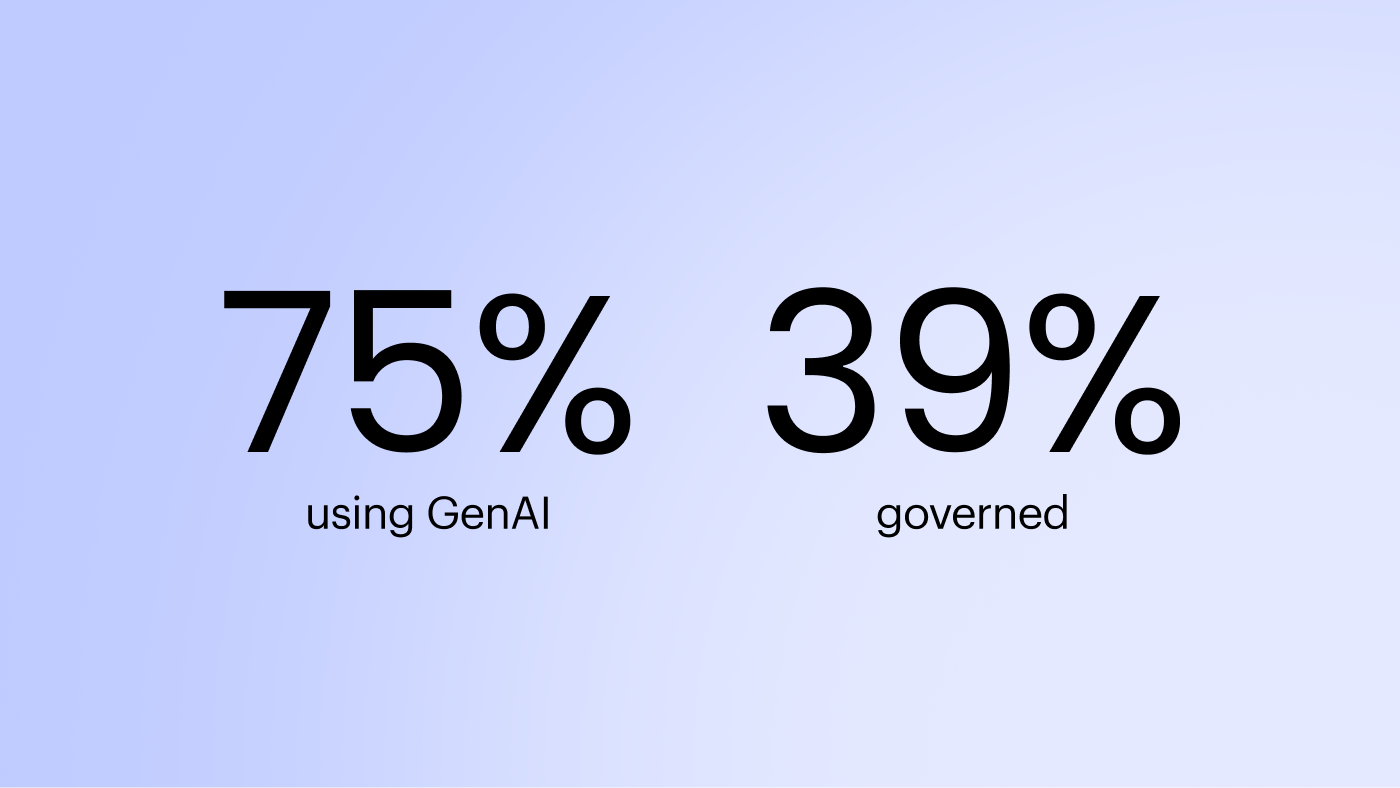

Across the front office, generative AI is already being adopted at scale. Teams are experimenting, deploying, and embedding AI into daily workflows faster than most organizations can govern it. Today, 75% of knowledge workers use GenAI at work, but only about a third of organizations have formal AI governance in place.

For CIOs, this creates a familiar and uncomfortable reality: AI enthusiasm is accelerating faster than enterprise readiness.

That’s why HFS Research’s new paper, From Chaos to Control: An Enterprise Framework for Safe and Productive Use of LLMs, is required reading for anyone building or deploying AI in the enterprise. It reframes the conversation away from hype and benchmarks to focus on the question that actually matters at scale: What does it take to run AI safely, predictably, and accountably across the business?

HFS introduces eight pillars that define what enterprise-grade LLMs and providers must deliver, covering areas such as transparency, sovereignty, governance, observability, cost control, and accountability. The core insight is clear: enterprise value increasingly comes from how AI is delivered and governed by providers in production, not from the raw IQ of the underlying model alone.

As front-of-office teams push forward, enterprises need more than powerful LLMs. They need providers and platforms that respect data boundaries, integrate cleanly with existing systems, and enforce consistent behavior over time. They need visibility into how agents make decisions — not just the outputs they generate. And they need a model where IT can maintain oversight even as business teams accelerate adoption.

HFS highlights that, in addition to a strong model provider, enterprises need an agent platform that acts as a control layer between fast-moving models and mission-critical workflows. Cloud providers help stabilize access to models, but enterprises still need structure around how agents are built, deployed, monitored, and governed. That structure, spanning both LLMs and the providers that operate them, is now essential infrastructure.

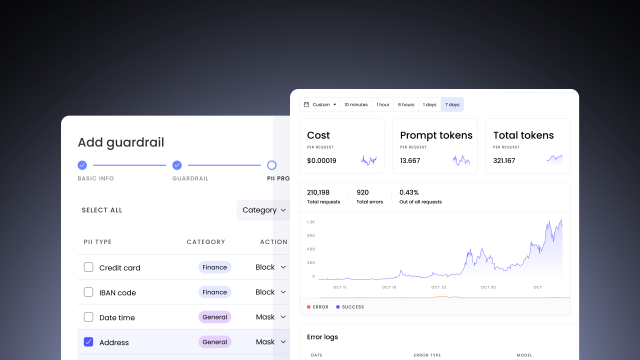

WRITER has been building toward this moment. Our newly introduced agent supervision suite delivers the governance foundation the report calls for — providing centralized visibility into usage and cost, global guardrails for sensitive data, runtime permissions and approvals, and deep integrations with your existing observability and security platforms. It also ensures you can rely on trusted models through providers like Amazon Bedrock, including WRITER’s own Palmyra X5, which was recently recognized as a leader in Stanford’s Foundation Model Transparency Index.

Together, this gives CIOs what they actually need to operationalize agentic AI: enterprise-grade LLMs, enterprise-grade providers, and a governance layer that makes both safe to scale.

The takeaway from HFS is straightforward: enterprises must stop fixating on leaderboard performance and start standardizing on platforms that deliver accountability, stability, and trust. In other words, AI only becomes enterprise-grade when both the LLM and the provider are governable.

WRITER is built for that governable future — combining enterprise-grade LLMs, trusted providers, and a secure agent platform that helps organizations move from AI experimentation to real, durable impact.