Scientific credibility is irreplaceable. Are your medical writing systems protecting it?

Scaling accuracy and reliability in content infrastructure with regulatory-grade AI

For medical affairs teams, scientific credibility isn’t just a metric — it’s currency. It underpins every regulatory submission, data discussion, and field engagement, building trust with HCPs, regulators, and patients alike. That credibility depends on accurate, reliable writing. But with more launches in narrower indications, tighter regulator and journal scrutiny, and stretched writing and review capacity, credibility can slip, not because the science is weak, but because the system can’t keep up.

That disconnect puts companies’ reputations on the line. If credibility, once lost, is hard to recover, how do medical affairs teams build regulatory-grade systems that keep pace with science?

The evergreen burden of scientific content

Medical writing is a continuous operational engine. Unlike commercial campaigns or launch-driven materials, medical content is evergreen, with new information driving a steady flow of deliverables. PubMed now indexes more than 1.56 million new citations a year, and scientific content is thought to be growing at roughly 5–6% annually. Each new publication is a potential trigger for new reviews, updates, or field materials, including:

- Affiliate and localization variants

- Claims-to-deck transformations

- Congress and symposium materials

- Payer Q&A and evidence summaries

- Fieldforce FAQs and medical response variants

Teams must get every asset right: accurate, consistent, and approved across all regions. And they must deliver it not just once, but every congress cycle, every new data cut, and every affiliate request. At the same time, post-approval regulatory requirements are expanding. One global survey found that post-approval changes can take 3–5 years to complete due to divergent documentation and review processes. Multi-year variations increase the chance that outdated or inconsistent content circulates regionally, which is a credibility and compliance exposure, not just a time cost.

Despite mounting challenges, many teams still rely on manual workflows, siloed tools, and outsourced execution. Scientific experts become buried in formatting decks, rewriting FAQs, and chasing approval loops, leaving less time for providing clinical insight or interpreting new data, and fueling burnout.

These aren’t just productivity bottlenecks — they’re points of failure that compromise consistency, delay responses, and increase regulatory risk. Over time, they create cracks where errors and inconsistencies creep in, threatening credibility.

The bottleneck isn’t writing — it’s the workflow

Content generation speed alone won’t solve the problem. In fact, it can make it worse if the outputs aren’t compliant, reusable, or verifiable. The real challenge isn’t content generation — it’s how content moves between teams, formats, stakeholders, and systems.

Most medical writing processes are fragmented, opaque, and hard to scale. Even as teams adopt generative AI tools to speed up content creation, the core infrastructure often remains unchanged. To address the disconnect between the growing complexity of medical content and the outdated systems used to manage its flow, teams need to invest in orchestration. Regulatory-grade orchestration tools that overlay existing systems and ways of working can close this gap without the need for reorganization.

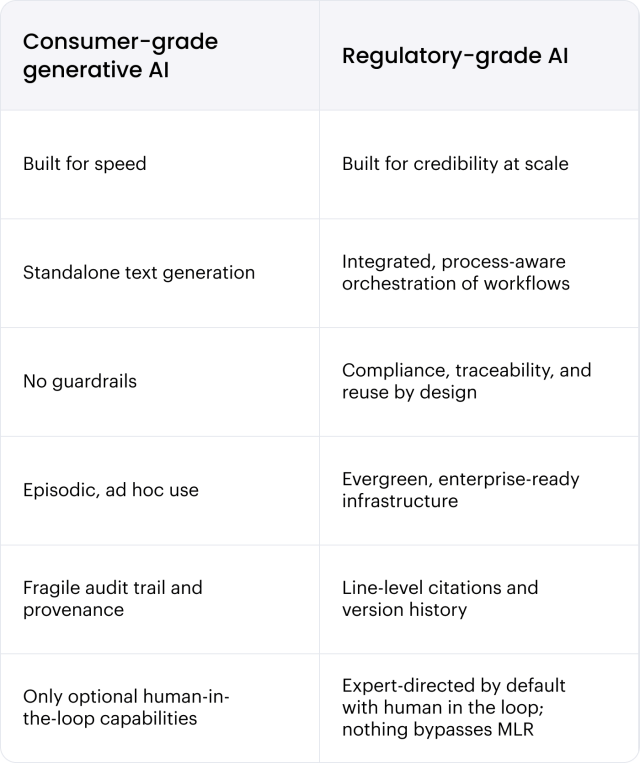

Why consumer-grade generative AI falls short — and what regulatory-grade AI really looks like

Generative AI is reshaping how content creation happens across industries, but most tools don’t work for the regulated, collaborative, high-stakes environment of medical writing. Consumer-grade generative AI tools are fast, but fragile. They’re excellent at producing raw text quickly, but lack the guardrails required to support scientific accuracy, reuse, and compliance.

In medical writing, credibility isn’t just about polished prose — it’s about proof. Every claim needs a clear line back to its source. Without that citation integrity and audit trail, even the most well-written draft is likely to stall in medical, legal, and regulatory affairs review (MLR) or peer evaluation. And rather than reducing workload, these gaps often create more work downstream, with longer review cycles and repeated rework.

The risks extend beyond internal workflows. Many scientific journals still hesitate to accept AI-generated content when transparency and authorship aren’t clear. While attitudes are beginning to shift toward human-in-the-loop approaches, relying on consumer-grade generative AI today can raise red flags during publication and peer review — exactly where credibility matters most.

In medical affairs, regulatory-grade AI isn’t a single tool. It’s an integrated framework that orchestrates workflows end-to-end by combining:

- Generative AI to accelerate draft generation without losing oversight

- Agentic AI to orchestrate workflows so content is consistent and traceable

- Autonomous agents to execute high-volume, repeatable tasks reliably

How to tell if your AI is regulatory-grade

When generative AI is just one layer of a broader, enterprise-ready solution — rather than a shortcut or siloed tool — you can combine automation with the governance, orchestration, and human expertise needed to safeguard accuracy, auditability, and compliance.

Regulatory-grade AI is a framework that combines generative AI, agentic AI, and autonomous agents with governance, auditability, and jurisdictional rule enforcement, so that scientific content is defensible, reusable, and compliant at scale.

Harnessing expert insight for compliant scaling

For medical writing, a human-in-the-loop approach is non-negotiable. But what if your company could integrate its expertise beyond reviews and approvals? Without orchestration, organizations risk wasting scarce expertise on formatting and rework, draining energy from the people whose judgment is hardest to replace, while fueling burnout and retention risks.

Agentic AI changes this dynamic. It orchestrates the structured, repeatable workflows that slow teams down while embedding scientific expertise, compliance requirements, and business priorities directly into the process. Expert-in-the-loop isn’t a concession; it’s a feature: claims remain under expert control, jurisdictional rules get codified, and no output skips MLR.

“WRITER frees up a lot of time to do higher-order thinking and more strategic work.”

By shifting attention back to high-value work, experts can focus on data interpretation, clinical insights, and strategic review. Human insight doesn’t get diluted by automation — it’s amplified, enabling teams to scale output without losing the rigor and credibility their science demands.

The true ROI: credibility at scale

Talk of the financial and time savings from generative AI is pervasive across industries. But in a highly regulated field where content quality is key, medical affairs teams cannot evaluate AI adoption purely on writing speed.

The real business case for AI is based on:

- Redistributing expert capacity: Experts spend more time on insights, less on execution

- Reducing agency spend and rebriefs: Lower time and financial costs from agency content generation

- Maturing processes: Better reuse, faster reviews, and more precise traceability

- Increasing delivery capacity and velocity: More outputs, without increasing agency spend or headcount

- Building compliance resilience: Every deliverable is audit-ready by design

“With the same number of people, the same amount of hours in the day, and the same amount of activities and tasks on our plate, we’re able to produce that much more content.”

Most organizations won’t leap to fully autonomous systems immediately. A crawl-walk-run path, starting with assistive agents, then orchestration, then multi-agent systems, ensures AI adoption grows sustainably with governance at each stage. All of this supports one outcome: credibility that scales across regions, formats, and functions — without burning out the teams behind it.

Where to start: your first medical writing orchestration use case

To protect your credibility, you don’t need a full-scale transformation or even to rip and replace legacy systems. Orchestration is modular. You can — and should — start small, with one high-friction, high-value workflow. Look for the processes your team repeats most often, where experts are stretched too thin, or where compliance flags create avoidable rework. Every step adds governance and auditability. Together, they compound into a regulatory-grade content operating system — scaling credibility without compromising scientific integrity.

As you explore pilots for your use case, ensure that you have clear expectations for what regulatory-grade AI should look like:

- Every claim should be verifiable: Credibility requires transparent, traceable sources that reviewers can trust

- Experts should stay in charge: Human oversight must be built in by default, with nothing moving past MLR unchecked

- Content should be built to scale: Outputs need to travel across affiliates and regions without constant rework
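For teams scoping a pilot, the first two expectations can be pictured as a simple release gate. The sketch below is illustrative only and does not describe any vendor's implementation. The names `Claim`, `Deliverable`, and `release_blockers`, and the reference IDs, are hypothetical. It assumes each claim carries a list of source identifiers and that MLR approval is a flag set by a human reviewer.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    # Hypothetical structure: one scientific claim and the references backing it.
    text: str
    source_ids: list[str] = field(default_factory=list)


@dataclass
class Deliverable:
    # Hypothetical structure: a content asset awaiting release.
    title: str
    claims: list[Claim]
    mlr_approved: bool = False  # assumed to be set only by a human MLR reviewer


def release_blockers(doc: Deliverable, known_sources: set[str]) -> list[str]:
    """Return the reasons a deliverable cannot be released (empty list means releasable)."""
    blockers = []
    for claim in doc.claims:
        # Every claim needs at least one traceable source.
        if not claim.source_ids:
            blockers.append(f"Unsourced claim: {claim.text!r}")
        # Every cited source must exist in the approved reference library.
        for source_id in claim.source_ids:
            if source_id not in known_sources:
                blockers.append(f"Unknown source {source_id!r} for claim: {claim.text!r}")
    # Nothing moves past MLR unchecked.
    if not doc.mlr_approved:
        blockers.append("MLR approval missing")
    return blockers


# Usage: one sourced claim, one unsourced claim, no MLR sign-off yet.
draft = Deliverable(
    title="Fieldforce FAQ",
    claims=[
        Claim("Primary endpoint was met", ["REF-1"]),
        Claim("Treatment was well tolerated"),
    ],
)
for reason in release_blockers(draft, known_sources={"REF-1"}):
    print(reason)
```

Returning every blocker, rather than stopping at the first, gives reviewers the full list of gaps in one pass, which is one way to cut repeated review loops.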

Discover how WRITER can help your team close the credibility gap with a regulatory-grade approach.
