
The importance of AI ethics in business: a beginner’s guide

When Socrates spent a lifetime defining his code of ethics, he could never have dreamt that it would expand into a field that applies to a non-human intelligence.

Yet here we are.

Artificial Intelligence (AI) is an increasingly important part of modern business operations. But we still haven’t cracked the code on what that means in terms of good practices and ethics.

Businesses should understand the implications of using AI from a legal and ethical standpoint to ensure their applications are compliant and responsible.

- AI ethics is the framework that ensures AI outcomes follow a set of ethical practices and guidelines.

- Primary AI ethics concerns include privacy, data bias, discrimination, accountability, and responsibility.

- AI ethics is important to protect vulnerable populations, address privacy concerns, reduce legal risks, improve public perception, and gain a competitive edge.

- Establishing AI ethics in a company requires understanding the legal and ethical implications, establishing principles and guidelines, setting up governance structures, and training employees.

- Choosing AI tools that help you follow ethical AI guidelines is also important. One example is Palmyra LLMs, a trio of generative AI models that are SOC 2 Type II, PCI, and HIPAA certified.

What are AI ethics?

AI ethics is the framework that ensures AI outcomes follow a set of ethical practices and guidelines.

Companies must stay on top of the latest developments in AI ethics to ensure that their decisions comply with existing laws and that they remain mindful of the ethical implications of their actions.

Doing so will help organizations maximize the potential value offered by artificial intelligence technology, while minimizing any potential negative impacts it could have on society.

Primary AI ethics concerns

With AI growing so rapidly, a few primary concerns affect most platforms. It’s important to consider the risks when using any AI tool.

Privacy concerns

AI privacy ethics tends to be a discussion around data privacy, protection, and security. Companies need to make sure that data collected for AI purposes is kept secure, and that users are aware of risks associated with their data being collected.

One problem is that different jurisdictions take different approaches. For example, the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States each have their own nuances in data security guidelines and limits.

Take Italy’s ban on ChatGPT, for example. On March 31, 2023, Italy’s data regulators issued a temporary decision demanding that ChatGPT stop using personal information as training data. According to the regulators, the GDPR gives OpenAI no right to collect and use personal information without consent or another legal basis, even if that information is publicly available.

The privacy concern stems from the fact that ChatGPT doesn’t warn users that their data is collected, nor does OpenAI have a legal basis for collecting personal information to train ChatGPT. Beyond the unlawful collection of data, people have the right under EU and California privacy rules to request that their information be deleted. However, honoring such a request is tricky once the information has been used to train an AI system.

As a result, concerns about how personal data is collected and used to train AI models have spread across EU countries. Many studies have also documented privacy issues with AI. Any company implementing AI should therefore evaluate the tool's security and privacy features to remain compliant and keep data safe.

Data bias and discrimination consequences

AI outcomes are only as good as the data they’re fed, and if the data is biased or incomplete, it can lead to AI making incorrect or discriminatory assumptions.

Data bias affects vulnerable populations and has serious impacts on marginalized groups. For example, a health study found racial bias in a healthcare algorithm, which “falsely concludes that Black patients are healthier than equally sick White patients.”

Another study by Cornell found that when DALL-E 2 was prompted to generate a picture of people in positions of authority, it generated white men 97% of the time. This happens because models are trained on images and data from the internet, which can echo and amplify stereotypes around gender and race.

The problem is that data will never be unbiased. Computer scientist and ethics researcher Dr. Timnit Gebru makes it clear that “there is no such thing as neutral or unbiased data set.” Not all data and views will be represented, and some things, like fascism or Nazism, shouldn’t be treated neutrally. Instead, Gebru advises, “we need to make those biases and make those values that are encoded in it, clear.” That means helping users understand where data is collected and what biases might appear.

Companies must ensure that the data they are using to train is accurate, as unbiased as possible (or with a positive bias toward equitable inclusion and representation), and relevant to the task they are trying to achieve.
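To make "as unbiased as possible" a little more concrete, here is a minimal sketch of one simple data check. It compares each group's share of a training set against a reference share (for example, census figures) and flags groups that fall well short. The function name, the `group` field, the reference shares, and the 0.8 tolerance are all hypothetical choices for illustration. A check like this catches under-representation only, not every kind of bias.

```python
from collections import Counter


def representation_gaps(records, key, reference_shares, tolerance=0.8):
    """Flag groups whose share of the dataset is below `tolerance` times
    their reference share (e.g., a population share).

    Hypothetical helper: `key`, `reference_shares`, and the 0.8 tolerance
    are illustrative assumptions, not an established standard.
    Returns {group: (observed_share, reference_share)} for flagged groups.
    """
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        # Share of the dataset that belongs to this group (0.0 if no data).
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < tolerance * expected:
            gaps[group] = (round(observed, 3), expected)
    return gaps


# Hypothetical toy dataset: 8 records from group "A", 2 from group "B",
# compared against an assumed 50/50 reference population.
data = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
print(representation_gaps(data, "group", {"A": 0.5, "B": 0.5}))
# → {'B': (0.2, 0.5)}
```

Here group "B" makes up 20% of the data against an assumed 50% reference, so it is flagged for a closer look. A real review would choose reference shares that fit the task and population the model serves.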

Accountability and responsibility

AI accountability is murky and uncertain. As of today, there’s no specific regulatory body that holds businesses accountable for how they create and use AI in their organizations. In other words, there isn’t any legislation that specifically regulates AI practices.

We’re currently in the Wild West of AI regulation. Companies are solely responsible for creating policies around implementing AI. While some researchers try to bring light to ethical issues, responsibility remains decentralized.

Worries about regulation and public safety in AI development have led prominent figures like Elon Musk, along with AI experts, to sign an open letter calling for a temporary pause on developing systems more powerful than GPT-4.

Some organizations, like the US Chamber of Commerce, are currently attempting to create a system of accountability. The Chamber believes business will greatly impact the rollout of AI technology and argues that business leaders and policymakers must establish frameworks for responsible AI use.

The Chamber advocates that, rather than a general approach, AI regulation should be industry-specific and follow best practices. Of course, this has the drawback of leaving AI regulation in the hands of industry rather than under strict government regulation.

Other nations, like Italy, have taken the issue into their own hands and temporarily banned ChatGPT over concerns about data protection and privacy. Authoritarian countries like Russia, North Korea, and Syria have banned ChatGPT because they fear the US would use it to spread misinformation.

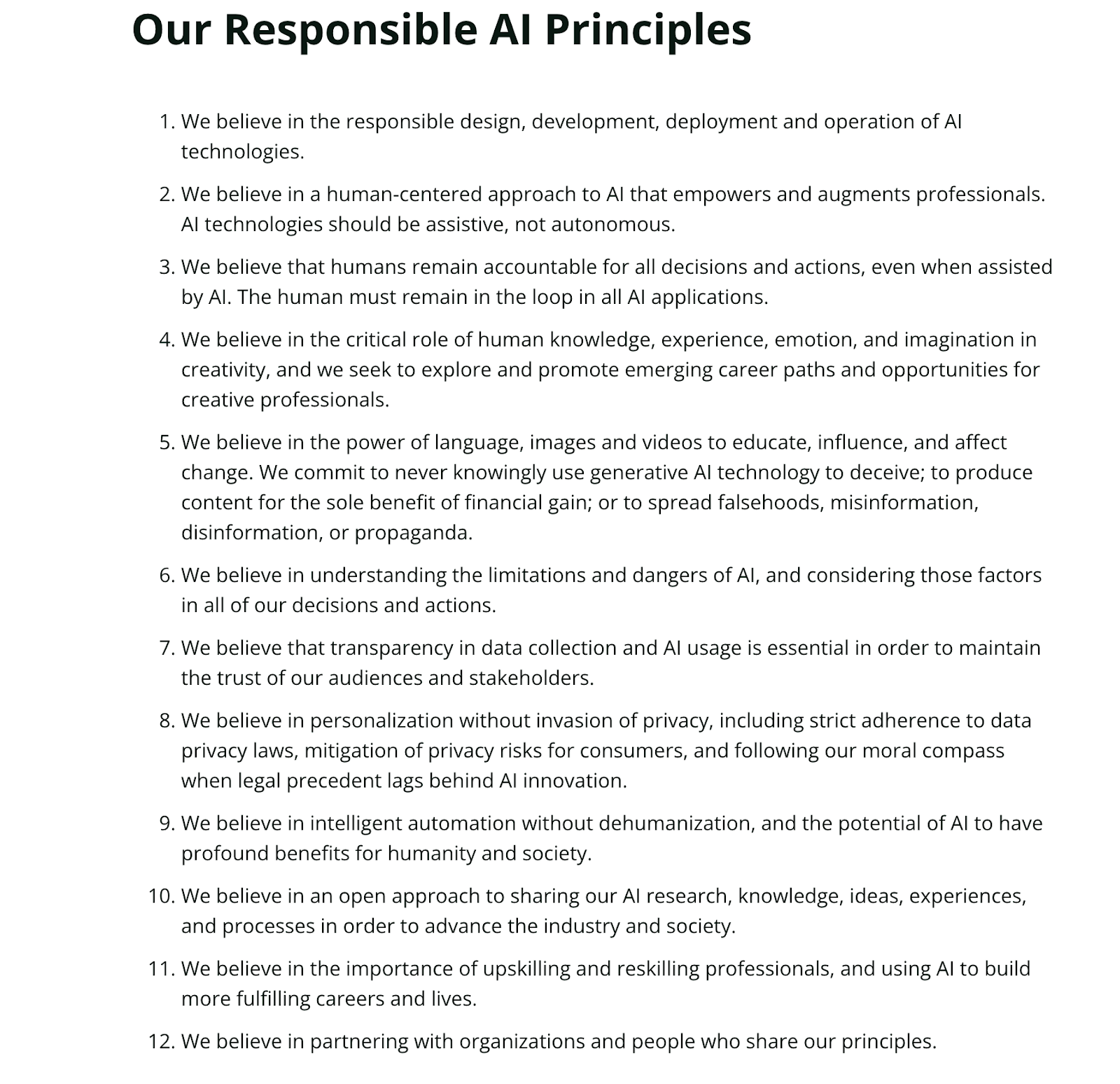

Some professional organizations, like the Marketing AI Institute, have been proactive and brought awareness to the responsible use of AI with an AI code of conduct.

Why is it important to establish AI ethics in your company?

AI ethics is essential for the responsible use of artificial intelligence. As AI increasingly integrates into companies, it’s important to understand how to ethically develop and deploy AI technology.

By establishing clear AI ethics, companies can:

- Protect vulnerable populations: AI ethics helps protect vulnerable populations from potential harm caused by AI applications.

- Address privacy concerns: By considering ethical issues such as privacy protection and data accuracy when designing their algorithms, companies can reassure customers that their data is handled responsibly and help ensure they make fair decisions based on accurate data.

- Reduce legal risks: Adopting AI ethics can also help businesses avoid costly legal and reputational risks associated with unethical AI usage. Companies have faced lawsuits due to their use of unethical algorithms, which have resulted in discriminatory outcomes or violated consumer rights.

- Improve public perception: Ethical principles can positively affect public perception of a company, because customers want to know their data is secure and algorithms are fair when engaging with organizations that employ AI technology.

- Gain a competitive edge: Adopting clear guidelines around AI ethics gives businesses an edge over those who don’t take these issues seriously. Demonstrating good practices around ethical development makes customers more likely to trust your organization with their data.

How to establish AI ethics in your company

Establishing AI ethics in your company is essential for ensuring the ethical use of AI technologies.

It requires understanding the legal and ethical implications of using AI, developing a set of principles to guide the use of AI, and establishing governance structures to ensure accountability with AI decisions.

Here are some steps that businesses can take to set up an effective system for governing their use of artificial intelligence:

- Understand the legal and ethical implications

Knowing the laws and ethics of using artificial intelligence is important for good practices. Businesses should know about relevant regulations, such as GDPR or HIPAA, and data privacy and security standards. They should also be aware of any potential biases in their data or algorithms.

- Establish principles and guidelines

Companies should have a set of principles and guidelines for how they use artificial intelligence technologies. These guidelines should cover data privacy, algorithmic fairness, transparency, accountability, and user autonomy. Having clear principles in place will help ensure that employees understand what’s expected from them when using AI tools within their workflows.

- Set up governance structures

A company’s governance structure should oversee all aspects of developing and deploying AI technologies to make sure AI decisions are accountable. You should have a central committee or review board to assess the potential risks of new developments or changes to existing systems. This committee should include subject matter experts who can judge whether models are working as expected. You should also have processes for collecting customer feedback, defined metrics for success, and regular reviews of algorithms for possible bias or accuracy issues.

- Train employees and review systems regularly

Training employees on how to use artificial intelligence technologies is key to minimizing risks. Have regular reviews to make sure there aren’t any unintended consequences caused by algorithmic decision-making processes. If problems are found, you can fix them quickly.
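A review board might turn "regularly review algorithms for possible bias" into a repeatable check by comparing outcome rates across groups. The minimal sketch below computes each group's rate of favorable decisions and that rate's ratio to the highest-rate group. It flags ratios under 0.8, a threshold borrowed from the "four-fifths" rule of thumb in US employment-selection guidance. The function name, the `(group, approved)` data shape, the loan scenario, and the threshold are assumptions for illustration. A single metric like this is a screening signal for human review, not proof that a model is fair or unfair.

```python
from collections import defaultdict


def disparate_impact(decisions, threshold=0.8):
    """Compare favorable-outcome rates across groups.

    Hypothetical helper. `decisions` is a list of (group, approved) pairs,
    where `approved` is True for a favorable outcome. Returns
    {group: (rate, ratio_to_best, flagged)}. The 0.8 default mirrors the
    "four-fifths" rule of thumb and is an assumption, not a legal test.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)

    # Favorable-outcome rate per group, and the highest rate observed.
    rates = {g: approvals[g] / totals[g] for g in totals}
    best = max(rates.values(), default=0.0)

    report = {}
    for g, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0
        report[g] = (round(rate, 3), round(ratio, 3), ratio < threshold)
    return report


# Hypothetical loan decisions: group X approved 6 of 10, group Y 3 of 10.
sample = [("X", i < 6) for i in range(10)] + [("Y", i < 3) for i in range(10)]
for group, (rate, ratio, flagged) in disparate_impact(sample).items():
    print(group, rate, ratio, "REVIEW" if flagged else "ok")
# X 0.6 1.0 ok
# Y 0.3 0.5 REVIEW
```

Running a check like this on a schedule, and logging the results for the committee, is one way to catch unintended consequences early so they can be fixed quickly.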

By establishing an effective system for governing their use of artificial intelligence tools and technologies, businesses can build trust with customers while gaining a competitive edge through more efficient operations enabled by intelligent automation.

Choose AI tools that’ll help you follow ethical AI guidelines

Ethics are all about the choices we make, the actions we take to follow through on those choices, and taking responsibility for any risks involved. Investing in an AI platform that’s designed with ethical and legal implications in mind, and that gives you as much transparency and confidence as possible, is a deeply responsible choice to make.

WRITER has been guiding large organizations like Vanguard toward the most responsible and ethical approach to AI adoption. Companies like Intuit and Victoria’s Secret use WRITER to help scale their environmental, social, and governance (ESG) and inclusion initiatives. WRITER’s platform is designed with corporate responsibility in mind, and was the first generative AI platform to be SOC 2 Type II, PCI, and HIPAA certified.

To learn more about paving the way for ethical AI at your organization, check out our leader’s guide to adopting AI.