Generative AI risks and countermeasures for businesses

It’s no secret that embracing new technology, like generative AI, can give your business a huge leg up on the competition – but it comes with risks. By getting in on the ground floor of emerging tech, you can quickly refine processes, get to know your customers better, and even create products your competitors haven’t had time to develop yet. That doesn’t mean you should rush into things without a plan. As with any new technology, there are hazards associated with AI adoption that you need to take into account. Let’s go over the biggest concerns about generative AI for business use and the safeguards you can put in place to protect your company, your brand, and your customers.

- Generative AI has the potential to give businesses a competitive edge, but it comes with risks

- Risks include “AI hallucinations”, data security/privacy, copyright law, and compliance

- Countermeasures include fact-checking, claim detection, curated datasets, security features, human creativity, and custom training

- Invest in an enterprise-grade AI solution with quality datasets, secure storage, and customizable features

- Teams need to understand the importance of brand and security compliance and get training on using the tools properly

Generative AI “hallucination” (aka “plausible BS”) risks

Generative AI tools are all the rage right now because they’re so good at sounding human, but this capability can be a double-edged sword. These models are designed to mimic and predict the patterns of human language, not to determine or check the accuracy of their output. That means generative AI can occasionally make stuff up in a way that sounds plausible, a behavior known as AI hallucination.

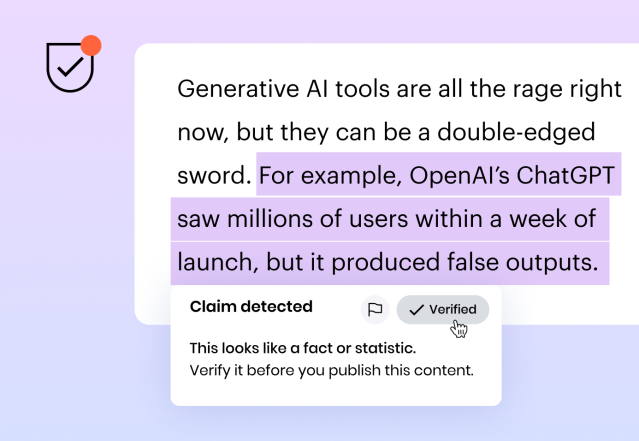

For example, OpenAI’s ChatGPT passed a million users within a week of launch, but it also alarmed the public and the press when it produced false outputs. OpenAI claims its latest large language model (LLM), GPT-4, reduces hallucinations, but it still isn’t 100% reliable.

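Google’s Bard chatbot showed us that unchecked generative AI can be a real danger. In its first public demo, it made a factual error about the James Webb Space Telescope, wrongly crediting it with the first picture of a planet outside our solar system (ouch!).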

This kind of risk makes companies understandably hesitant to invest in such tech, especially those in highly regulated industries like healthcare, law, or finance, where delivering accurate information is critical and, in some cases, a matter of life and death.

AI hallucination countermeasures: fact-checking, claim detection, and curated datasets

Here’s the thing: you can avoid these kinds of mishaps by doubling down on fact-checking and vetting the kind of data your AI tool is trained on.

One of the first rules in the age of AI is to fact-check all content before it goes to publication — human-written or AI-generated. And there are already AI-powered tools to help your fact-checkers scale up and speed up their work.

Writer’s claim detection tool flags statistics, facts, and quotes for human verification. Training Writer on a company’s content as a dataset and prompting it with live URLs can also help to produce accurate, hallucination-free output.
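To make the idea of claim detection concrete, here’s a minimal Python sketch of the general technique: scan a draft and flag sentences that contain a number or a quotation so a human can verify them. This is not how Writer’s tool works under the hood. The `flag_claims` function and its two regex heuristics are assumptions made purely for illustration, and a real tool would need far more sophisticated detection.

```python
import re

# Hypothetical heuristics for illustration only (not Writer's actual logic).
STATISTIC = re.compile(r"\d")               # any digit: figures, percentages, years
QUOTE = re.compile(r'["“][^"”]+["”]')       # text inside straight or curly quotes


def flag_claims(text):
    """Return (sentence, reasons) pairs that a human should verify."""
    # Naive sentence split: break on whitespace that follows ., ! or ?
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        reasons = []
        if STATISTIC.search(sentence):
            reasons.append("statistic")
        if QUOTE.search(sentence):
            reasons.append("quote")
        if reasons:
            flagged.append((sentence, reasons))
    return flagged


draft = (
    "Our platform is easy to use. "
    "It cut review time by 40% last year. "
    'The CEO called it "a game changer" in an interview.'
)

for sentence, reasons in flag_claims(draft):
    print(reasons, sentence)
```

The point of a sketch like this is that the software only flags content. The decision about whether a claim is true stays with your fact-checkers.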

It’s also worth considering working with an AI tech partner who uses a “clean”, curated dataset for pre-training their models. That way, you can avoid datasets obtained from a huge web crawl that may include “fake news” and other sources of misinformation.

Take Writer’s Palmyra LLMs as an example. They’re powered by a proprietary model trained on curated datasets with business writing and marketing in mind. So, if you’re looking for an AI designed specifically for use cases across the enterprise, Palmyra LLMs are your best bet.

Other risks for business use of generative AI

When it comes to using generative AI in business, there are plenty of concerns around data security/privacy, copyright law, and compliance.

Data security and privacy risks

Data security and privacy are among the biggest worries. Tools built on OpenAI LLMs may reserve the right to keep, access, and use your data. That means if someone at your company uses confidential or customer data in a prompt, it could end up stored on OpenAI’s servers. So, your IP can be at risk, and you might unintentionally break privacy laws. Not ideal.

Copyright concerns

The emergence of generative AI has thrown a wrench into our understanding of copyright and intellectual property.

First, there’s the question of using copyrighted material in LLM training datasets. In the US, scraping the public web for this purpose isn’t clearly illegal. But a lot of legal experts have raised concerns that existing laws may not be enough to protect creators whose material may have been used as training data.

Then there’s the matter of whether AI-generated content is protected under copyright law. Right now, the US Copyright Office says it depends on how much human creativity is involved. The most popular AI systems probably don’t create work that’s eligible for copyright in its unedited state. That could be bad news if your company plans to sell AI-generated material, including digital products created with AI-generated code.

Compliance issues

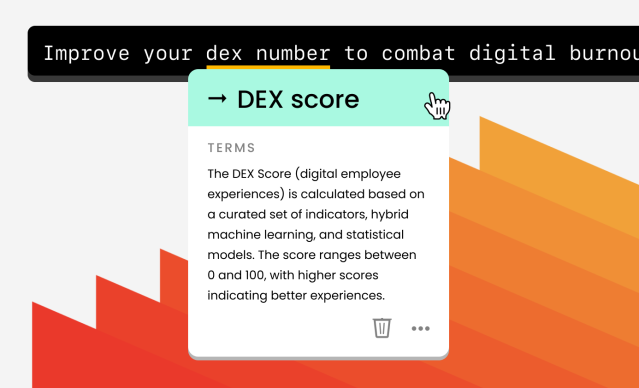

Finally, with the release of GPT-powered APIs, we’ve all got a virtual writer in our pocket — but one we can’t control. Companies can’t tweak general-use AI tools to match their brand, style, and terminology guidelines — which can lead to wonky and wrong output that doesn’t adhere to the brand or industry standards.

Say you’re trying to sell a product and a marketing team member uses ChatGPT to create a quick blurb about it. If the output includes a term like “eco-friendly”, which isn’t allowed under ESG (environmental, social, and governance) standards, you could be up the creek without a paddle in certain countries.

Countermeasures: security features, human creativity, and custom training

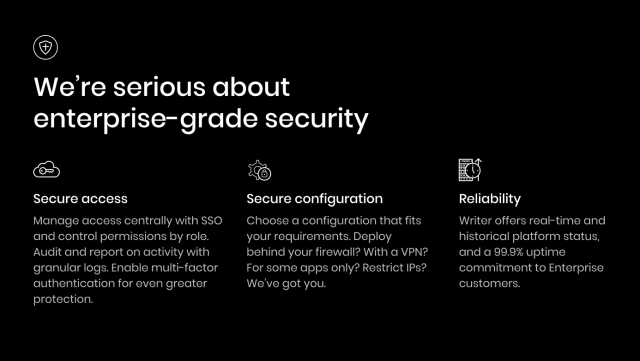

The keys to avoiding all of these risks? An enterprise-grade, customizable generative AI platform and a wealth of human creativity.

To ensure you’re keeping your data safe, you’ll want an AI platform that doesn’t store user data or content that’s submitted for analysis for any longer than is needed. Writer has you covered here, as we’ve been audited for several privacy and security standards, and have received major certifications, such as SOC 2, GDPR, CCPA, and HIPAA.

When it comes to copyright issues, keep in mind that AI-generated outputs are just a starting point for your content. The real magic happens when you put your own spin on it. To make sure you’ve got the perfect mix of human- and machine-generated content, use an AI-detection tool.

With the right generative AI platform and user training, you can avoid security and copyright risks, while at the same time ensuring brand unity. To get the most out of this tech, you’ll need to fine-tune your models for your specific use cases and train them with your own expertly crafted, human-generated content.

Writer takes brand governance even further with features like style guides and terms. Customers can create their own custom style guide that includes voice, writing style preferences, and approved terminology. Writer will then suggest corrections when users veer off-brand in their writing or use non-compliant language.
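To picture how a terminology rule might work in practice, here’s a minimal Python sketch of the general technique: check a draft against a list of restricted terms and return a suggestion for each hit. It is not Writer’s implementation. The `RESTRICTED_TERMS` entries and the `check_terms` function are hypothetical, and the “eco-friendly” entry simply borrows the example from earlier in this article.

```python
import re

# Hypothetical style-guide entries: restricted term -> guidance for the writer.
RESTRICTED_TERMS = {
    "eco-friendly": "Describe the specific, verifiable environmental benefit instead.",
    "best-in-class": "Replace with a claim you can back up with evidence.",
}


def check_terms(text, restricted=RESTRICTED_TERMS):
    """Return one finding per restricted term found in text, in reading order."""
    findings = []
    for term, suggestion in restricted.items():
        # Match the whole term only, ignoring case, so "economical" isn't flagged.
        pattern = re.compile(r"(?<!\w)" + re.escape(term) + r"(?!\w)", re.IGNORECASE)
        for match in pattern.finditer(text):
            findings.append(
                {"term": match.group(0), "start": match.start(), "suggestion": suggestion}
            )
    return sorted(findings, key=lambda finding: finding["start"])


blurb = "Our new bottle is Eco-Friendly and built to last."

for finding in check_terms(blurb):
    print(f'{finding["term"]!r} at position {finding["start"]}: {finding["suggestion"]}')
```

Keeping the rules in a plain dictionary means a brand or compliance team could update the list without touching the checking logic.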

Ivanti, a security automation platform that secures devices, infrastructure, and people across 40,000 enterprises, trusts Writer to accelerate its content production and align its brand.

“We’re a cybersecurity company and have a robust process for vetting the security of vendors,” Ashley Stryker, Ivanti’s senior content marketing manager, told us. “Writer really appealed to us, and it’s become even more important than we thought when we first bought the platform. We’re looking at enormous quantities of copy to be written in the first half of 2023, and I can’t tell you how grateful we are that we have Writer.”

Generative AI is only as risky as the platform you use — and the people who use it

Generative AI could totally transform content creation for businesses, but only if the necessary precautions are taken to mitigate the risks. When it comes to using AI for business, the platform you go with makes all the difference. Investing in a reliable, enterprise-grade AI solution with quality datasets, secure storage, and customizable features is the way to go.

Keep in mind, though, that no matter how fancy the technology is, it’s only as good as the person using it. Make sure that your team understands the importance of brand and security compliance and is trained to use the tools properly. When writers have mastered the tools available, they can work more efficiently and create content that meets all of your company’s needs.