Meet Palmyra Med, a powerful LLM designed for healthcare

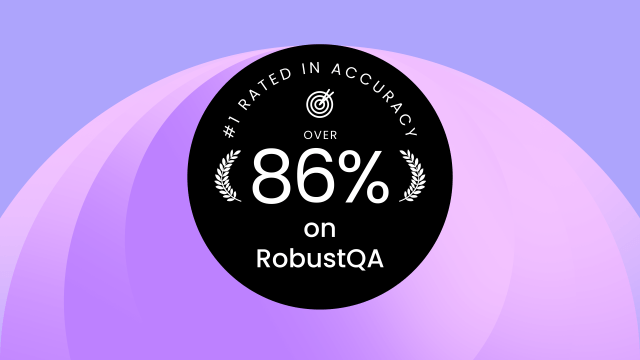

Palmyra Med is a model built by WRITER specifically to meet the needs of the healthcare industry. Today we’re thrilled to share that Palmyra Med earned top marks on PubMedQA, the leading benchmark for biomedical question answering, with an accuracy score of 81.1%, outperforming both the GPT-4 base model and a medically trained human test-taker.

Overcoming the limitations of base models

Base models have a breadth of knowledge, but when asked a domain-specific question, they tend to give generalized answers rather than follow user instructions and produce meaningful, relevant responses. This is especially challenging when AI is applied to industries like healthcare, where accuracy and precision are a matter of life and death. To meet the needs of this industry safely and responsibly, we need models that are trained on medical knowledge and equipped to handle complex, healthcare-specific tasks.

Palmyra Med takes top marks

To create Palmyra Med, we took our base model, Palmyra-40b, and applied a method called instruction fine-tuning. Through this process, we trained the model on curated medical datasets from two publicly available sources: PubMedQA and MedQA. The former includes a database of PubMed abstracts and a set of multiple-choice questions, and the latter consists of a large collection of text on clinical medicine and a set of US Medical Licensing Examination (USMLE)-style questions obtained from the National Medical Board Examination. Through the training process, we also enhanced the model’s ability to follow instructions and provide contextual responses instead of generalized answers.

The results speak for themselves. On PubMedQA, Palmyra Med attained an accuracy of 81.1%, beating a medically trained human test-taker’s score of 78.0%.

Palmyra LLMs are designed for the enterprise

From the beginning, our commitment has been to build generative AI technology that’s usable by customers from day one. And every decision we’ve made along the way has supported us in that mission. At 40 billion parameters, Palmyra Med is far smaller than PaLM 2, which is reported to have 340 billion parameters (about 8.5 times as many), and GPT-4, which is reported to have 1.76 trillion parameters (about 44 times as many).
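The size comparison above can be checked with some quick arithmetic. The sketch below uses only the parameter counts quoted in this post. The weight-memory estimate is an illustration, not a published figure: it assumes 16-bit weights (2 bytes per parameter) and ignores activations, caches, and any serving overhead. The function names are hypothetical and exist only for this example.

```python
# Reported parameter counts quoted in this post, in billions.
PARAMS_BILLIONS = {
    "Palmyra Med": 40,
    "PaLM 2": 340,   # reported, not officially confirmed
    "GPT-4": 1760,   # reported, not officially confirmed
}

# Assumption for illustration only: weights stored at 16-bit precision.
BYTES_PER_PARAM = 2


def size_ratio(model: str, baseline: str = "Palmyra Med") -> float:
    """How many times more parameters `model` has than `baseline`."""
    return PARAMS_BILLIONS[model] / PARAMS_BILLIONS[baseline]


def weight_memory_gb(model: str) -> float:
    """Rough memory for the weights alone, in GB (10^9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes.
    """
    return PARAMS_BILLIONS[model] * BYTES_PER_PARAM


if __name__ == "__main__":
    for name in PARAMS_BILLIONS:
        print(
            f"{name}: {size_ratio(name):.1f}x Palmyra Med, "
            f"~{weight_memory_gb(name):,.0f} GB of weights at 16-bit"
        )
```

Under these assumptions, the gap in raw weight storage follows the same ratios as the parameter counts, which is one way to see why hosting the largest models is harder for most enterprises.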

Supporting these massive models isn’t economically viable for most enterprises, given hardware and architecture limitations. In response to these scaling challenges, and in an attempt to make these models commercially viable, some have pursued model distillation, which has had negative effects on model performance. In a research paper titled “How is ChatGPT’s Behavior Changing over Time?”, authors from Stanford University and UC Berkeley show that the ability of GPT-4 and GPT-3.5 to answer the same questions changed drastically within a three-month period. Given the black-box nature of these models, it’s not possible to predict reliably how they’ll operate over time.

In contrast, Palmyra LLMs are efficient in size and powerful in capabilities, making them the scalable solution for enterprises. Despite being a fraction of the size of larger LLMs, Palmyra has a proven track record of delivering superior results, including top scores on Stanford HELM. And because we invested in our own models, we offer full transparency, giving you the ability to inspect our model code, data, and weights.

We’re deeply focused on building a secure, enterprise-grade AI platform. WRITER does not retain your data or use your data to train our models, and WRITER adheres to global privacy laws and security standards, such as GDPR, CCPA, Data Privacy Framework, HIPAA, SOC 2 Type II, and PCI. Our LLMs can be deployed as a fully managed platform or self-hosted, and unlike other services with waitlists and limited access, they’re generally available to all customers.

Paving the way for generative AI in healthcare

We’re excited for Palmyra Med to empower healthcare professionals to accelerate growth and increase productivity. In the last few months, we’ve already been in discussions with customers on creative and innovative use cases that leverage generative AI in the healthcare industry:

- Ensure patients use their medication safely by translating drug information, such as warning labels and interactions, into plain, jargon-free language.

- Reduce administrative overhead by turning doctor notes and summaries written in shorthand into easily understandable text.

- Generate useful insights from a sea of medical notes, tests, and documents.

- Shorten review times by automatically generating easy-to-follow narrative descriptions for medical decision letters.

- Uncover valuable medical insights from text and seamlessly combine them with data analytics.

If you’re interested in learning more about how we built Palmyra Med, read our white paper. Ready to experience LLMs built for healthcare needs? Schedule a demo with our sales team today.