Advancing AI for the enterprise

At Writer, we have one goal: to build scalable, reliable, and transparent AI technology for the enterprise.

Our approach

Our approach is different: we believe that building large language models (LLMs) informed by enterprise requirements leads to AI systems that are more reliable, more controllable, and more transparent. When you ground cutting-edge AI innovation in real-life needs, the result is solutions to problems people actually face.

Our team

Our globally distributed team of AI/ML researchers and engineers has a five-year track record of groundbreaking research and development across language models, retrieval systems, and evaluations.

Our results

Our results demonstrate that when AI research starts with real needs, it leads to:

- Prioritizing capabilities that map to tangible outcomes

- A balance between sophistication and practicality

- Better evaluation metrics to understand real-world performance

- Earlier identification of potential risks and failures

Research pillars

Enterprise-optimized models

Focus on developing more scalable, reliable, and transparent models specifically engineered for enterprise requirements

Practical evaluations

Development of model evaluation methodology that reflects real-world scenarios and risks

Domain-specific specialization

Research into applying AI systems in high-stakes industries

Retrieval & knowledge integration

Work on next-generation retrieval systems that safely and reliably connect language models with enterprise data

Towards Outcome-Oriented, Task-Agnostic Evaluation of AI Agents

Practical evaluations Nov 11, 2025Palmyra-mini: Small models, big throughput, powerful reasoning

Enterprise-optimized models Sep 19, 2025Reflect, retry, reward: Self-improving LLMs via reinforcement learning

Practical evaluations Jun 12, 2025Palmyra X5: The end of context constraints

Enterprise-optimized models Apr 28, 2025Expecting the unexpected: FailSafeQA Benchmark

Practical evaluations,Domain-specific specialization Feb 10, 2025

Palmyra Creative: Unlocking creativity with AI

Domain-specific specialization Dec 17, 2024Introducing Self-evolving models

Enterprise-optimized models Nov 20, 2024Palmyra X4: Introducing actions

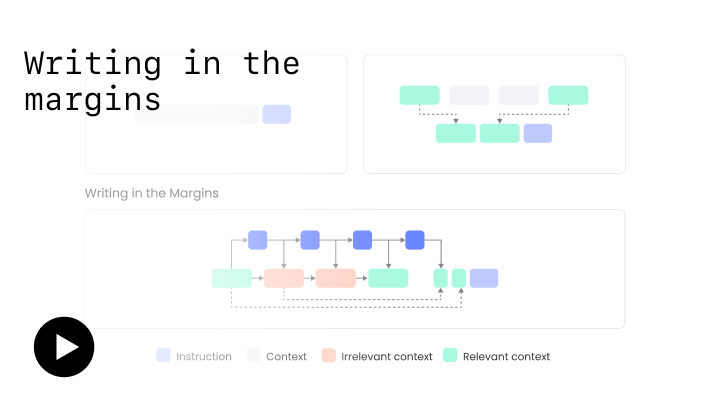

Enterprise-optimized models Oct 9, 2024Writing in the Margins

Enterprise-optimized models Aug 27, 2024Comparative analysis of retrieval systems in the real-world

Retrieval May 3, 2024OmniACT: A benchmark for enabling multimodal generalist autonomous agents

Practical evaluations Feb 27, 2024Fusion-in-decoder: achieving state-of-the-art open-domain QA performance

Enterprise-optimized models Sep 13, 2023Becoming self-instruct: Introducing early stopping criteria for minimal instruct tuning

Enterprise-optimized models Jul 5, 2023Palmyra Med: Instruction-based fine-tuning of LLMs enhancing medical domain performance

Domain-specific specialization Jul 3, 2023Palmyra Fin

Domain-specific specialization Jul 3, 2023Grammatical error correction: a survey of the state of the art

Enterprise-optimized models Apr 29, 2023