
Making the most of AI without compromising data privacy

You’ve probably read dozens of LinkedIn posts or articles about all the different ways AI tools can save you time and transform the way you work. We love it — and we’re excited, too. Right now AI is hotter than the molten core of a McDonald’s apple pie, but before you take a big bite, make sure you’re not gonna get burned.

Our recent survey revealed that 59% of companies have purchased or plan to purchase at least one generative AI tool this year, and we expect that number to keep growing. So whether you’re ready to embrace the AI revolution or not, it’s happening, and it’s happening real fast. And the impact? Oh, it’s going to be seismic.

As companies rush to adopt generative AI tools, the implications for data privacy are profound. Because these systems can process vast amounts of personal and proprietary information, concerns about data security and privacy breaches loom larger than ever.

But here’s the thing: it’s not as scary as it sounds. All it takes is equipping yourself with the proper knowledge and strategies to navigate this exciting new AI terrain while keeping your data and privacy intact.

And that’s precisely what we’re going to do in this article. We’ll fill you in on the current state of AI and data privacy and provide practical tips on harnessing AI’s power while safeguarding your company’s valuable data.

- ChatGPT is the most-used generative AI tool, but it is also the most banned, because it can include user data in its training set

- AI regulation differs vastly around the world, from the EU’s strict data protection laws to the US, where Congress has yet to pass any AI-specific legislation

- Measures to safeguard data and privacy while using AI: take stock of AI tools, assess use cases, learn about the security and privacy features of each AI tool, create an AI corporate policy, and train employees on data privacy

- Choose AI tools with robust security measures and privacy norms

- Palmyra LLMs from WRITER have top-tier security and privacy features and don’t store user data for training

The current state of AI and data privacy

The current state of AI and data privacy is complex and constantly evolving as technology and data collection practices continue to advance. We’ve summed things up as best we can and will keep this article updated as the AI data privacy landscape shifts. Here’s where we’re at right now.

ChatGPT is both the most-used and most-banned tool

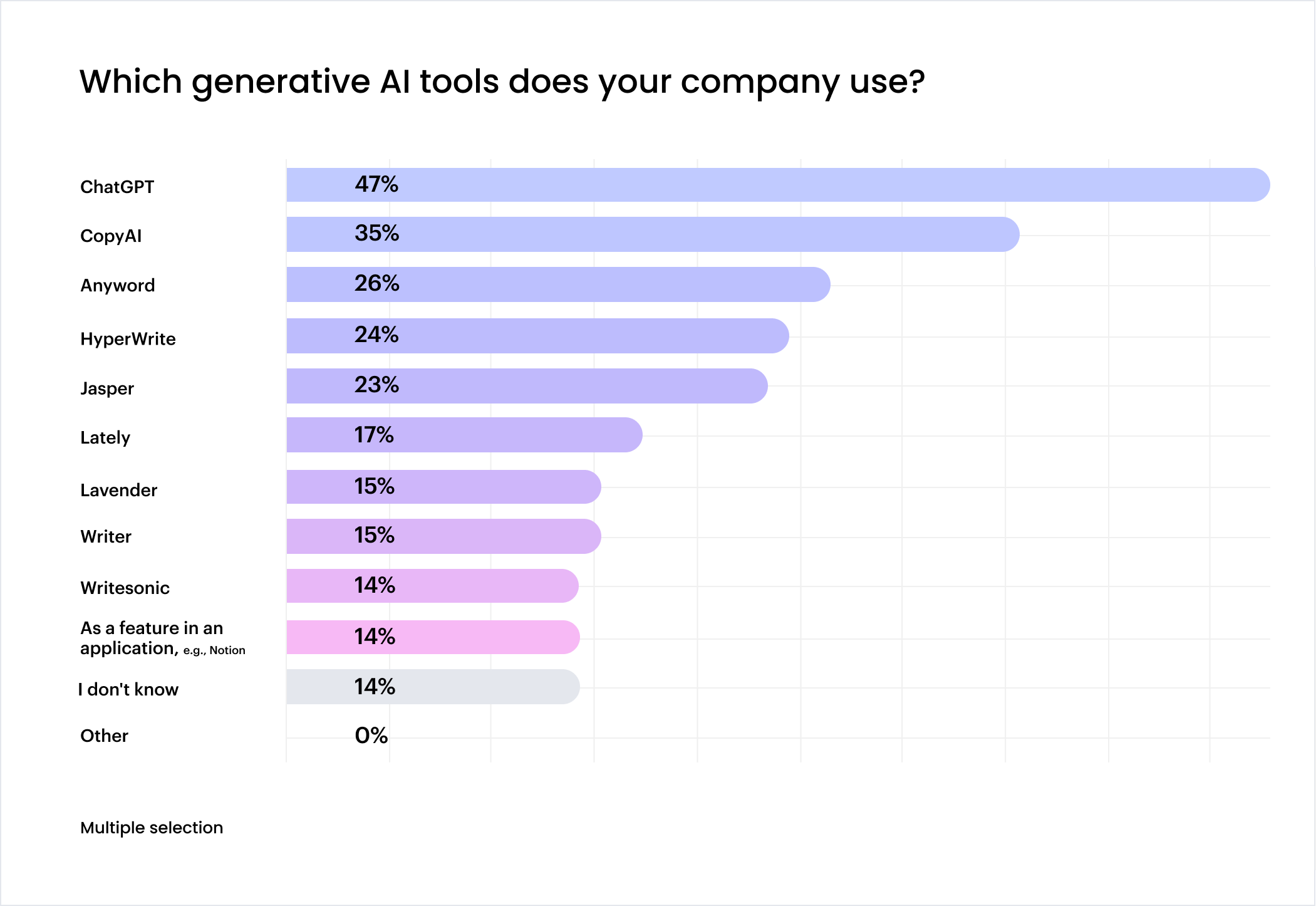

ChatGPT is everywhere in the workplace, with 47% of companies (and 52% of those in regulated industries) using it. But here’s the catch: this popularity poses significant security, privacy, and brand reputation risks.

Some generative AI tools, ChatGPT among them, can include user data in their training set. Anything that ends up in that training data can potentially be exposed, including personal data, financial data, or sensitive intellectual property. And the risk is real: in our survey, 46% of respondents believe someone in their company may have inadvertently shared corporate data with ChatGPT. Oops!

Many organizations concerned about data privacy feel they have little choice but to ban it. In fact, ChatGPT is currently the most-banned generative AI tool: 32% of companies have banned it.

Deutsche Bank, for example, has banned the use of ChatGPT and other generative AI tools while it works out how to use them without compromising the security of its clients’ data.

And it’s not just corporations that are banning ChatGPT. Whole countries are doing it too. Italy, for instance, temporarily banned ChatGPT after a security incident in March 2023 that let some users see the titles of other users’ chat histories.

AI regulation depends on where in the world you are

Italy isn’t the only European nation balking at the challenge generative AI poses to data privacy. The entire European Union is all-in on data privacy laws. Its ironclad General Data Protection Regulation (GDPR) is so strict that it’s given Google cold feet: the company has delayed the launch of its AI chat service, Bard, in Europe. A proposed law to regulate AI within the European Union, the AI Act, is also in the works.

But hop across the pond to the U.S., and it’s a different story. The U.S. government has historically been late to the party when it comes to tech regulation. So far, Congress hasn’t passed any new laws governing how industry uses AI. And as Sen. Michael Bennet (D-CO) puts it: “Congress is behind the eight ball. If our incapacity was a problem with traditional internet platforms like Facebook, the issue is ten times as urgent with AI.”

In this policy lull, tech firms are waiting impatiently for government clarity that seems to be arriving slower than dial-up. While some businesses are enjoying the regulatory free-for-all, the lack of rules leaves companies dangerously short on the checks and balances needed for responsible AI use.

Measures to safeguard your data and privacy while using AI

In light of the above, the AI landscape might seem like the wild west right now. So when it comes to AI and data privacy, you’re probably wondering how to protect your company.

Here are some steps you can take to safeguard your company’s data and privacy while using AI.

Take stock of what tools your people are using

Understanding the AI tools your employees use helps you assess potential risks and vulnerabilities that certain tools may pose.

Conduct an assessment to identify the tools, software, and applications employees use for their work. This includes both official tools provided by the organization and any unofficial tools individuals may have adopted on their own. As part of this process, evaluate each tool’s security and privacy settings, along with any third-party integrations.

Develop use cases

The best way to make sure that tools like ChatGPT, or any platform built on OpenAI’s models, are compatible with your data privacy rules, brand ideals, and legal requirements is to test them against real-world use cases from your organization. That gives you a consistent basis for evaluating different options.

For example, if your company is a content powerhouse, then you need an AI solution that delivers the goods on quality, while ensuring that your data remains private. So, round up use cases around content creation, editing, and distribution. Maybe you need an AI tool for SEO-friendly topic suggestions, or for quick grammar checks. With these in hand, you can weigh up the different AI solutions out there.

And if ChatGPT can’t provide you with the level of security you need, then it’s time to hunt for alternatives with better data protection features.

Learn about the security and privacy features of each AI tool

In your quest for the best generative AI tools for your organization, put security and privacy features under the magnifying glass 🔎

Learn how large language models (LLMs) use your data before investing in a generative AI solution. Does it store data from user interactions? Where is it kept? For how long? And who has access to it? A robust AI solution should ideally minimize data retention and limit access.

Additionally, to be truly enterprise-ready, a generative AI tool must meet established security and privacy standards, such as SOC 2 or ISO/IEC 27001, and comply with regulations like GDPR where they apply. It’s critical to ensure that the tool protects sensitive data and prevents unauthorized access.

Develop an AI corporate policy

An AI corporate policy is your roadmap to ethical AI.

The policy should set expectations for the proper use of AI, covering essential areas like data privacy, security, and transparency. It should also provide practical guidance on using AI responsibly, set clear boundaries, and define how AI use will be monitored and overseen.

Plus, plan for data leakage scenarios. Working through them helps you understand how a breach would affect your organization, and how to prevent and respond to one.

Get your employees on board the AI privacy train

Train your employees on data privacy and the importance of protecting confidential information when using AI tools.

Hook them up with information on how to recognize and respond to security threats that may arise from the use of AI tools. Make sure they also have access to up-to-date resources on data privacy laws and regulations, like webinars and online courses. If necessary, encourage them to attend additional training sessions or workshops.

Dive deeper into developing corporate policies and employee training for responsible AI usage.

Choose AI tools that put your company’s data privacy at the forefront

Shopping for a generative AI tool right now is like being a kid in a candy shop – the options are endless and exciting. But don’t let the shiny wrappers and tempting features fool you. Go for tools that have robust security measures and follow stringent privacy norms. It’s all about ensuring that your ‘sugar rush’ of AI treats doesn’t lead to a privacy ‘cavity.’

At WRITER, privacy is our top priority. Our Palmyra family of LLMs is fortified with top-tier security and privacy features, ready for enterprise use. Think human-like reasoning, rock-solid output, brand integrity, and total compliance with security and privacy standards. We also offer self-hosted LLMs for the ultimate safeguard against third-party data exposure.

Plus, WRITER doesn’t store customer data for training its foundational models. Whether you’re building generative AI features into your apps or empowering your employees with generative AI tools for content production, you don’t have to worry about leaks.

Palmyra powers WRITER customer accounts, and it’s also open source and available for free download on Hugging Face. Learn more about how you can use it to build your own LLM environment.