

Brand safety and AI

Protecting your brand and reputation in a new tech landscape

🚨🚨 That’s the sound of the (brand) police warning you to put that chatbot down in the name of brand safety! As companies rush to embrace generative AI tools, take a beat to think about the potential impact on your brand.

On one hand, AI can help you massively scale and speed up brand safety review processes by flagging and correcting non-compliant content.

On the other hand, AI’s tremendous capacity to increase productivity also makes it easier for people across your org to create loads of content that violates brand guidelines, plagiarizes copyrighted work, infringes on trademarks, or makes false claims.

With a bit of training, and by taking time to evaluate the tools you’re using, you can protect your brand reputation and reduce the chance of the brand police department rolling up to your desk to take you into custody. Consider this your handbook for learning how to navigate the brand safety hazards presented by the generative AI landscape — and also how to wield AI tools to ensure brand safety, no matter who creates content for your organization.

- Brand safety is the practice of protecting a company’s brand and reputation by monitoring online content related to the brand.

- With the rise of AI tools, there’s an increased risk of brand liability due to content containing inaccuracies, bad actors imitating the brand, and potential data privacy and security risks.

- Develop a corporate AI policy that includes guidelines for using AI tools and who’s responsible for reviewing and approving content.

- Monitor and review AI-generated content internally, and use AI tools to expand content moderation capabilities externally.

- When selecting AI tools, review their privacy and security features to make sure they keep your data secure.

What is brand safety?

Brand safety is the practice of protecting a company’s brand and reputation by minimizing its exposure to risk and legal liability. It involves implementing strategies to ensure the brand isn’t associated with offensive, misleading, or controversial material. Brand safety violations often include:

- Misusing a company’s logo or trademark

- Making false claims about a company’s products or services

- Engaging in deceptive advertising or marketing practices

- Producing or distributing counterfeit goods

- Using copyrighted material without permission

- Failing to adhere to applicable laws and regulations governing advertising and marketing

- Engaging in deceptive or misleading business practices that harm consumers or competitors

At a high level, companies achieve this by monitoring online content related to the brand and taking steps to eliminate anything that could create a negative association with it. This includes internally created content as well as user-generated content like online ratings and video reviews.

Does more content mean more brand safety problems?

Thanks to AI tools, people’s ability to generate digital content has grown exponentially. It seems like every few months there’s a hot new AI tool you can use to get creative. With just a few clicks, you can create text, images, audio, and even video based on the inputs you provide.

In a nutshell, this creates several potential challenges for companies:

- More internally created content to review and keep track of: More folks in your company are probably creating content thanks to AI tools, so you want to be sure it aligns with your brand and values and doesn’t introduce mixed messaging across departments.

- Trolls and bad actors may use AI to imitate the brand: Bad actors can use AI to create convincing imitations of your brand’s content, which can damage the company’s reputation if people don’t immediately realize that what they’re viewing is fake.

- Internal and external content may contain more inaccuracies: AI-generated content can contain inaccuracies because (shocker!) AI doesn’t know everything. But it’s very good at sounding like it does, which can be a headache for brands, especially if the people creating content don’t realize the info they’re getting back is wrong.

- AI tools can pose a risk to data privacy and security: Some AI tools, like ChatGPT, may use the data people enter to train their models. That means any information you put in your prompts, including personal data, financial data, or sensitive intellectual property, could end up in that training data. So if folks on your team get swept up in the AI hype and don’t know any better, they could be inadvertently putting your company’s data at risk.

This is not the Pope wearing a puffer jacket, though it also looks incredibly convincing.

These examples are more 🤯 than 😨, but only because they’re relatively harmless. Now think of it from a brand perspective. What if it were your founder in a video they’d never recorded? Or a viral photo of your CEO decked out in your top competitor’s branded swag? Or a blog post listing all the top features of your product, except your product can’t actually do any of the things it mentions? Scenarios like these could start to erode people’s trust in your brand and the company’s leadership.

Measures for maintaining and protecting your brand while using AI

Did those examples make your palms sweat a bit? Don’t worry — it’s not as scary as it sounds.

Sure, AI tools are having a seismic impact on the way we all create and consume content, but that’s incredibly exciting too. To protect your brand in this new AI-powered landscape, the best thing you can do is equip yourself with up-to-date knowledge and strategies to handle the new terrain. And it’s your lucky day: we’ve got some practical strategies right here.

Develop a corporate AI policy

OK, we know “developing a policy” isn’t the most glamorous or exciting thing to add to your to-do list. But it’s worth it, because a policy gives everyone clear guidance on using AI tools at your company.

The policy should include:

- Guidelines on how and when to use AI tools (and, crucially, when not to use them)

- Who’s responsible for overseeing the use of AI tools, including reviewing and approving content before it’s published or shared externally

- Processes for training employees on using AI tools, including how to review their output and spot bias in the content they create

- Any security measures to protect your company’s data and other confidential information

- Guidance on reviewing and choosing AI tools that are secure and will keep your data private

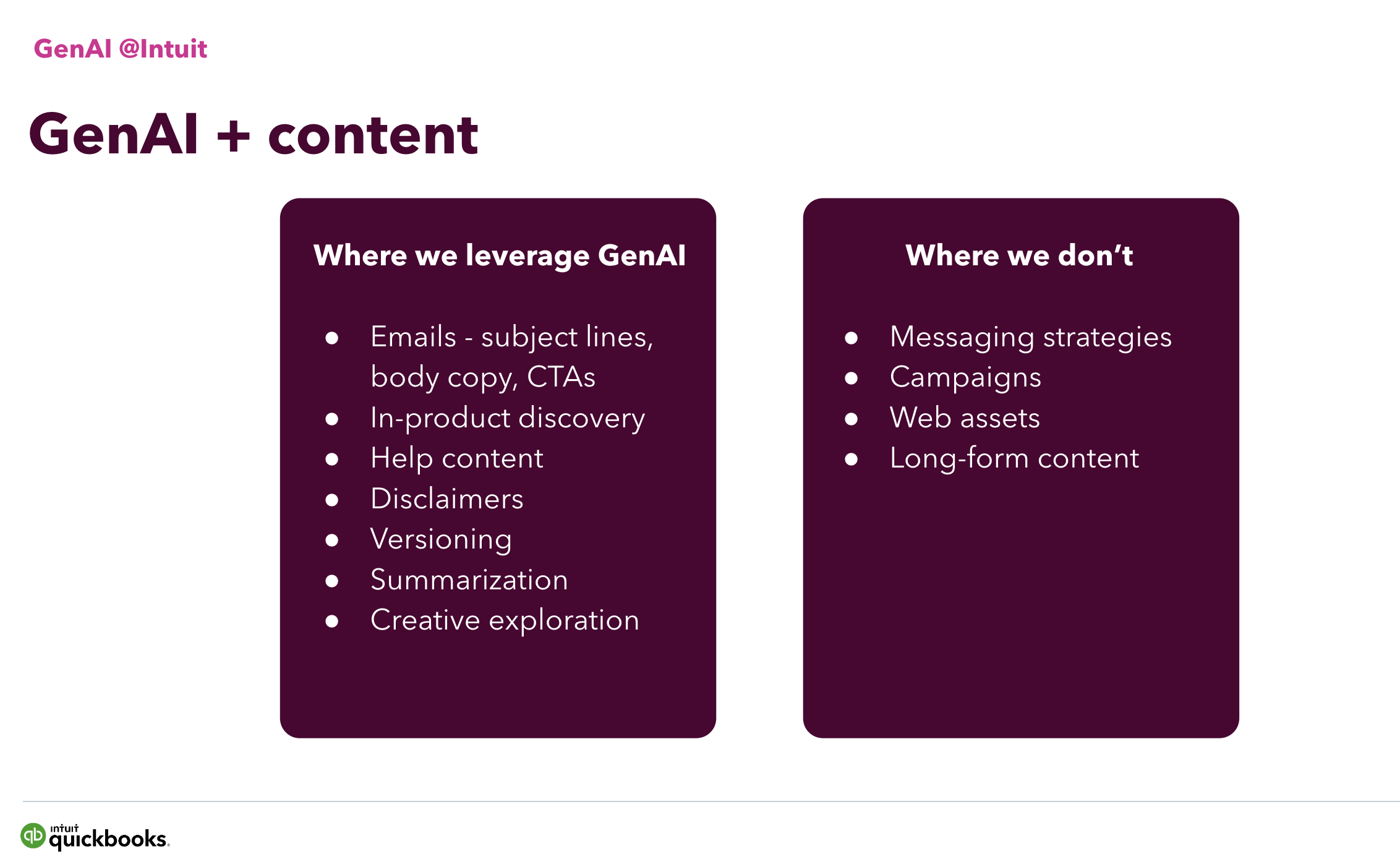

The content team at Intuit brought together a working group to establish clear principles for using generative AI, including when not to use it. In recent months, companies like Buffer have also made their AI use policies available to the general public, a step toward protecting the safety of their brands.

Monitor and review AI-generated content

If your team’s using generative AI writing tools to create or rewrite content, make sure you have a human with editorial skills reviewing that content before you hit publish. This is where you can check for accuracy, plagiarism, and quality, and confirm that the content the tools have generated works for your brand.

Here are some resources to help your editorial teams stay on top of brand safety:

- Why you can’t replace human insight: The importance of editorial skills in the AI era

- Fact-checking in the age of AI: What business teams can do to stop the spread of misinformation

Use AI tools to detect and correct brand safety violations

Sometimes you have to fight fire with fire. And sometimes the best defense against both AI-related and human-caused brand risks is AI itself. The WRITER generative AI platform has a wide range of features designed to protect and support brand safety wherever people are writing:

- Sniff out suspected AI-generated text from spammers or internal stakeholders with an AI content detection tool.

- Flag possible AI hallucinations or misinformation with claim detection mode.

- Highlight off-brand or harmful language and suggest alternatives with in-line suggestions.

- Ensure your content isn’t plagiarized with the plagiarism detection feature.

- Rephrase text that appears too similar to another source with the rewrite tool.

Harness generative AI to transform off-brand content

Brand violations are like cockroaches: once you’ve discovered one, you’ll find a hundred more occurrences of the same problem. Generative AI solutions like WRITER can help exterminate them all at once.

One example of this in practice is 6sense. The team used WRITER to rewrite a hundred blog posts from a company 6sense had acquired so that they aligned with 6sense’s brand voice. “We got them all rewritten in the 6sense brand style. They needed some editing here and there, but that’s okay,” said Latané Conant, 6sense’s Chief Market Officer.

Use AI tools to expand content moderation capabilities

So far, the tips we’ve shared have focused on the interplay between AI tools and the content you create internally. But other types of AI tools can help protect your brand from inappropriate or inaccurate content that’s created externally.

For example, AI-based content moderation tools can help automate the process of screening large volumes of user-generated content for material that’s offensive or inappropriate and could damage the brand. These tools can also flag content that may breach copyright, privacy, or ownership laws.

Some AI tools can detect offensive language, images, and videos, even when they’re in a language other than the one your company works in. They can also analyze the sentiment of online conversations and identify potential risks before they grow out of control. This allows companies to respond proactively and take steps to protect their brand.

Review the privacy and security features of your AI tools

We know there are a ton of different AI tools to choose from. Resist the urge to chase after every shiny new tool you hear about. Instead, take the time to review your options before jumping straight in.

Look for features that help you protect your brand, its data, and its reputation. For example, ask where your data is stored and who else can access it.

Look for AI tools that protect and support your brand — not just their own

Your brand reputation is hugely valuable to your business. If a brand did something to break their trust, 89% of consumers wouldn’t continue using its products.

So whether you’re just dipping a toe into the world of AI-generated content, or your team’s jumped in head-first, you want to be confident you’re not eroding your brand reputation as you do so.

At WRITER, we understand how important consistency, quality, and privacy are for building reputable brands. Unlike with other AI products, you can securely train WRITER on your company’s data, content, and style guidelines.

To discover more about how the WRITER generative AI platform works to responsibly deliver and transform on-brand content, check out The leaders’ guide to adopting generative AI.