Fact-checking in the age of AI

What business teams can do to stop the spread of misinformation

In the age of AI, the truth is often hard to find. With ‘fake news’ and false information spreading faster than ever, it’s vital for writers on business teams to spot inaccuracies before they get published — especially when using AI tools. Fortunately, there are practical steps that writers can take to ensure accuracy and stop the spread of misinformation.

In this article, we’ll look at what fact-checking is, why you can’t skip it for AI-generated content, and how to spot and correct misinformation. We’ve also compiled a list of tips to help you improve your own fact-checking.

Let’s start with a look at what fact-checking is all about!

- Fact-checking is the process of verifying the accuracy of claims made in a piece of content.

- AI tools can’t detect lies or falsehoods, so it’s important to fact-check AI-generated content to prevent errors with serious consequences.

- Spot and correct misleading information by flagging statistics, looking out for logical inconsistencies, and reviewing the tone of the piece.

- Rely on trustworthy sources and avoid confirmation bias when fact-checking.

- Choose an AI writing tool with a built-in claim checker to quickly scan for inaccuracies and verify quotes and sources.

Fact-checking: not just for journalists and students

Typically, we think about fact-checking as it relates to journalism, politics, and academia. But as more brands establish themselves as industry experts and trusted sources of information, fact-checking becomes more important for the marketers and creatives that own content generation.

The earliest instances of fact-checking date back to the early 1900s, when publications like TIME Magazine began hiring dedicated fact-checkers and researchers to check articles for accuracy before publication. But over time, journalists took ownership of fact-checking their own work. Then, the internet gave everyone access to shared information and made it possible for anyone to become a fact-checker.

But today, there’s more information available than ever before, and audiences struggle to sift through it all to find the truth. According to Pew Research, fewer than 25% of Americans know which news outlets do their own reporting. That makes it all the more important for businesses to do their own fact-checking and ensure they’re giving their audience accurate information it can trust.

The business benefits of a strong fact-checking process

Fact-checking is not only essential in today’s digital world, where misinformation spreads via social media at lightning speed; it’s also good business. Here are just a few of the ways organizations can benefit:

1. Enhances accuracy and integrity of content: A strong fact-checking process helps ensure that published content is accurate and trustworthy. This keeps messaging consistent across content and builds a reputation for reliability and honesty.

2. Reduces costs: Fact-checking saves time and money since it minimizes the chances of costly and embarrassing mistakes.

3. Builds trust and credibility: A clear, consistent fact-checking process shows customers that a brand is reliable and helps differentiate it from competitors.

4. Reduces legal risk: Fact-checking helps mitigate the risk of libel allegations and other legal action by catching and correcting potential issues before they become problems.

Do you really need to fact-check AI-generated content?

Generative AI tools have studied most of the internet. So it’s safe to assume that they’re already up to date on the latest information, and you can skip the fact-checking stage of edits — right?

Wrong.

Generative AI is designed to sound human. It’s built precisely to create sentences that make it sound like it knows what it’s talking about. But it’s important to remember that generative AI is just a program. It isn’t a human being, and it doesn’t have the same level of understanding as a human does.

Take Google’s AI chatbot, Bard, as an example. In February 2023, Bard shared false information in an ad, resulting in a loss of about $100 billion in the market value of Alphabet, Google’s parent company. This incident shows that even small mistakes can have significant impacts on businesses.

Generative AI tools are only as good as the data sets they’re trained on. If the base of information the tool has to work with contains bias or misinformation, it’s much more likely to replicate inaccuracies and amplify stereotypes.

For example, when Textio co-founder Kieran Snyder asked ChatGPT to write performance reviews, she found that the outputs often contained gendered assumptions. When prompted to write “feedback for a bubbly receptionist,” the model presumed the receptionist was female, using pronouns like “she” throughout.

Another major risk of skipping fact-checks on AI-generated content is AI hallucinations: outputs that sound factual but are actually made up. This kind of misleading information is why human oversight is important.

To make things worse, many generative AI models (including the GPT-3 family) were trained on data sets with little or no information published after 2021. If prompted to talk about current events, they will likely make things up.

Then there’s the lack of critical analysis. The large language models (LLMs) that power AI tools have access to a lot of data, and they get better at understanding and summarizing information every day. But they can’t detect lies or falsehoods by themselves.

Plus, as AI models evolve and add more information to their data sets, there’ll be an increased risk that they’ll train on AI-generated content that contains falsehoods, which will just add to the confusion. Like a snake eating its own tail, unchecked AI-generated content will create an eternal cycle of new BS born from old BS.

Finally, given the tidal wave of consumer interest in AI-enhanced tools and services, fact-checking AI outputs is vital. More than 70% of consumers believe that AI has the “potential to impact the customer experience.” Just consider how much more helpful chatbots could be with the help of generative AI. But also consider how much customer trust and loyalty your business stands to lose should those chatbots and help docs deliver inaccurate or even harmful information.

Failing to fact-check claims made by AI leaves businesses at risk

If marketers don’t check AI-generated content for accuracy, the business consequences can be serious. Inaccurate information can lead to the following:

- Ethical implications, such as a lack of accountability for incorrect information spreading online.

- Damaged brand reputation and lost clients, as audiences stop trusting a company that publishes false information.

- Lower search visibility, since Google’s ranking systems (beginning with the Panda algorithm update) demote low-quality, untrustworthy content.

How to spot ‘fake news’ (and other misinformation)

Any time someone in your organization uses generative AI, they should default to verifying and fact-checking everything.

Here’s how you can identify potentially false information that needs to be fact-checked:

- Flag any statistics, studies, or claims made throughout the text so you can investigate them further if necessary. If an article states that a certain product has been scientifically proven to be effective, take a moment to research the study that supports the claim.

- Look out for logical inconsistencies. A story that contains contradictory or implausible information may not be reliable, for example, if it calls a giant corporation such as Meta a “small business.”

- Highlight claims that seem sensational or dramatic, especially superlatives like “best” or “most,” since they often signal exaggeration.

It’s also important to pay attention to the tone of the piece. Generative AI can produce content with an unnatural tone, and if a piece is overly biased or contains inflammatory language, it’s likely to be unreliable.
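The flagging steps above lend themselves to a simple first pass that builds a checklist for a human reviewer. The Python sketch below is a hypothetical illustration, not a feature of any particular tool: the word lists, the regular expressions, and the `flag_claims` helper are all assumptions made for this example. It only points out sentences that contain numbers, references to studies, or superlatives; it cannot tell whether any claim is true, so a person still has to check each flagged sentence against a reliable source.

```python
import re

# Hypothetical, illustrative word lists; a real team would tune these.
SUPERLATIVES = {"best", "most", "worst", "least", "biggest", "fastest", "greatest"}
STUDY_WORDS = {"study", "survey", "research", "report", "proven", "according"}

# Matches figures such as "59%", "$100", or "2021".
NUMBER_RE = re.compile(r"\$?\d[\d,.]*%?")


def split_sentences(text: str) -> list[str]:
    """Naively split text into sentences after ., !, or ? plus whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def flag_claims(text: str) -> list[tuple[str, list[str]]]:
    """Return (sentence, reasons) pairs for sentences a human should verify."""
    flagged = []
    for sentence in split_sentences(text):
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        reasons = []
        if NUMBER_RE.search(sentence):
            reasons.append("statistic")
        if words & STUDY_WORDS:
            reasons.append("cites a study or source")
        if words & SUPERLATIVES:
            reasons.append("superlative")
        if reasons:
            flagged.append((sentence, reasons))
    return flagged


if __name__ == "__main__":
    draft = (
        "Our product is the best on the market. "
        "A recent study found that 59% of teams saw faster turnaround. "
        "The team met on Tuesday."
    )
    for sentence, reasons in flag_claims(draft):
        print(reasons, "->", sentence)
```

Run on the sample draft, the first two sentences are flagged (a superlative, then a statistic plus a study reference) and the third is not. Keyword matching like this will miss claims and raise false alarms, which is why it works best as a prompt for human review rather than a verdict.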

Three fact-checking tips to improve AI-assisted content

As a general rule, writers using AI tools should take the time to research and double-check every fact and figure in their content. But not every research method or source of information is equally reliable. That’s why it’s important to set clear standards for verifying material before sending it out into the world.

Here are some practical tips to follow when faced with potentially false or inaccurate information:

1. Rely on primary sources whenever possible

Primary sources generally provide the most reliable information since they offer firsthand accounts of events or topics. Secondary sources are usually less reliable because they are based on what others have said about the event or topic. However, they can add another perspective and are a reasonable fallback when no primary sources are available.

2. Identify trustworthy sources of information

Not all content is created equal. Even primary sources can contain misleading information. That’s why it’s important to identify experts who offer reliable information and insights that add context.

For example, in the world of professional business writing, Harvard Business Review, McKinsey, and Forrester are all great sources of well-researched information.

3. Avoid confirmation bias

AI is inherently prone to bias. It relies on prompts from humans to create content, and because all humans have internal biases, their prompts tend to steer the output toward content that supports their beliefs, not necessarily the facts.

For example, if a marketer is creating an informational article about environmental issues, they might write a prompt built on the claim that “electric vehicles are worse polluters than conventional cars,” a contention that numerous researchers have disproven but that continues to surface in the debate over climate change.

An AI tool given that prompt is likely to produce content, or cite unreliable data, that supports the opinion without considering other perspectives.

To avoid confirmation bias, don’t accept the first source that backs up a claim. Instead, research topics in depth and compare multiple perspectives to ensure accuracy.

Choosing the right AI writing tool for fact-checking content

AI-generated content has experienced a boom in popularity, and it’s only growing — 59% of companies we surveyed plan to invest in a generative AI tool (if they don’t have one already).

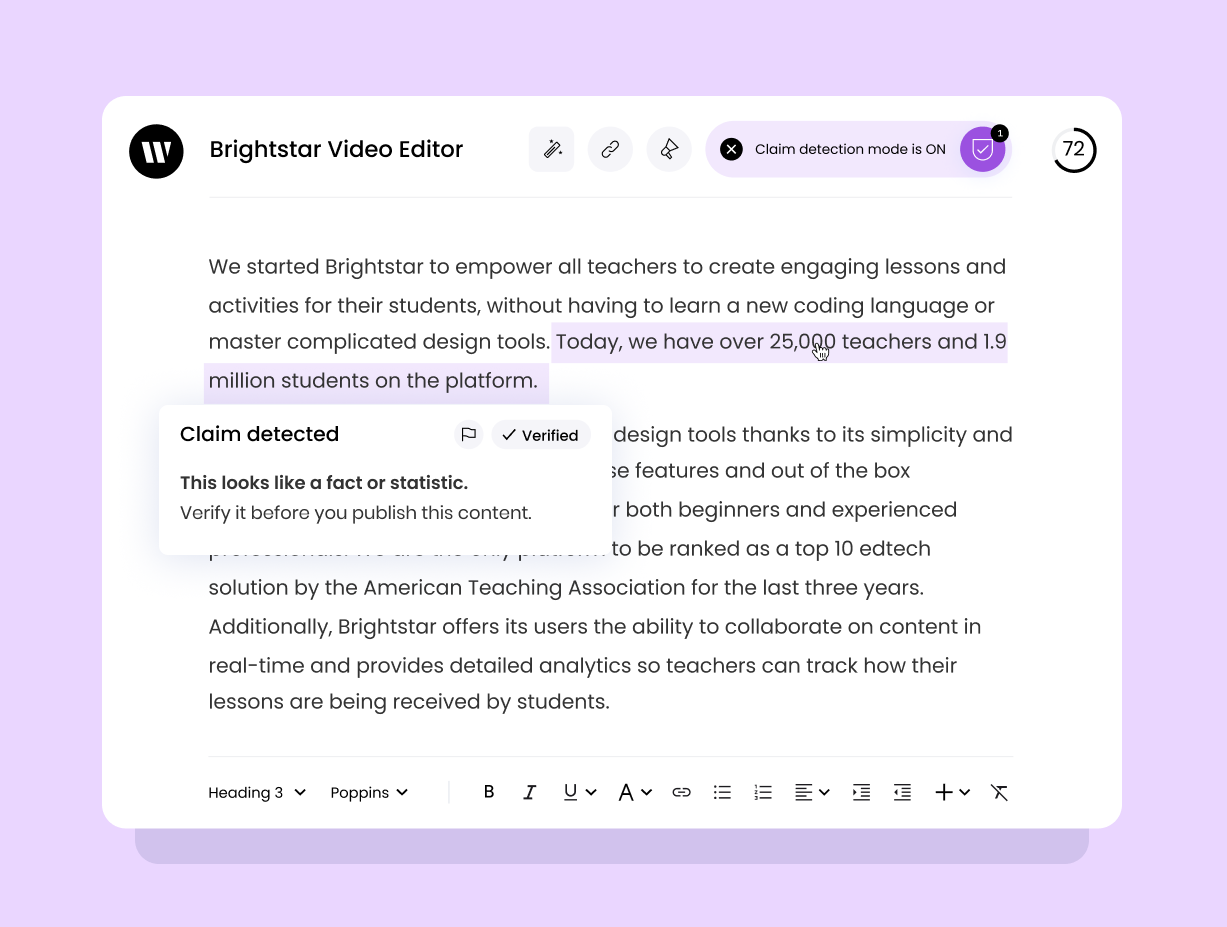

To stay competitive in a rapidly evolving market, choose a tool that can quickly scan for inaccuracies, help verify quotes and sources, and reduce the risk of AI hallucinations.

Writer is a great option because its built-in claim checker helps writers identify potentially false or misleading statements. The tool scans articles for claims and flags any potential discrepancies. This way, writers can easily double-check their work and avoid publishing inaccurate information in the first place.

You can even cross-check AI-generated content against your own proprietary information with a Knowledge Graph. This soon-to-be-released feature helps verify company-specific claims by connecting data sources (like your company wiki and knowledge bases) and using them as an information layer to fact-check against.

These kinds of offerings make it easy to leverage the benefits of generative AI while preventing the spread of misinformation.