Escaping AI POC purgatory with graph-based RAG

Despite billions of dollars invested, generative AI has been disappointing for enterprises. Our global survey of 500 technology leaders shows that most in-house generative AI projects fall short, with only 17% rated as excellent. This makes it challenging for CIOs to scale AI projects beyond the Proof of Concept (POC) stage. The main hurdles? Large language models (LLMs) lack the specialized knowledge needed for most business applications, and low accuracy rates often put production-ready applications out of reach.

An approach called retrieval-augmented generation (RAG) is gaining popularity as a way to meet this challenge. RAG combines the generative prowess of LLMs with targeted information retrieval from enterprise data, producing more accurate and relevant AI outputs. However, many teams are still stuck in “POC purgatory,” making incremental changes without meaningful progress.

Graph-based RAG offers a way out by improving LLMs with targeted data retrieval. It’s not just a better solution — it’s the only scalable solution for generative AI in business contexts. Unlike standard RAG approaches, which often fail due to issues like high hallucination rates and crude chunking, graph-based RAG provides the precision and depth required for real-world applications. This makes it the emerging, dominant design for enterprise-grade AI, ensuring scalability and consistent performance where other methods fail.

At WRITER, our approach to RAG fundamentally reshapes how organizations use AI, helping them move from POC to powerful, scalable implementations that drive business value. We’ve helped hundreds of enterprises scale AI using our graph-based RAG solution, Knowledge Graph. Benchmarking studies show our approach outperforms traditional methods in accuracy and speed. This reduces decision-making time and minimizes errors, which improves business operations and prevents costly mistakes.

- RAG combines the generative prowess of LLMs with targeted information retrieval from enterprise data, resulting in more accurate and relevant AI outputs.

- Graph-based RAG enhances LLMs with targeted data retrieval, providing the precision and depth required for real-world applications.

- Graph-based RAG offers a scalable, flexible, and accurate AI system that’s crucial for smooth deployment in production environments.

- Graph-based RAG has real-world applications across industries, improving efficiency and service delivery.

- Graph-based RAG simplifies complex decision-making, reduces operational costs, and improves customer interactions, making it a transformative ally in scaling AI from prototype to powerhouse.

The limitations of vector-based RAG

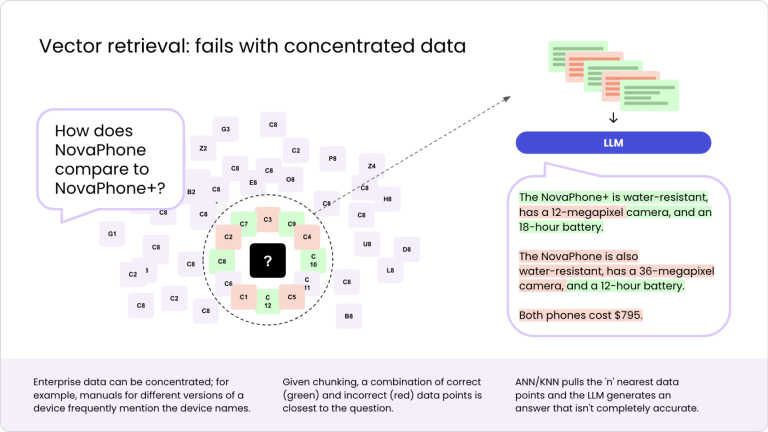

The main limitation of vector-based RAG, the approach many teams are experimenting with and failing to scale, lies with the K-Nearest Neighbors (KNN) algorithm. KNN has existed since 1951 and is good at finding the closest vector embeddings during database retrieval. But it becomes slow and inefficient on large datasets and high-dimensional data, where useful patterns are hard to spot. It also needs to keep the entire indexed dataset in memory, which gets expensive and cumbersome. It is sensitive to outliers and noise, both of which can distort results. Plus, choosing the right number of neighbors, K, is tricky: a poor choice leads to overfitting or underfitting the data.
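To make the cost concrete, here is a minimal brute-force KNN retrieval sketch. It assumes documents have already been embedded as fixed-length vectors (the toy 3-dimensional vectors and document ids are made up) and scores every stored vector against the query with cosine similarity. The function name `knn_search` is hypothetical. Because each query touches all N stored vectors of dimension d, the work grows as O(N·d), and the whole index has to live in memory.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def knn_search(index: Dict[str, Vector], query: Vector, k: int) -> List[Tuple[str, float]]:
    """Exact k-nearest-neighbor search: compares the query with EVERY stored vector."""
    scored = [(doc_id, cosine_similarity(vec, query)) for doc_id, vec in index.items()]
    # Highest similarity first; ties broken by document id for determinism.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:k]


# Toy index of hypothetical chunk embeddings.
index = {
    "q3_earnings": [0.9, 0.1, 0.0],
    "q4_earnings": [0.8, 0.2, 0.1],
    "brand_guide": [0.0, 0.1, 0.9],
}
print([doc_id for doc_id, _ in knn_search(index, [1.0, 0.0, 0.0], k=2)])
# ['q3_earnings', 'q4_earnings']
```

The full scan is what makes results exact; it is also why latency and memory grow with the corpus.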

Approximate Nearest Neighbors (ANN) algorithms, the usual workaround, have their own limitations. They handle large datasets more efficiently, but they sacrifice accuracy for speed and need careful parameter tuning.
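The speed-for-accuracy trade-off can be illustrated with one simple ANN family, random-hyperplane locality-sensitive hashing (LSH). Production vector databases more often use other structures, such as HNSW graphs or IVF indexes; this toy, with the hypothetical class `RandomHyperplaneLSH`, only shows the general idea. Vectors are hashed by which side of a few random hyperplanes they fall on, and a query is compared only against vectors in its own bucket. That is faster than a full scan, but a true neighbor that hashed into a different bucket is simply missed, and `num_planes` is exactly the kind of parameter that needs tuning.

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Vector = List[float]
Signature = Tuple[bool, ...]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


class RandomHyperplaneLSH:
    """Toy approximate nearest-neighbor index based on random-hyperplane hashing."""

    def __init__(self, dim: int, num_planes: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # More planes -> smaller buckets -> faster queries, but a higher
        # chance that the true nearest neighbor lands in another bucket.
        self.planes: List[Vector] = [
            [rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)
        ]
        self.buckets: Dict[Signature, List[Tuple[str, Vector]]] = defaultdict(list)

    def _signature(self, vec: Vector) -> Signature:
        # One bit per hyperplane: which side of the plane the vector falls on.
        return tuple(sum(p * x for p, x in zip(plane, vec)) >= 0 for plane in self.planes)

    def add(self, doc_id: str, vec: Vector) -> None:
        self.buckets[self._signature(vec)].append((doc_id, vec))

    def query(self, vec: Vector, k: int) -> List[Tuple[str, float]]:
        # Only the query's bucket is scored; other buckets are never examined.
        candidates = self.buckets.get(self._signature(vec), [])
        scored = [(doc_id, cosine_similarity(v, vec)) for doc_id, v in candidates]
        scored.sort(key=lambda item: (-item[1], item[0]))
        return scored[:k]


lsh = RandomHyperplaneLSH(dim=3, num_planes=4, seed=42)
lsh.add("q3_earnings", [0.9, 0.1, 0.0])
lsh.add("brand_guide", [0.0, 0.1, 0.9])
print(lsh.query([0.9, 0.1, 0.0], k=1))
```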

Other limitations include loss of context from crude chunking methods, which split data into small segments that may separate related information, leading to incomplete or misleading results. Dense and sparse mappings of data can also cause inaccuracies and inefficiencies, particularly in environments with complex or voluminous data, such as enterprise systems. Finally, the cost and rigidity of maintaining vector databases pose significant challenges: they require extensive resources to update and manage, which limits their flexibility and scalability in dynamic settings.
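A tiny example shows how naive chunking separates related facts. The sketch below, using a hypothetical helper `fixed_size_chunks` and a made-up sentence about fictional companies, splits text every `chunk_size` characters with no regard for sentence or entity boundaries. It is a deliberately crude strategy, not a description of any particular product's chunker.

```python
from typing import List


def fixed_size_chunks(text: str, chunk_size: int, overlap: int = 0) -> List[str]:
    """Naive character-based chunking that ignores sentence and entity boundaries."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


doc = "Acme acquired Beta Corp in 2021. Beta Corp makes industrial sensors."
for chunk in fixed_size_chunks(doc, chunk_size=24):
    print(repr(chunk))
```

With `chunk_size=24`, the first chunk is "Acme acquired Beta Corp " and the year "2021" lands in the second chunk, which never mentions Acme. A retriever that returns only one of those chunks loses the connection between the acquirer and the date.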

How graph-based RAG frees companies from POC limbo

As AI projects move from the drawing board to the real world, graph-based RAG, powered by a specialized LLM, offers a strong alternative to vector-based RAG. This method isn't just about handling data; it weaves a network of meaningful connections that boosts the system's accuracy and adaptability, key traits for thriving in dynamic production environments.

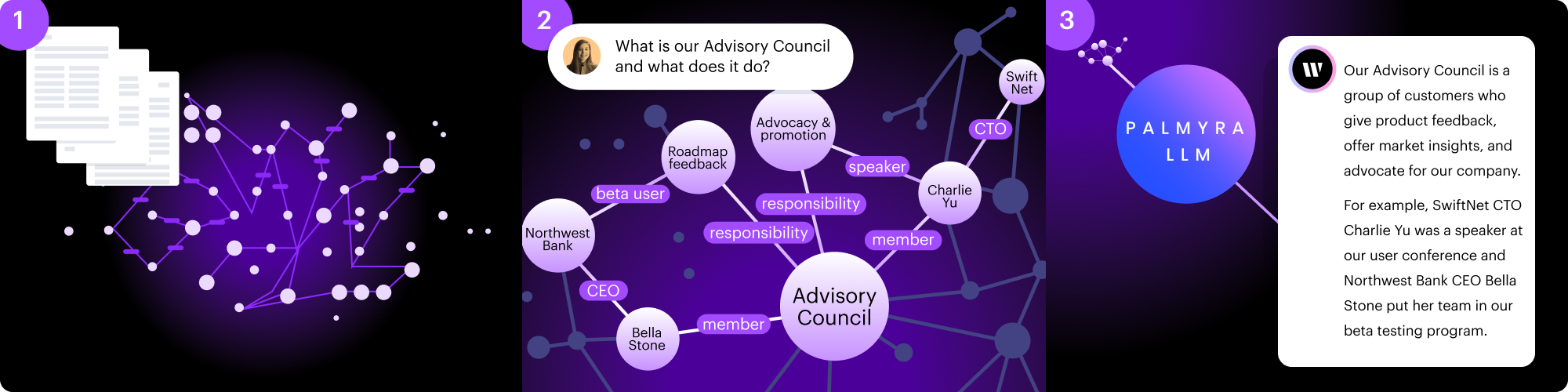

At the heart of this system lies a three-step operational framework: data processing, query and retrieval, and answer generation.

The first step, data processing, maps entities as nodes and their interactions as edges, creating a scalable graph that evolves as new data arrives. This isn't just a technical necessity; it's a strategic move to keep up with the growing complexity and volume of business data.
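The article does not describe how WRITER Knowledge Graph stores its graph internally. As a rough illustration only, the sketch below assumes an upstream step (for example, an LLM or NLP pipeline, not shown) has already extracted entities and relationships as (subject, relation, object) triples. The hypothetical `KnowledgeGraph` class maps them to nodes and labeled edges, and adding a later batch of triples touches only the nodes it mentions, which is what lets the graph grow incrementally.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


class KnowledgeGraph:
    """Toy in-memory graph: entities become nodes, relations become labeled edges."""

    def __init__(self) -> None:
        # node -> set of (relation, neighbor) pairs
        self.edges: DefaultDict[str, Set[Tuple[str, str]]] = defaultdict(set)

    def add_triples(self, triples: Iterable[Triple]) -> None:
        # New facts only touch the nodes they mention; existing edges stay as they are.
        for subj, rel, obj in triples:
            self.edges[subj].add((rel, obj))
            # Record the reverse direction too, so traversal can start from either end.
            self.edges[obj].add(("inverse_" + rel, subj))

    def nodes(self) -> Set[str]:
        return set(self.edges)

    def neighbors(self, node: str) -> Set[Tuple[str, str]]:
        return set(self.edges.get(node, set()))


graph = KnowledgeGraph()
# Hypothetical output of an entity/relation extraction step.
graph.add_triples([
    ("Acme", "acquired", "Beta Corp"),
    ("Beta Corp", "makes", "industrial sensors"),
])
# Later data is added without rebuilding what is already there.
graph.add_triples([("Acme", "headquartered_in", "Canada")])
print(sorted(graph.nodes()))
# ['Acme', 'Beta Corp', 'Canada', 'industrial sensors']
```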

Next comes the query and retrieval phase, which blends natural language processing (NLP), heuristic algorithms, and machine learning to parse queries and pinpoint relevant information. This phase is crucial because it keeps retrieval sharp and efficient as the system scales.
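One simple way to picture graph retrieval is a bounded multi-hop traversal. In this sketch, the hypothetical `find_entities` uses plain substring matching as a stand-in for the NLP and machine-learning components the article mentions, and `retrieve_facts` does a breadth-first search that collects every fact within `max_hops` edges of the matched entities. The graph data is invented, and this is not presented as WRITER's retrieval algorithm.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Graph = Dict[str, List[Tuple[str, str]]]  # node -> [(relation, neighbor), ...]
Fact = Tuple[str, str, str]


def find_entities(query: str, graph: Graph) -> List[str]:
    """Naive entity linking: any node whose name appears in the query text."""
    q = query.lower()
    return sorted(node for node in graph if node.lower() in q)


def retrieve_facts(graph: Graph, seeds: List[str], max_hops: int = 2) -> List[Fact]:
    """Breadth-first traversal collecting facts within max_hops of the seeds."""
    facts: List[Fact] = []
    visited: Set[str] = set(seeds)
    frontier = deque((seed, 0) for seed in seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, neighbor in graph.get(node, []):
            facts.append((node, relation, neighbor))
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return facts


graph: Graph = {
    "Acme": [("acquired", "Beta Corp"), ("headquartered_in", "Canada")],
    "Beta Corp": [("makes", "industrial sensors")],
    "industrial sensors": [("used_in", "manufacturing")],
}
seeds = find_entities("What does the company Acme acquired make?", graph)
for fact in retrieve_facts(graph, seeds, max_hops=2):
    print(fact)
```

The question names only Acme, yet the two-hop traversal reaches "Beta Corp makes industrial sensors", the fact needed for the answer. The `max_hops` limit keeps the retrieved context small; the "used_in manufacturing" fact, three hops away, is left out.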

Finally, in the answer generation phase, the LLM takes center stage, turning the retrieved data points into precise, context-aware responses. This step is vital for AI applications that need to scale, because it ensures that a surge in data doesn't muddy the clarity of the answers. The system's efficient updates and ability to handle dense data make it a reliable and affordable choice for enterprises eager to scale their AI from prototype to powerhouse.
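A common pattern for this last step is to serialize the retrieved facts into a prompt that instructs the model to answer only from that context. The sketch below shows only the prompt assembly. The helper `build_grounded_prompt` and its wording are hypothetical, and the actual LLM call is left out because the article does not specify a model or API.

```python
from typing import List, Tuple

Fact = Tuple[str, str, str]


def build_grounded_prompt(question: str, facts: List[Fact]) -> str:
    """Turn retrieved graph facts into a prompt that restricts the LLM to that context."""
    context = "\n".join(
        f"- {subj} {rel.replace('_', ' ')} {obj}" for subj, rel, obj in facts
    )
    return (
        "Answer the question using only the facts below. "
        "If the facts are insufficient, say so.\n\n"
        f"Facts:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


facts = [
    ("Acme", "acquired", "Beta Corp"),
    ("Beta Corp", "makes", "industrial sensors"),
]
prompt = build_grounded_prompt("What does the company Acme acquired make?", facts)
print(prompt)
# The prompt would then be sent to an LLM; that call is intentionally omitted here.
```

Keeping the context to a short list of explicit facts, rather than long raw chunks, is one way to stop a growing corpus from crowding the prompt.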

This approach underscores why scalable, flexible, and accurate AI systems are crucial for smooth deployment in production environments.

Real-world impact of graph-based RAG across industries

Graph-based RAG has practical applications across multiple sectors, improving efficiency and service delivery. This isn't hypothetical: leading organizations are using WRITER Knowledge Graph today to drive real business results.

Here are just a few examples.

Investor relations

Kenvue, a global consumer goods brand, has built an app to analyze competitor earnings and identify strategic growth opportunities, and has deployed it across several teams in Canada and EMEA. The app helps create strategic playbooks, assists in business planning, and synthesizes market research, supporting informed decision-making and product concept development.

Sales enablement

Commvault, a leader in cyber-resiliency, has built a custom chat app called Ask Commvault Cloud. It lets the company's 800-person go-to-market team get real-time, on-brand answers to product and messaging questions. The app integrates key resources on personas, brand, products, competitors, and industry, and provides actionable insights, including details from prospect companies' 10-K filings. This allows the team to tailor their interactions to the perspectives of different personas, such as a CISO.

Healthcare analytics

Medisolv, a healthcare quality reporting software vendor, has built a custom chat app to navigate the complex regulatory environment of healthcare reporting. The app gives its client services and sales teams the most current, accurate information despite frequent regulatory changes. It digests and simplifies thousands of pages of annual regulatory documents, helping Medisolv maintain accuracy and efficiency and, in turn, simplifying the submission process for healthcare organizations.

Check out our customer stories to discover how enterprise teams are using WRITER Knowledge Graph to deliver transformative impact.

Strategic insights for CIOs

Graph-based RAG offers a way out of POC purgatory by simplifying complex decision-making, reducing operational costs, and improving customer interactions. By integrating relevant data into real-time decisions, it helps AI systems deliver superior outcomes and keeps applications aligned with strategic business objectives. For CIOs looking to lead in innovation and operational efficiency, this technology isn't just a tool but a transformative ally in scaling AI from prototype to powerhouse.

Get a practical roadmap for overcoming critical challenges in AI engineering for enterprises through a “full-stack” approach to generative AI: watch our on-demand webinar, “Escaping AI POC purgatory.”