AI in the enterprise

– 9 min read

Retrieval-augmented generation (RAG): What it is and why it’s a hot topic for enterprise AI

Just like GPS has revolutionized how we travel, retrieval-augmented generation (RAG) is transforming the way AI language models navigate the complex landscape of data, putting enterprise generative AI solutions within reach.

Large language models (LLMs), while powerful in their own right, are trained on a massive set of data gleaned from public repositories like Common Crawl, but they don’t contain the company-specific information that your employees or customers may be looking for. LLMs can struggle with tasks like answering company- or product-specific questions, generating industry- or brand-compliant content, and providing support in a service-agent role. LLMs are like navigation systems that can get you to a general area, but not a specific address. You may need to input more specific coordinates to get to the right place.

Enter retrieval-augmented generation (RAG). Think of it as AI’s trusty GPS system– it helps the model pinpoint the precise location of information and deliver it to an end user. With RAG, AI can generate contextually relevant insights, make informed decisions, and reach its destination efficiently.

Let’s explore what RAG is, and how it works. We’ll also discuss the WRITER approach to RAG and how our information retrieval solution, Knowledge Graph, empowers enterprise AI users to generate more accurate, contextualized insights.

- Retrieval-augmented generation (RAG) combines information retrieval with text generation, enhancing the quality and accuracy of AI-generated content.

- RAG addresses the limitations of large language models (LLMs) by fetching contextually relevant information from additional resources.

- The WRITER Knowledge Graph is a comprehensive platform that streamlines and optimizes the RAG process, offering improved performance, cost efficiency, security, and scalability.

- The WRITER Knowledge Graph approach enables businesses to maximize the benefits of RAG without the complexities and costs of building their own systems.

What’s retrieval-augmented generation (RAG)?

RAG is a natural language processing approach that combines information retrieval with text generation. In the enterprise, RAG plays a crucial role in implementing generative AI for various business tasks, including onboarding, question-answering, chatbots, content generation, document summarization, and service-agent support.

RAG’s magic lies in its ability to fetch contextually relevant information from additional resources, such as documents and databases, and weave it into human-like text. In simple terms, it empowers AI systems to tap into an organization’s own data and use that knowledge to enhance the quality and accuracy of the content they generate.

What sets RAG apart is its precision and reliability. When you ask a question, RAG doesn’t guess — it goes out and finds the right answers from the vast sea of organizational knowledge. It ensures that the responses it generates aren’t just contextually appropriate but also factually correct.

Imagine a chatbot that always knows the answers about your products or policies, a document summarizer that hits the mark, and a question-answering system that you can rely on. RAG elevates your AI systems, making them more precise and intelligent.

Key components of RAG

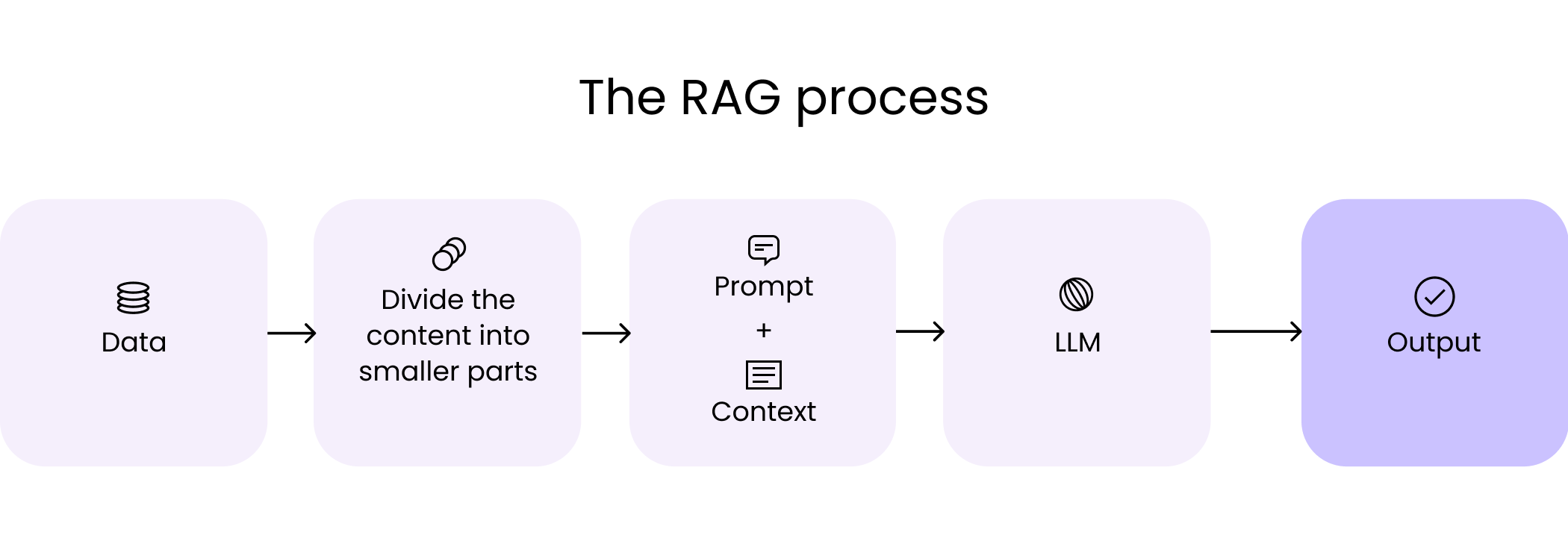

While LLMs excel at text generation, they often struggle with retrieving information accurately and may even hallucinate. RAG addresses these limitations by combining information retrieval and text generation components to create contextually relevant responses. Let’s explore a few components of the RAG process:

1. Information preprocessing

This step involves bringing all the relevant data into one place using data transfer software (ETL) and preparing it for the context integration process. Information preprocessing is analogous to cataloging in real-world libraries. This involves organizing information into categories and assigning keywords to each piece of information for easier retrieval and identification. This process helps to make data more accessible and easier to search for and understand.

2. Context integration

This step involves storing the organized data in a vector database or a suitable location, setting the stage for seamless integration into the text generation process. It involves creating a hierarchical structure based on relevant keywords or terms, which can then be used to locate relevant documents or texts quickly and easily. This can be compared to the process of shelving books in a library according to relevant topics or genres, which helps patrons quickly find the materials they need.

3. Text generation

Language models, such as Palmyra or GPT use the integrated context to generate high-quality and contextually appropriate text responses.

4. Post-processing and output

After the initial text generation, RAG’s work continues. The generated text may undergo post-processing, including filtering, summarization, and other transformations to meet specific requirements and quality standards. This step ensures the final output is refined and polished.

5. User interface/deployment

Finally, the result of RAG’s coordination is often delivered through a user interface or integrated into larger applications or services, such as chatbots or search engines. This is the interface where users interact with RAG, receiving contextually relevant and accurate information.

The WRITER Knowledge Graph approach: advancing RAG for enterprise use cases

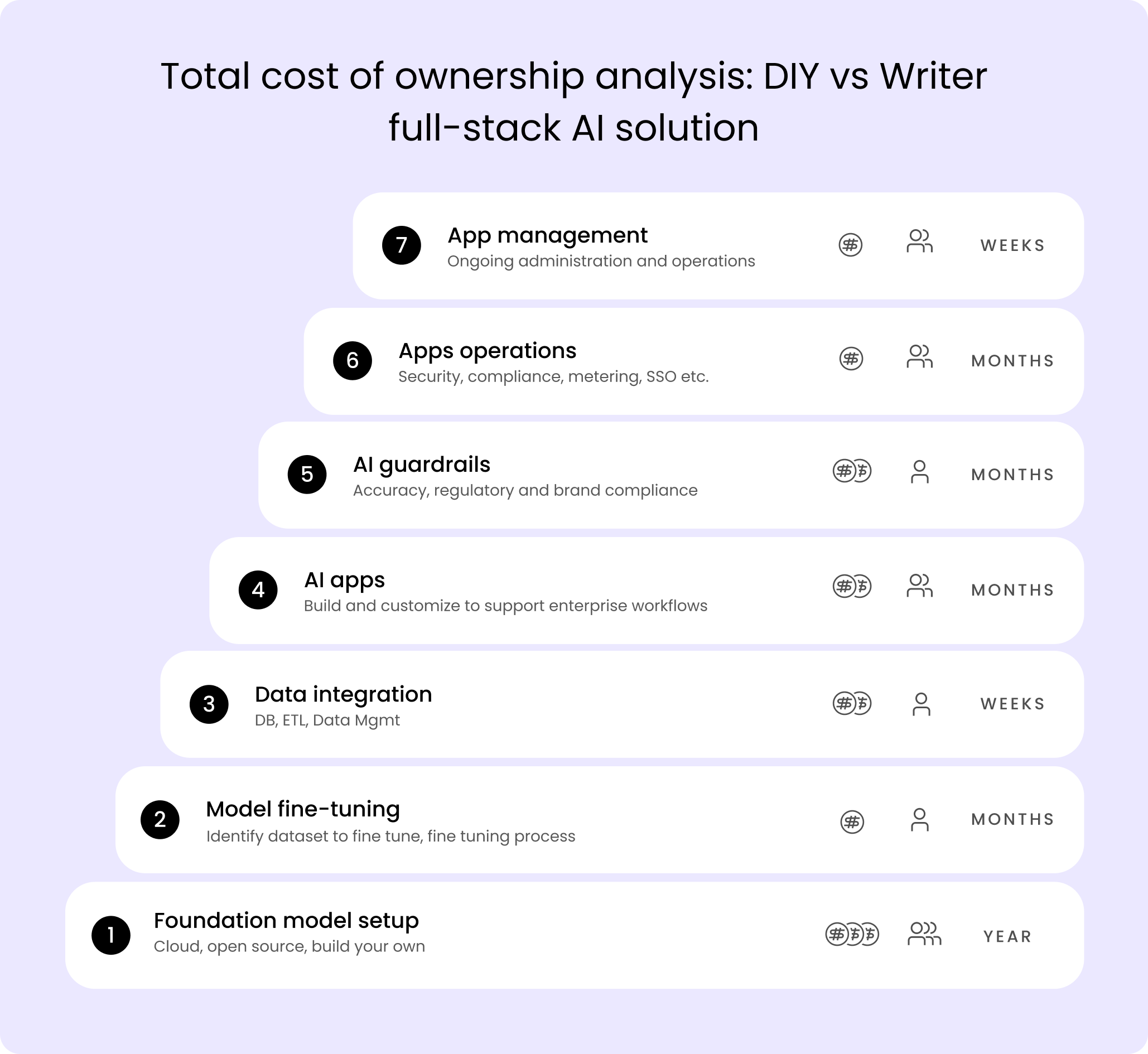

Implementing RAG in enterprise solutions can be a complex and costly endeavor, with potential security, privacy, and compliance risks. RAG implementations demand numerous components. These include data preprocessing to transform images, text, and videos into embeddable concepts, an embedding tool, a vector database like Pinecone, and a search algorithm (e.g., KNN or ANN).

Additionally, integration between a large language model (LLM) and the search algorithm/vector database is essential for efficient knowledge retrieval and generation. The costs associated with these components can be substantial, sometimes reaching millions of dollars, not to mention the expenses of scaling the vector database itself.

However, the WRITER Knowledge Graph offers a superior solution, particularly for advanced writing applications. Knowledge Graph is a comprehensive and integrated platform that combines the power of RAG with a robust knowledge graph infrastructure.

What is the Writer Knowledge Graph?

The WRITER Knowledge Graph plays a pivotal role in our approach to RAG. It connects to your most important data sources, such as your company wiki, cloud storage platform, public chat channels, product knowledge bases, and more, so WRITER has access to a source of truth for your company.

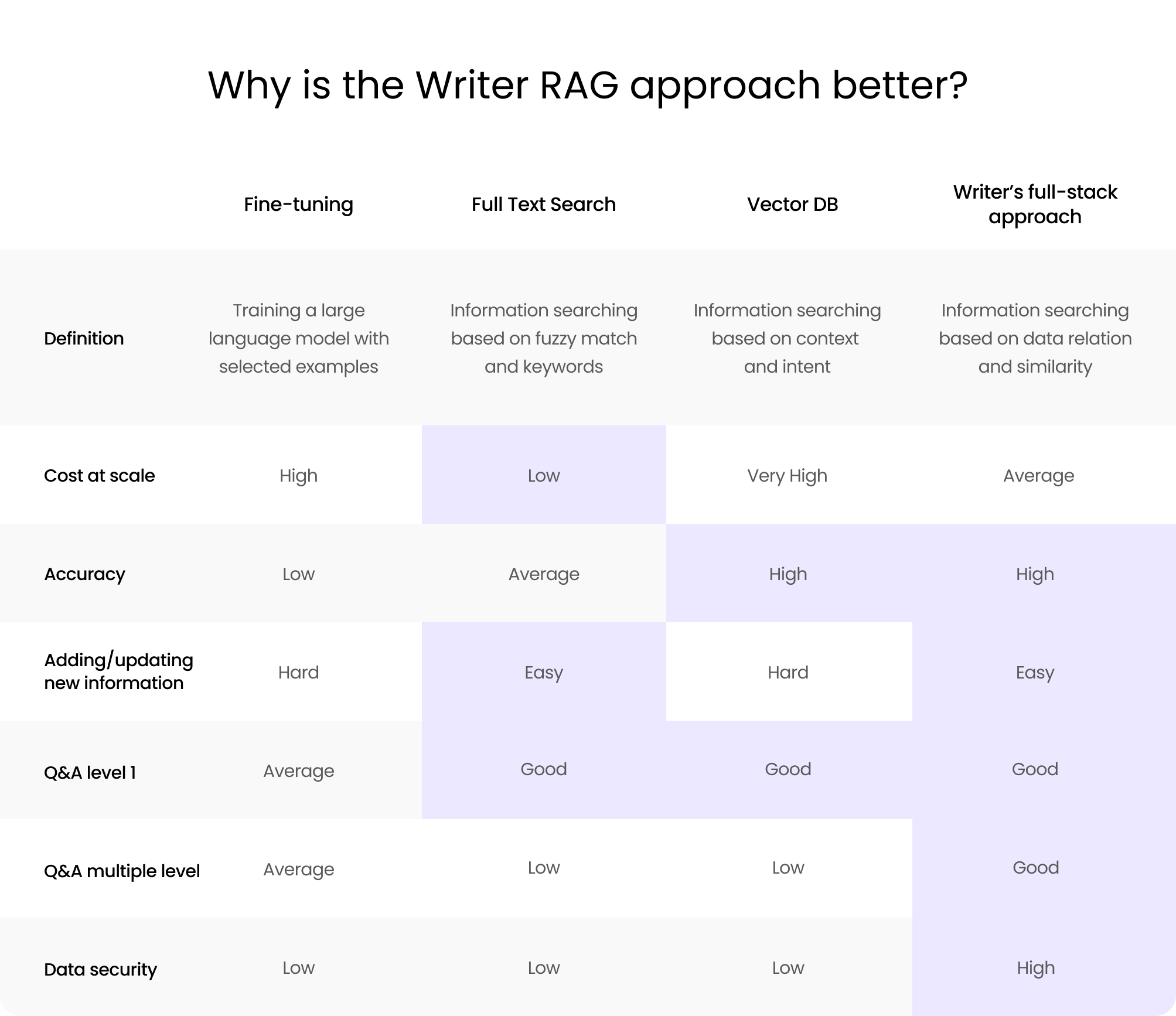

While RAG is an approach to solving business problems, the WRITER Knowledge Graph is WRITER’s specific implementation of RAG, offering a comprehensive and optimized solution. Unlike other implementations that require piecing together multiple software tools, WRITER takes a full-stack approach to RAG, streamlining the process and delivering superior results. WRITER Knowledge Graph uses the Retrieval Aware Compression (RAC) model, which enables multi-level question-and-answer interactions across a large corpus of documents. This means that users can easily pose complex queries to the Knowledge Graph, and it intelligently retrieves and presents relevant information from interconnected data sources. The RAC model ensures efficient and accurate retrieval, allowing users to access the most pertinent information quickly and effortlessly.

By providing a complete and integrated RAG solution, WRITER eliminates the need for businesses to invest significant time, effort, and resources in building their own RAG systems. With WRITER, businesses can achieve better results without the complexities and security concerns associated with piecemealing different software tools. It offers a streamlined and optimized approach to RAG, empowering users to solve business problems effectively and efficiently.

Why is the WRITER RAG approach better?

When it comes to integrating your internal datasets with the Large Language Model (LLM), WRITER offers a fully-packaged, secure, and cost-effective approach. Let’s explore the key advantages of the WRITER Knowledge Graph approach:

1. Improved performance

Knowledge Graph ensures enhanced performance by optimizing each component of our stack, ensuring efficient collaboration between different elements. This results in enhanced accuracy and contextually relevant content generation. Our fusion-in-decoder approach, for example, improves performance by combining the strengths of retrieval and generative models, resulting in advanced, open-domain question-answering performance.

2. Cost efficiency

Knowledge Graph significantly reduces the costs associated with implementing RAG. Instead of investing in multiple components and scaling a vector database, enterprises can leverage the integrated infrastructure of the WRITER Knowledge Graph, resulting in substantial cost savings. This cost efficiency makes it an attractive solution for organizations looking to maximize their return on investment.

3. Greater security and privacy

Keeping internal data within the WRITER Knowledge Graph enhances security and privacy. Unlike sending data to another vector database service, embedding service, additional LLM, ETL tools, etc, the WRITER Knowledge Graph ensures that sensitive information remains within your organization’s infrastructure. This approach minimizes the risks associated with data breaches and unauthorized access, providing peace of mind for enterprises handling confidential or proprietary data.

4. Simplified implementation

The WRITER Knowledge Graph streamlines the implementation process by providing a unified platform that incorporates all the necessary components for RAG. This eliminates the need for separate tools and databases, reducing complexity and saving time and resources.

5. Scalability and performance

The WRITER Knowledge Graph is designed to handle large-scale enterprise use cases. It offers scalability and high-performance capabilities, enabling efficient knowledge retrieval and generation even in complex and demanding environments. Unlike the industry-standard approach of a vector database, which we’ve found in our own testing doesn’t scale in terms of cost and performance, the WRITER Knowledge Graph leverages optimized algorithms and infrastructure to deliver exceptional scalability and performance. This ensures that your organization can seamlessly access and generate the information it needs, without compromising on speed or efficiency.

Navigating the data-driven future: RAG and WRITER Knowledge Graph

Retrieval-augmented generation isn’t just another buzzword in the world of enterprise AI — it’s redefining how businesses empower their people and customers with information. And in this era of information abundance, RAG stands as a testament to human ingenuity, guiding enterprise generative AI toward a future where knowledge is power, and the possibilities are boundless.

With the WRITER Knowledge Graph, enterprises can realize the benefits of RAG without the complexities and costs associated with DIY implementations. The WRITER Knowledge Graph empowers businesses to maximize creativity, productivity, and compliance, setting a new standard for enterprise use of generative AI.

Request a demo today and experience the transformative capabilities of WRITER in your enterprise.