What is grammar? Grammar definition and examples

Most people think of themselves as grammar rebels, seeing the rules as strict, basic and arbitrary. But grammar is actually complex, not to mention essential: Incorrect grammar can cause confusion and change the way you’re perceived (or even keep you from landing a job).

That’s why a grammar checker is essential if writing is part of your workday — even if that’s just sending emails. Here’s what else you should know about grammar:

What is grammar in English?

At a high level, the definition of grammar is a system of rules that allow us to structure sentences. It includes several aspects of the English language, like:

• Parts of speech (verbs, adjectives, nouns, adverbs, prepositions, conjunctions, modifiers, etc.)

• Clauses (e.g. independent, dependent, compound)

• Punctuation (like commas, semicolons, and periods, when applied to usage)

• Mechanics of language (like word order, semantics, and sentence structure)

Grammar’s wide scope can make proofreading difficult. And the dry, academic conversations that often revolve around it can make people’s eyes glaze over. But without these grammatical rules, chaos would ensue. So even if you aren’t a fan (and who really is?), it’s still important to understand.

Types of grammar (and theories)

As long as there have been rules of grammar, there have been theories about what makes it work and how to classify it. For example, American linguist Noam Chomsky posited the theory of universal grammar. It holds that all human languages share a common set of underlying rules.

In his view, humans have an innate knowledge of language that informs those rules. That, he reasoned, is why children can pick up on complex grammar without explicit knowledge of the rules. But grammarians still debate whether this theory holds true.

There are also prescriptive and descriptive grammar types:

• Prescriptive grammar is the set of rules people should follow when using the English language.

• Descriptive grammar is how we describe the way people are using language.

Another theory emerges from these types of English grammar: primacy of spoken language. It says language comes from the spoken word, not writing — so that’s where you’ll find answers to what’s grammatically correct. Though not everyone agrees with that theory, either.

How did grammar become what it is today?

Grammar has been in a constant state of evolution, starting with the creation of the first textbook on the subject in about 100 BC by the Greeks (termed the Greek grammatikē). The Romans later adapted the Greek model to create Latin grammar (or Latin grammatica), which spread across Europe and formed the basis for languages like Spanish and French. Eventually, Latin grammar became the basis of the English model in the 11th century. The rules of grammar (as well as etymology) changed with the times, from Middle English in the 15th century to what we know today.

Another consequence of grammatical changes has been the development of various areas of linguistic study, like phonology (how languages or dialects organize their sounds) and morphology (how words are formed and how their relationships work).

The ancient grammar rules have changed as people have tested alternative ways to use language. Authors, for example, have broken the rules with varying degrees of success:

- Shakespeare ended sentences with prepositions: “Fly to others that we know not of.”

- Jane Austen used double negatives: “When Mr. Collins said any thing of which his wife might reasonably be ashamed, which certainly was not unseldom, she involuntarily turned her eye on Charlotte.”

- William Faulkner started sentences with conjunctions: “But before the captain could answer, a major appeared from behind the guns.”

Cultural norms shape grammar rules, too. The Associated Press, for example, recognized “they” as a singular pronoun in 2017. But before that, English grammar teachers the world over broke out their red pens to change it to “he or she.”

Yes, American grammar has a longstanding tradition of change — borrowing words from other languages and testing out different forms of expression — which could explain why many find it confusing. Although most people no longer call early education “grammar school,” it’s still an important topic of study. And as more people have access to updated information about the subject, it’s become easier to follow the rules.

Four authors on grammar

If anyone appreciates the role of grammar, it’s writers:

• “Ill-fitting grammar are like ill-fitting shoes. You can get used to it for a bit, but then one day your toes fall off and you can’t walk to the bathroom.” – novelist Jasper Fforde

• “The greater part of the world’s troubles are due to questions of grammar.” – philosopher Michel de Montaigne

• “And all dared to brave unknown terrors, to do mighty deeds, to boldly split infinitives that no man had split before — and thus was the Empire forged.” – novelist Douglas Adams

• “Grammar is a piano I play by ear. All I know about grammar is its power.” – American writer Joan Didion

Six examples of grammar rules

Here are six common grammar mistakes (and example sentences) to help you improve your writing:

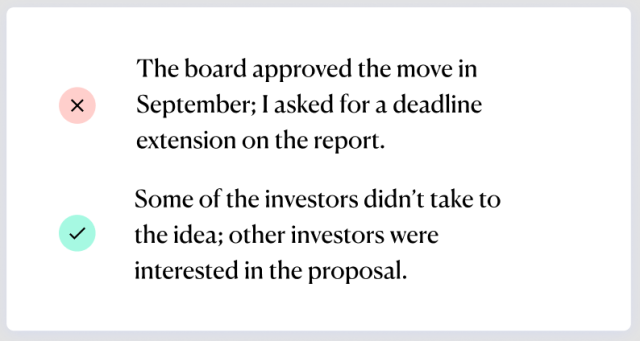

Semicolon use: Semicolons typically connect closely related independent clauses, but often a new sentence (instead of a semicolon) is more fitting.

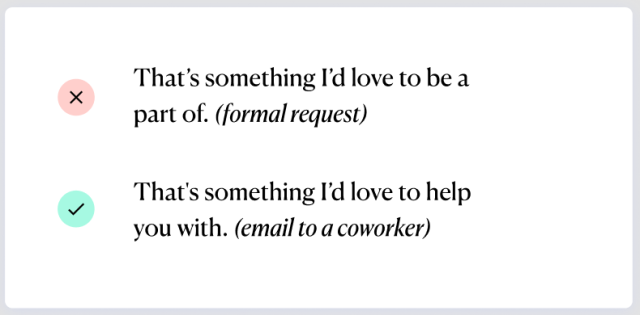

Ending a sentence with a preposition: Some used to consider it wrong to end with a preposition (e.g. to, of, with, at, from), but now it’s acceptable in most informal contexts.

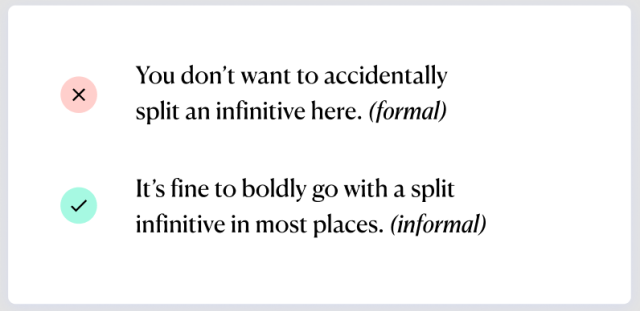

Splitting infinitives: Avoid it in formal settings; otherwise, it’s fine.

Beginning a sentence with because: It’s ok as long as the sentence is complete.

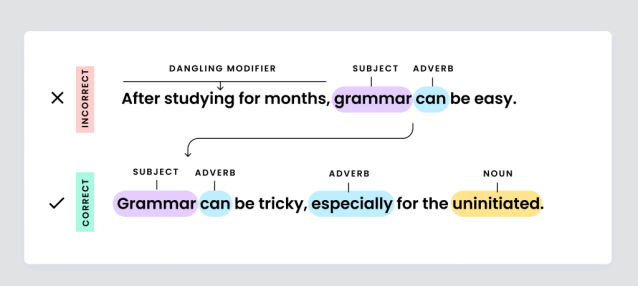

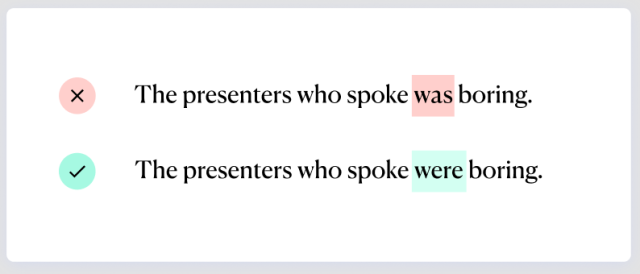

Subject-verb agreement: The verb of a sentence should agree with its subject in number (singular or plural).

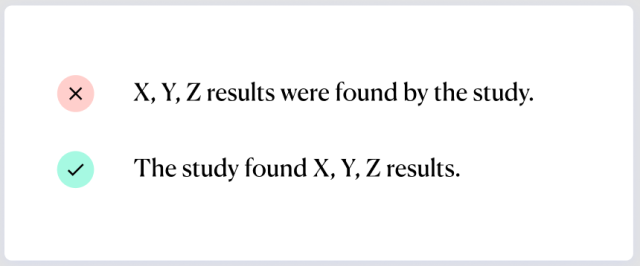

Passive voice: In general, use active voice, in which the subject performs the action of the verb (“The editor fixed the typo”). In passive voice, the subject receives the action (“The typo was fixed by the editor”), which often results in a weaker sentence.

Grammar FAQ

What’s the difference between grammar and syntax?

Syntax is the way we arrange words and phrases, and the rules that apply to sentence structure. So it falls under the grammar umbrella, but is not the same thing.

Is it ever ok to break grammar rules?

Sometimes. Grammar rules change over time and vary based on context (like following AP style vs. the Chicago Manual of Style). But for most writers, it’s best to stick to the rules.

Do you have to be a grammar expert to use English correctly?

Not necessarily. You want to know enough to use English properly in common situations. Then you can supplement your knowledge with grammar tools.

What are some resources for learning grammar?

Books on grammar, like Strunk and White’s The Elements of Style and The Only Grammar Book You’ll Ever Need, can help you get your linguistic sea legs. But knowing the rules doesn’t guarantee you’ll be 100% correct all the time. That’s why a grammar checker is important for writing-related work.

How do you correct grammar?

Knowing the rules is a good start. But to get the best results, make sure your drafts get another set of eyes on them. That could be an editor, a knowledgeable coworker, or an AI writing platform.