
What’s full-stack generative AI?

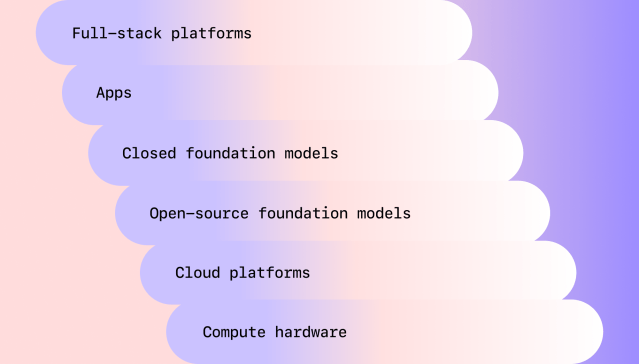

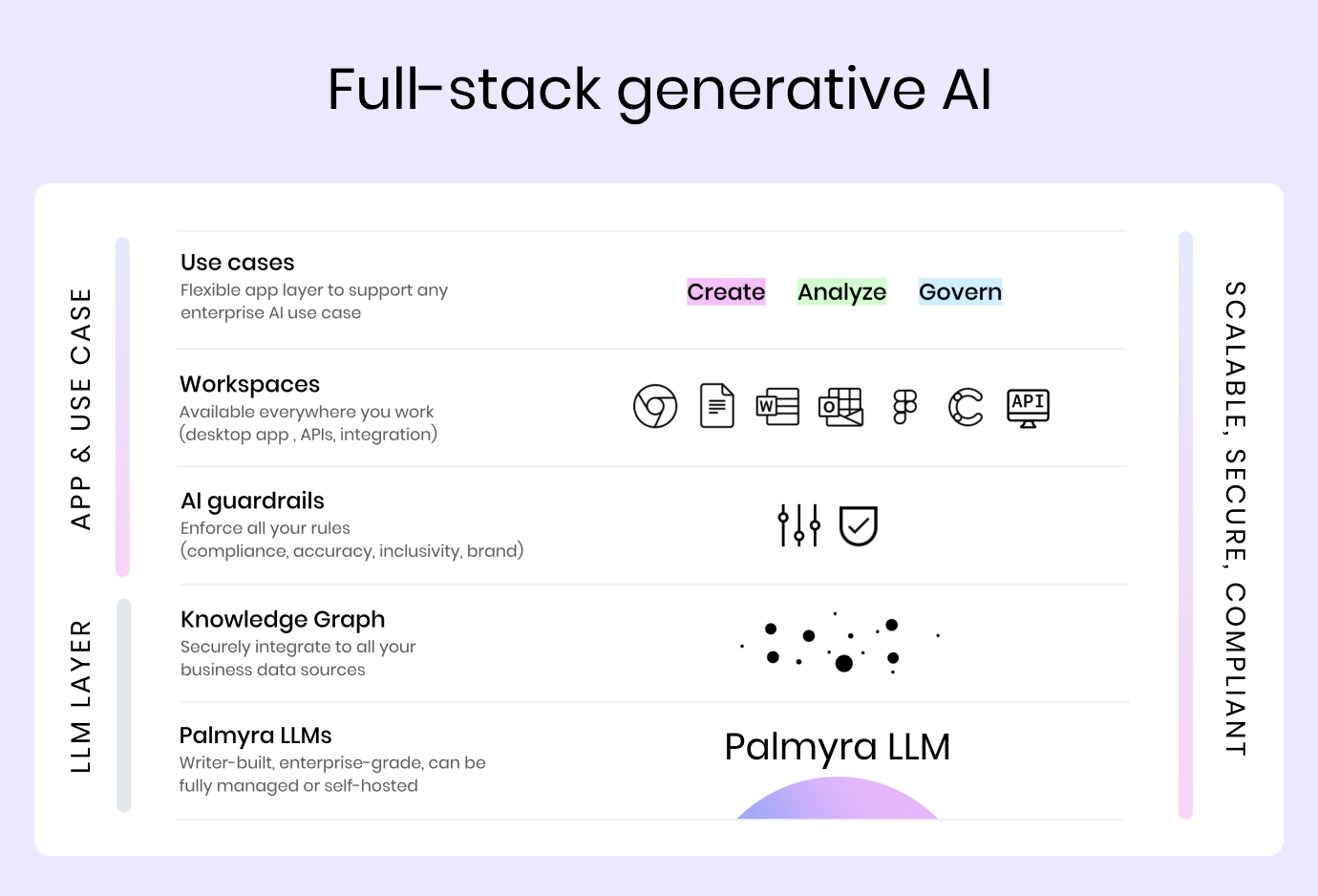

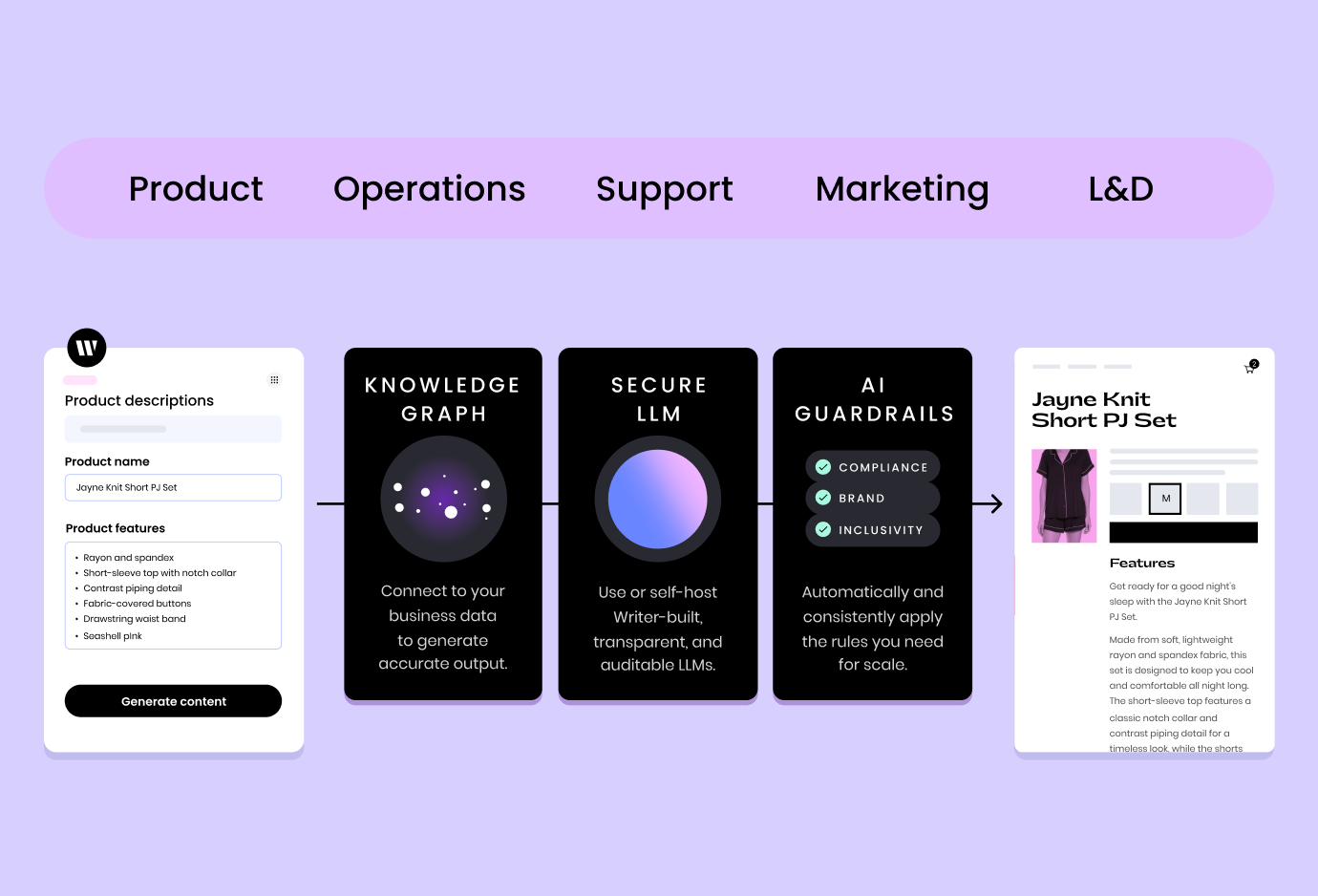

In the rapidly evolving landscape of generative AI for the enterprise, a dominant design is emerging: a powerful large language model (LLM) coupled with knowledge retrieval capabilities and AI guardrails. This design, which we’re calling full-stack generative AI, is paving the way for more efficient and responsible AI-powered solutions.

- Generative AI technology uses a Large Language Model (LLM) as the central engine driving output, along with a supporting tech stack that makes it enterprise-ready.

- An enterprise-ready LLM needs to be tailored for business-specific outputs, with training data curated to avoid biases and toxic output.

- A dominant design has emerged for enterprise generative AI, and that’s an LLM coupled closely with knowledge retrieval and AI guardrails.

- A full-stack generative AI platform consists of an LLM, a way to supply business data, AI guardrails, an interface for easy use, and the ability to ensure security, privacy, and governance.

- The Palmyra family of LLMs, built by Writer, is pre-trained on business-appropriate datasets, geared towards enterprise use cases, and efficient in size yet powerful in capabilities.

- Writer also offers full transparency, AI governance, and knowledge access so teams can work with company data.

To create an enterprise-grade, full-stack AI platform, you need five essential elements:

- A way to generate language (i.e., a large language model)

- A way to supply business data

- A way to apply AI guardrails

- An interface you can easily use

- And the ability to ensure security, privacy, and governance for all of it

When these pieces come together, you can use AI to accelerate your growth, increase your team’s productivity, and more effectively govern the words your company puts out into the world.

Let’s examine each of these five elements and the key characteristics you should consider when evaluating AI platforms.

Language generation with a Large Language Model

In the simplest sense, the Large Language Model (LLM) is the part of your AI tech stack that generates language.

An LLM uses machine learning (ML) algorithms trained on massive datasets to recognize the patterns and structure of language, and applies natural language processing (NLP) to generate natural-sounding text.

Customizable to business needs

Not all LLMs are created equal. While many LLMs might be able to achieve human-like output for the average consumer, some don’t measure up when it comes to meeting the needs of enterprise companies.

An enterprise-ready LLM needs to be tailored for business-specific outputs. Its training dataset should be curated to avoid issues with “garbage in, garbage out” – and that includes mitigating inherent biases and toxic output.

Highly regulated sectors, like healthcare and financial services, have even more industry-specific language and compliance requirements. Your LLM should be adaptable to those needs.

All of the above requirements go into pre-training an enterprise-ready foundation model. To realize its full value for your business, an LLM will also need to be fine-tuned on your company’s data and content.

Accurate

Your LLM should produce reliably accurate output. Most LLMs have occasional “hallucinations”, where the model makes up information or invents data out of nowhere. That doesn’t work for enterprises that need their materials to be factual and accurate.

AI outputs must still be checked by humans, but you can reduce the risk of hallucinations by integrating your business data and by automating claim detection and fact-checking after the output is generated. We’ll cover more of this when we discuss the guardrails for full-stack generative AI.

Powerful, efficient, and transparent

You need an LLM that’s powerful enough to process huge quantities of data. It needs to be capable enough to complete the tasks you need, like answering questions and understanding and following new prompts. And you need an LLM that performs well on recognized industry benchmarks, like Stanford’s Holistic Evaluation of Language Models (HELM).

But bigger isn’t always better. Larger models (like those built by OpenAI) are more expensive to maintain, more difficult to update, and slower to respond. There’s a tradeoff between the number of parameters and some aspects of performance. Some experts have also observed that the performance of some large models has declined over time, “highlighting the need for continuous monitoring of LLMs.”

Speaking of monitoring: for enterprise use, your LLM needs to be auditable rather than a black box. That allows your engineering team to inspect the code, data, and model weights you’re using, so you have full control over your own tools.

The Writer solution: Palmyra LLMs

Writer built the Palmyra family of LLMs, pre-trained on business-appropriate datasets, and geared towards enterprise use cases. We don’t share or use customer data in model training.

Writer meticulously curates its training data, which is often sourced from a variety of platforms like websites, books, and Wikipedia. Before the data is used for training, Writer applies text preprocessing techniques such as removing sensitive and copyrighted content and flagging potential hotspots for bias.

Palmyra LLMs are efficient in size and powerful in capabilities, making them a scalable solution for enterprises. Despite being a fraction of the size of larger LLMs, Palmyra has a proven track record of delivering superior results, including top scores on Stanford HELM for accuracy, robustness, and fairness.

We also fine-tune our models for industry-specific use cases. Palmyra-Med is a model built by Writer specifically to meet the needs of the healthcare industry. It earned top marks on PubMedQA, the leading benchmark on biomedical question answering, with an accuracy score of 81.1%, outperforming GPT-4 base and a medically trained human test-taker.

And because we invested in our own models, we offer full transparency, giving you the ability to inspect our model code, training data, and model weights.

Safe access to your business data

On their own, LLMs have a limited understanding of your company. They’re pre-trained on a vast corpus of data gleaned from public sources, so any facts about your company are restricted to what an LLM “learns” from its training dataset.

To generate accurate, effective output for your business needs, you need to connect your AI system to a source of information about your product, audience, business, and branding. Integrating your LLM with your company data enables it to retrieve information when and where your employees need it, such as product details, policy information, and brand terminology.
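To illustrate what “connecting your AI system to a source of information” can look like, here is a minimal sketch of retrieval-augmented prompting. It assumes a small in-memory list of company documents and a naive word-overlap score standing in for the embedding-based search a production system would more likely use. The document texts, the “AcmeCloud” brand, and the `retrieve` and `build_prompt` helpers are all hypothetical, and this is not a description of how Writer’s Knowledge Graph works internally.

```python
import re

# Hypothetical snippets of company knowledge (illustrative only).
DOCUMENTS = [
    "Our product integrates with Slack, Google Drive, and SharePoint.",
    "Refunds are available within 30 days of purchase.",
    "Always write the brand name as AcmeCloud, never Acme Cloud.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query (naive scoring)."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: list[str]) -> str:
    """Put the retrieved context ahead of the question so the LLM can ground its answer."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

print(build_prompt("What integrations does our product support?", DOCUMENTS))
```

In practice the assembled prompt would be sent to the LLM rather than printed, so the model answers from your company’s own facts instead of only what it learned in pre-training.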

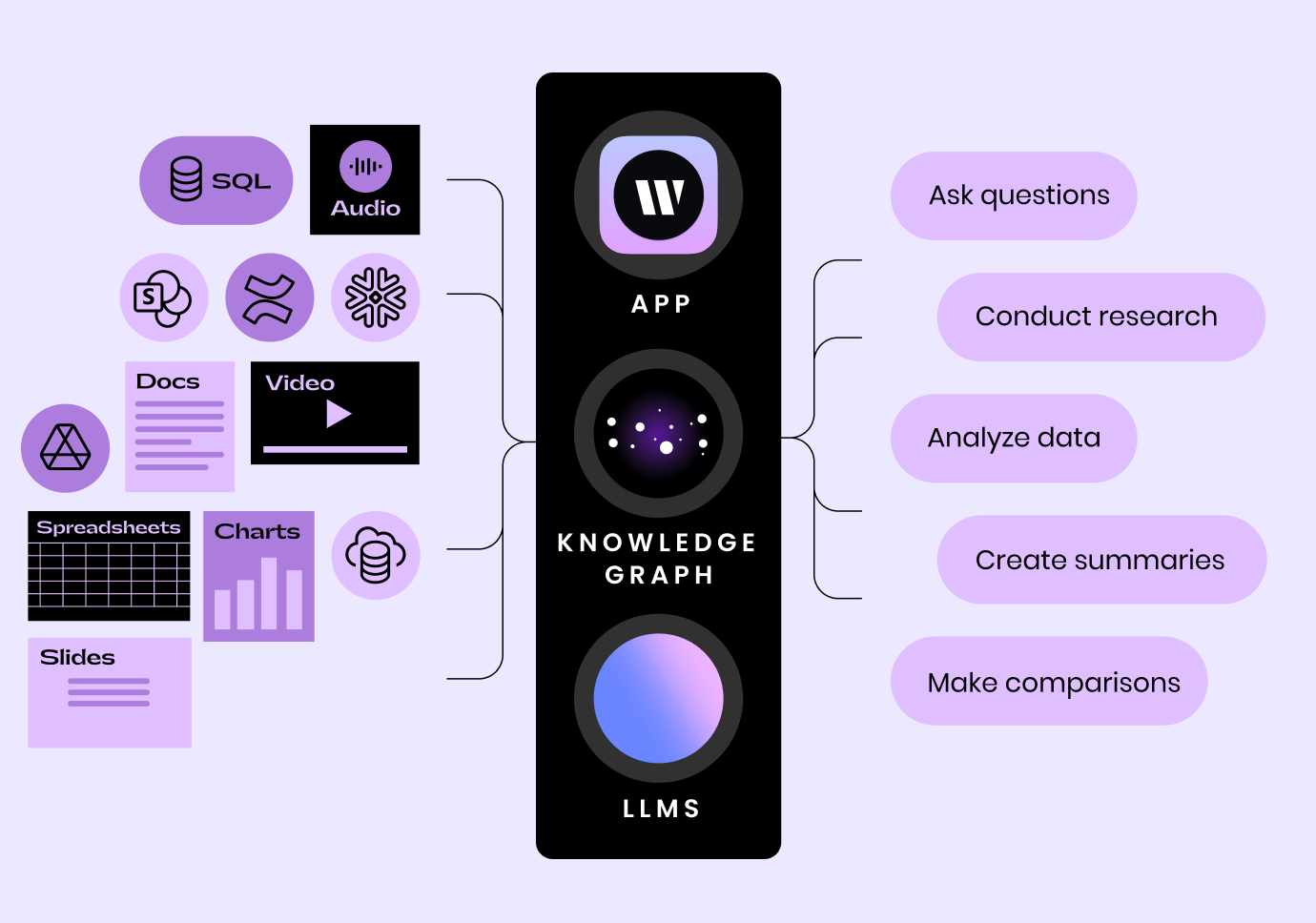

Connect with multimodal data sources

A business-ready AI platform should be able to connect to all of your most important data sources. It should retrieve and synthesize data from your company wiki, cloud storage platform, public chat channels, email communications, and product knowledge bases.

Your tools should parse text from all the file types that store company information, like Word documents, PDFs, PowerPoints, CSVs, and even video and audio files. They should also connect with the apps your team uses. Important integrations could include SharePoint, Google Drive, GitHub, MongoDB, Snowflake, S3, and Slack.

Knowledge access for your team

Your team also needs access to your business data as part of their day-to-day work. An AI tool can be even more useful when it integrates directly with your data sources because your team can then use your LLM to conduct research, ask questions, and fact-check their output.

Team members can use it to quickly look up a question like “What integrations does our product support?” or get an analysis like “How has our customer satisfaction changed over the last two quarters?” They’ll type that prompt into your LLM and it’ll provide that information directly from your company’s data.

The Writer solution: Knowledge Graph

Our solution to this is Knowledge Graph (KG), a layer we built above our LLM that uses retrieval technology to connect to your business data. It indexes your business data but doesn’t store it, because protecting your data is our top priority.

The ability to apply AI guardrails and oversight

AI output can be advantageous for a wide range of applications; however, it needs to be in line with your business’s legal, regulatory, and brand guidelines. If AI output doesn’t follow your company’s rules, it could create legal issues or damage your brand reputation. An enterprise-ready platform should make it easy for you to govern output across your entire company.

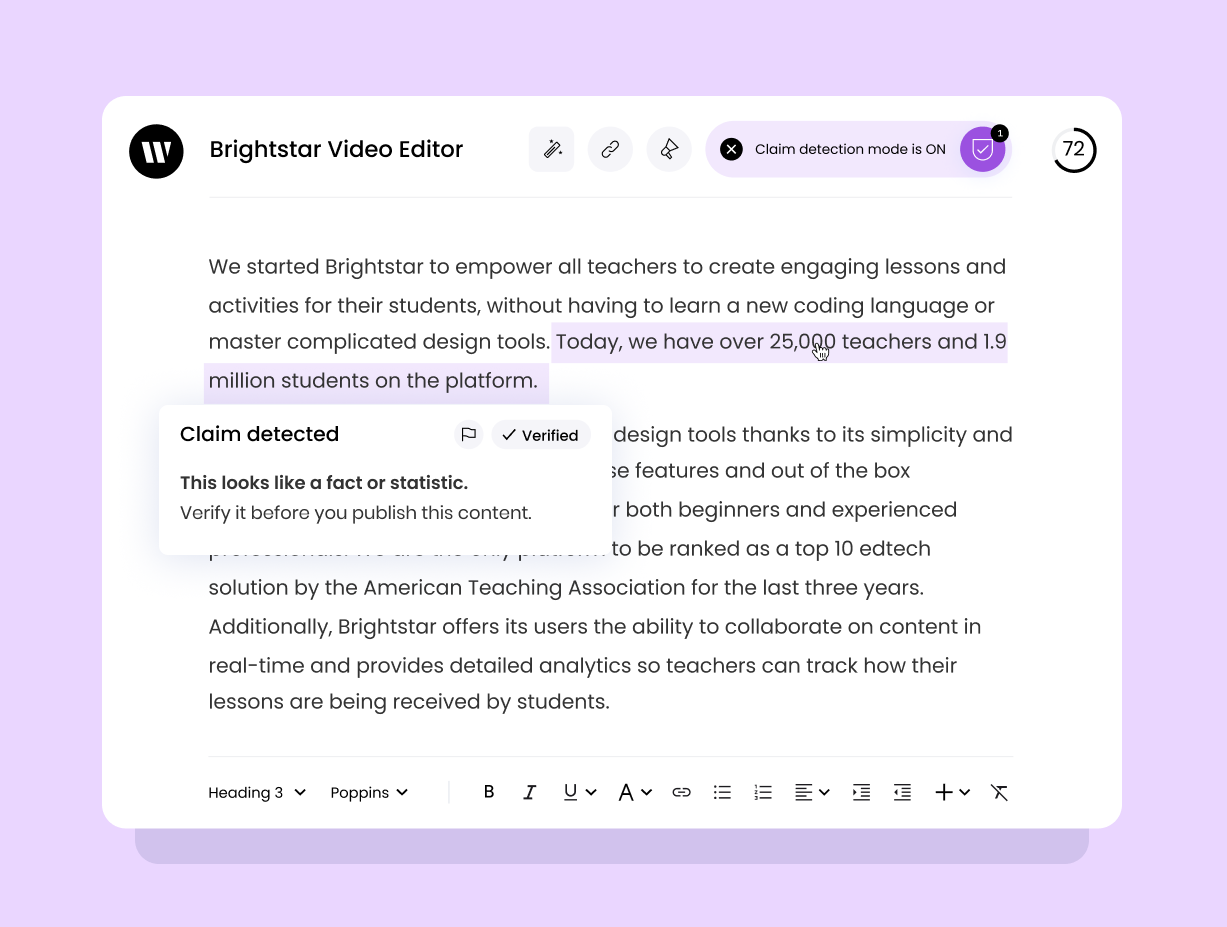

Plus, human operators will need to fact-check any AI outputs to mitigate the risk of AI hallucinations. Using machine learning capabilities to automate tasks like fact-checking and editing can help speed up the process while helping ensure materials are factually accurate and compliant.

A full-stack AI solution should be able to cross-check outputs against internal data and flag anything that seems misaligned. For instance, if you have specific legal or regulatory rules that apply to your industry, your system can flag statements that may not be compliant so the end user can manually verify and correct them.
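A rule-based version of this kind of flagging can be sketched in a few lines. This minimal example assumes compliance rules are written as regular expressions paired with a reason; the phrases, the financial-services framing, and the `flag_statements` helper are hypothetical. Real claim detection would usually combine simple rules like these with model-based checks against internal data, and the final decision stays with a human reviewer.

```python
import re
from dataclasses import dataclass

@dataclass
class Flag:
    sentence: str  # the sentence that triggered the rule
    reason: str    # guidance shown to the reviewer

# Hypothetical compliance rules for a financial-services team: regex -> reason.
RULES = {
    r"\bguarantee[ds]?\b": "Avoid promising guaranteed outcomes.",
    r"\brisk[- ]free\b": "Investments cannot be described as risk-free.",
}

def flag_statements(text: str) -> list[Flag]:
    """Split text into sentences and flag each sentence that matches a rule.

    Flags are suggestions for a human reviewer; nothing is rewritten automatically.
    """
    found = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        for pattern, reason in RULES.items():
            if re.search(pattern, sentence, flags=re.IGNORECASE):
                found.append(Flag(sentence, reason))
    return found

draft = "Our fund offers guaranteed returns. Past performance varies. It is a risk-free choice."
for flag in flag_statements(draft):
    print(f"{flag.reason} -> {flag.sentence}")
```

Keeping the output as flags rather than automatic rewrites matches the workflow described above: the system surfaces possible issues, and the end user verifies and corrects them.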

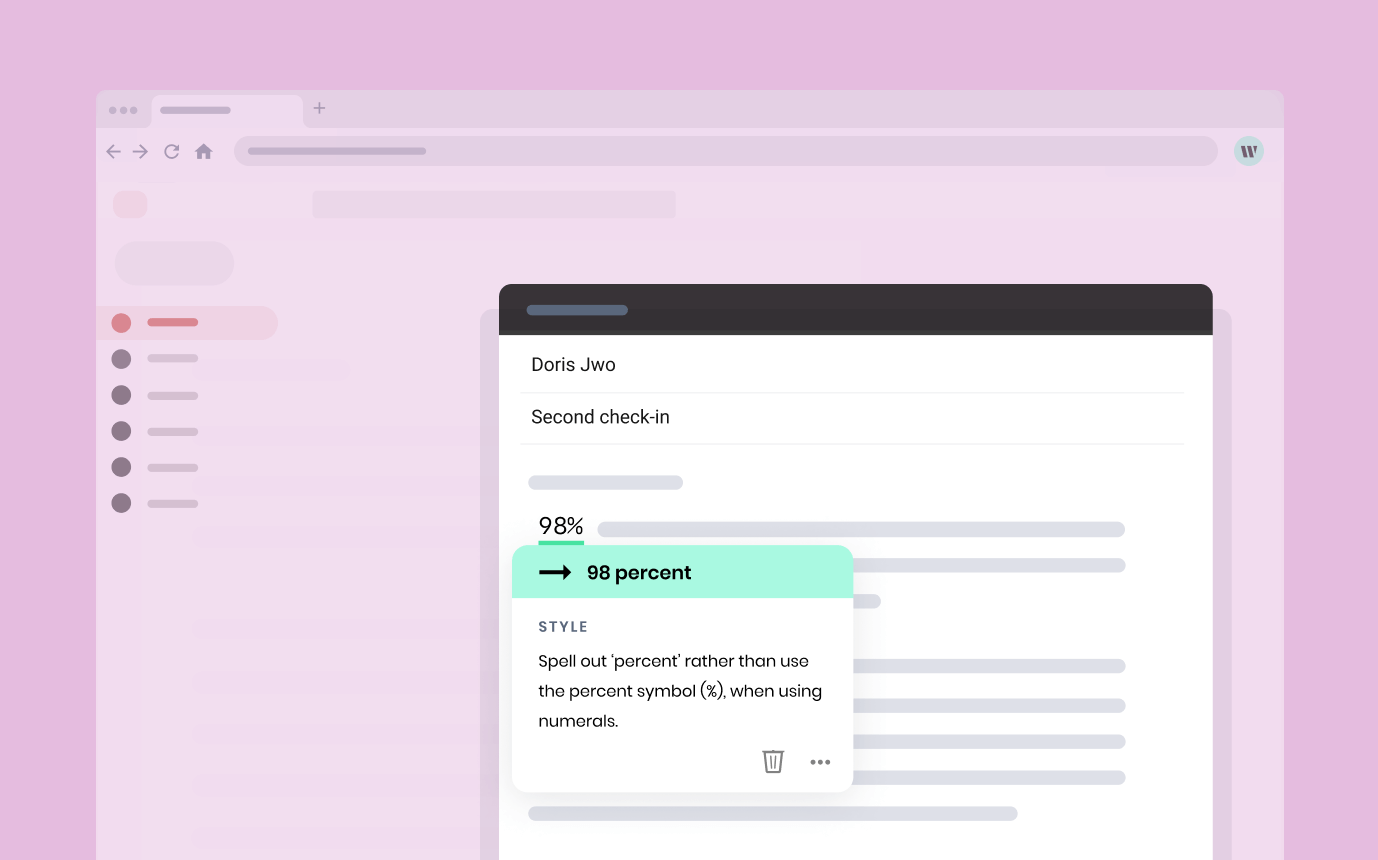

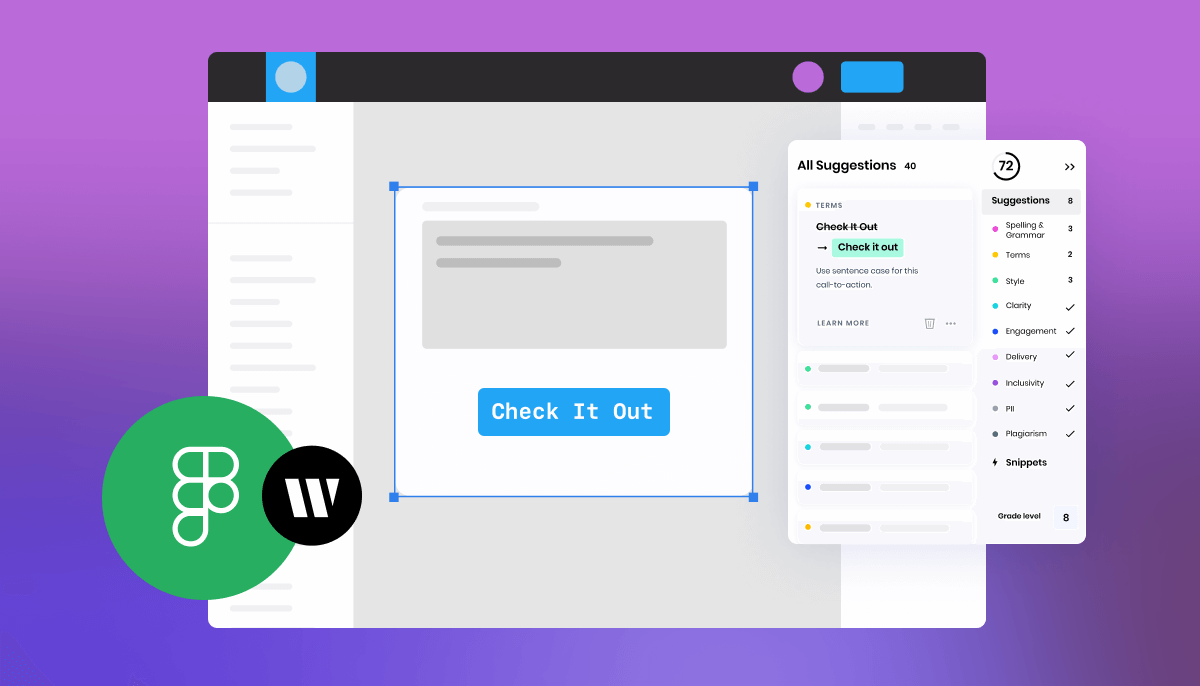

The Writer solution: suggestions, terms, claim detection, and style guide

Writer automatically enforces your AI guardrails with its style guide, terms, and snippets features to make sure everyone’s work is compliant, accurate, inclusive, and on-brand. It provides in-line suggestions to help your team produce consistently accurate and compliant work.

You can provide easy-to-follow guidance for punctuation and capitalization, encourage unbiased, inclusive language, and even enforce clarity and reading grade-level requirements. In-line suggestions will provide written guidance, examples, and alternative terms, making adherence easy for everyone.

You can also use a terminology bank that provides a single source of truth for brand terms. A terminology bank helps you manage, enforce, and share the language you want to use for your brand for both internal and customer-facing materials.
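At its simplest, a terminology bank is a mapping from discouraged terms to preferred ones, checked against each draft. The sketch below assumes a case-insensitive, whole-word dictionary lookup; the terms, the “AcmeCloud” brand, and the `suggest_terms` helper are hypothetical and don’t reflect how Writer’s terms feature is implemented.

```python
import re

# Hypothetical terminology bank: discouraged term -> preferred term.
TERM_BANK = {
    "acme cloud": "AcmeCloud",
    "e-mail": "email",
    "whitelist": "allowlist",
}

def suggest_terms(text: str) -> list[tuple[str, str]]:
    """Return (found, preferred) pairs for each discouraged term in the text."""
    suggestions = []
    for term, preferred in TERM_BANK.items():
        # Whole-word, case-insensitive match so "Acme Cloud" and "acme cloud" both hit.
        pattern = r"\b" + re.escape(term) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            suggestions.append((match.group(0), preferred))
    return suggestions

print(suggest_terms("Add the domain to the whitelist in Acme Cloud."))
# → [('Acme Cloud', 'AcmeCloud'), ('whitelist', 'allowlist')]
```

Because the bank is plain data, it can serve as the single source of truth the paragraph describes: the same mapping can drive in-line suggestions, internal reviews, and customer-facing checks.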

A flexible and easy-to-use user interface

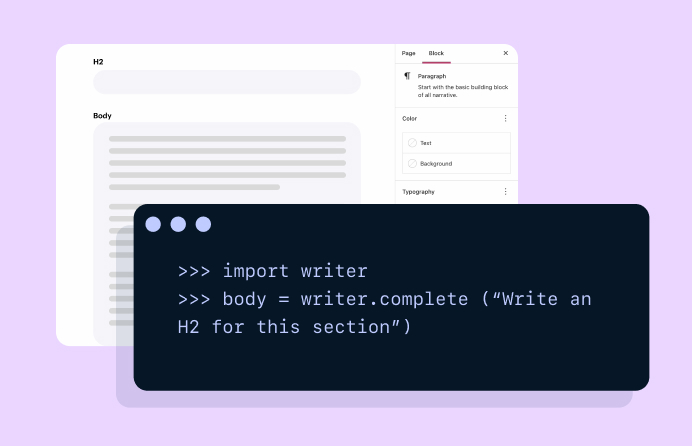

Using generative AI in a single app or closed ecosystem can’t support the needs of the entire organization. A true AI platform must embed easily into an organization’s processes, tool ecosystems, and the applications your teams use at work. Ideally, your application layer should offer a variety of plugins, desktop applications, and a robust API.

You need a user interface for your AI stack so all your employees can easily take advantage of its capabilities. It should be intuitive enough that everyone in the organization, regardless of skill level, can use generative AI in their work.

Teams across your organization, including IT, support, sales, marketing, communications, PR, product, and design, should all be able to use AI to deliver powerful business outcomes specific to their function.

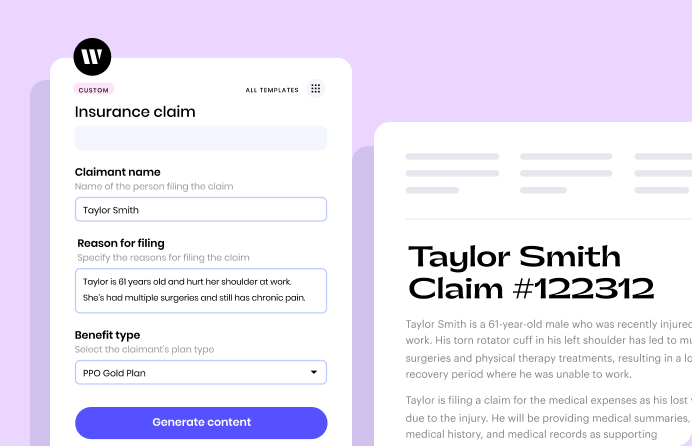

To guarantee consistency in repetitive, structured outputs, you’ll need templates that span a wide range of your common use cases. Writer apps help teams use AI more efficiently because they don’t have to engineer or manually enter prompts each time. And since bespoke use cases come up, you should also have a way to quickly build templates that fit your specific needs.
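One way to picture such a template is a prompt with named placeholders that a user fills in through a form instead of writing the prompt from scratch. The sketch below uses Python’s standard-library `string.Template`; the template wording, the field names, and the `render` helper are hypothetical examples, not Writer’s app format.

```python
from string import Template

# Hypothetical reusable prompt template for a product-announcement use case.
ANNOUNCEMENT = Template(
    "Write a $length announcement of $product for $audience. "
    "Highlight these features: $features. Use a $tone tone."
)

def render(template: Template, **fields: str) -> str:
    """Fill every placeholder; substitute() raises KeyError if a field is missing."""
    return template.substitute(**fields)

prompt = render(
    ANNOUNCEMENT,
    length="100-word",
    product="AcmeCloud 2.0",
    audience="IT administrators",
    features="SSO support, audit logs",
    tone="friendly",
)
print(prompt)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing field fails loudly instead of sending an incomplete prompt to the model, which helps keep structured outputs consistent.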

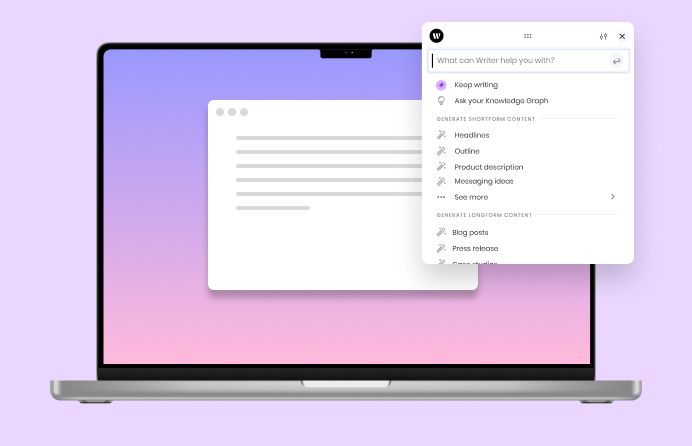

The Writer solution: composable UI options, customization, embeddable API

Writer offers an easy-to-adopt user interface with many customization options that help you effortlessly use generative AI in all of your business workflows. We offer thoughtful UI options, a variety of plugins, desktop applications, and a robust set of APIs, so you can embed Writer into your team’s processes and applications.

Integrating AI into your business applications can improve adoption, ease of use, and capabilities, while creating a more collaborative work environment.

In addition to prebuilt apps that span a broad spectrum of popular use cases, you can use composable UI options to create custom apps highly tailored to your use cases.

Security, privacy, and governance features

The ability to protect the security and privacy of your company’s data is a non-negotiable requirement for an enterprise-grade tech stack. So is the ability to make sure you and your team are always compliant with legal and regulatory requirements.

AI technology can carry real risks, especially if you use the wrong tools. Some generative AI tools (like ChatGPT) reserve the right to store and use an organization’s data. Regulated companies or those with sensitive corporate, employee, or customer data require a platform with a private, secure model, and compliance with privacy regulations. Your generative AI tech stack needs to ensure your data privacy, security, and compliance at every level.

Make sure you’re familiar with the terms of service and technical specifications of any tool that you add to your AI stack. Enterprises should use AI tools, like Writer, that don’t retain their data or use it to train their models.

Additionally, look for tools that help you meet your compliance obligations under frameworks and regulations such as SOC 2 Type II, HIPAA, PCI, GDPR, and CCPA. Enterprise-grade platforms should also provide secure access, admin, and reporting capabilities so you can control who uses your AI tools and how. Look for features like SSO, role-based permissions, multi-factor authentication, and activity audits and reports.

The Writer solution: unparalleled data protection

Our platform supports your data privacy and security requirements, and even provides features for data loss protection and global compliance detection. We’re SOC 2 Type II, HIPAA, PCI, GDPR, and Privacy Shield compliant.

We offer all the centralized admin, access control, and reporting capabilities you’d need for an enterprise roll-out. Our dedicated and experienced success team is here to support your onboarding, implementation, and ongoing AI program management.

A full-stack solution designed for enterprise

Enterprises need to make sure the tools they use can meet the demands of their business. You need more from an AI tool than just the ability to generate language. A full-stack platform makes your use of generative AI more effective and helps you use it in a way that doesn’t put your business’s data at risk.

When you adopt the Writer platform for your business, you join major companies across sectors who are accelerating their growth, increasing productivity, and aligning their brand:

- A cybersecurity firm reduced technical writing quality assurance time by 50%

- A financial services company standardized core messaging, voice, and inclusive writing across all their divisions and employees

- A healthcare company saved over 96 hours per person and over $100K in resources.

Want your company to be in such company? Get started with a demo today.