Generative AI: risks and countermeasures for businesses

It’s no secret that embracing new technology, like generative artificial intelligence and AI systems, can give your business a huge leg up on the competition. By getting in on the ground floor of emerging tech, you can quickly refine processes, get to know your customers better, and even create products your competitors haven’t had time to develop yet.

But that doesn’t mean you should rush into things without a plan. As with any new technology, AI adoption carries potential risks that organizations need to take into account. Understanding what responsible AI can and can’t do is crucial before implementation, and businesses must carefully weigh both the benefits and the risks these systems pose.

Let’s go over the biggest concerns about generative AI systems for business use and the safeguards you can put in place to protect your company, your brand, and your customers.

- Generative AI can give businesses a competitive edge, improving efficiency and innovation, but it comes with significant risks.

- Risks include “AI hallucinations,” data security/privacy vulnerabilities, copyright challenges, compliance issues, and bias in outputs.

- Countermeasures include fact-checking, claim detection tools, curated datasets, enterprise-grade security features, and custom AI training.

- Investing in an enterprise-grade AI platform with secure storage, customizable features, and adherence to global privacy standards is essential for safe adoption.

- Teams need to prioritize brand and security compliance, receive proper training, and maintain human oversight to ensure ethical and effective use of AI tools.

The “plausible BS”, aka “AI hallucinations” risk

Generative AI tools are all the rage right now because they’re so good at sounding human, but this capability can be a double-edged sword. These models are designed to mimic and predict the patterns of human language, not to determine or check the accuracy of their output. That means generative AI can occasionally make stuff up in a way that sounds plausible — a behavior known as AI hallucinations.

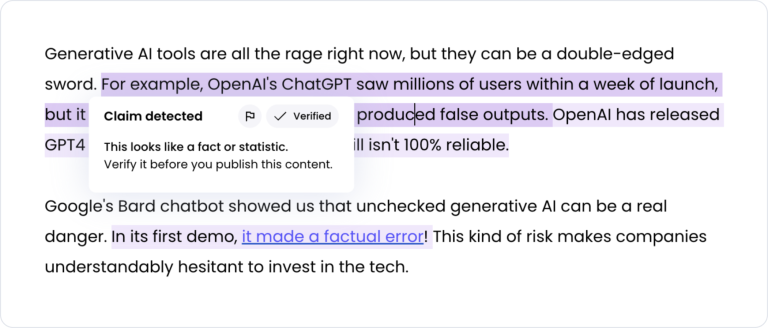

Take OpenAI’s ChatGPT, for example. It reached a million users within a week of launch but also made headlines for generating false outputs. OpenAI claims its latest large language model (LLM), GPT-4, reduces hallucinations, but it still isn’t 100% reliable. Google’s Bard chatbot had its own public stumble when it made a factual error during its first demo.

The risks go beyond just being embarrassing. By 2023, analysts estimated that chatbots hallucinate as much as 27% of the time, with factual errors in 46% of generative AI output.

The stakes get even higher in the medical field. An AI system providing information about common ophthalmic diseases was found to generate incomplete, incorrect, and potentially harmful details. For healthcare providers, relying on AI outputs like these could pose serious risks to patient safety.

Even in the legal sector, AI hallucinations can cause chaos. In May 2023, an attorney used ChatGPT to draft a legal motion, only to find that it included fabricated judicial opinions and citations.

It’s not hard to see why businesses in industries like healthcare, law, or finance would hesitate to invest in such tools without proper guardrails.

The countermeasures: fact-checking, claim detection, and curated datasets

Here’s the thing: you can avoid these kinds of mishaps by doubling down on fact-checking and vetting the kind of data your AI tool is trained on. Regularly reviewing generative AI outputs is crucial to ensure accuracy and compliance.

One of the first rules in the age of AI is to fact-check all content before it goes to publication — human-written or AI-generated. And there are already AI-powered tools to help your fact-checkers scale up and speed up their work.

WRITER’s claim detection tool flags statistics, facts, and quotes for human verification. Training WRITER on a company’s content as a dataset and prompting it with live URLs can also help to produce accurate, hallucination-free output.
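To make the idea of claim flagging concrete, here is a minimal sketch in Python. It is not WRITER’s implementation: the `flag_claims` function and the three patterns are hypothetical, and a production tool would use far more sophisticated detection. It only shows the basic workflow of surfacing sentences with statistics, dates, or quotes so a human can verify them.

```python
import re

# Hypothetical patterns for illustration only; not WRITER's claim detection.
CLAIM_PATTERNS = {
    "statistic": re.compile(r"\b\d+(?:\.\d+)?\s?%"),
    "year": re.compile(r"\b(?:19|20)\d{2}\b"),
    "quote": re.compile(r'["“][^"”]+["”]'),
}

def flag_claims(text):
    """Return (sentence, labels) pairs for sentences a human should verify."""
    # Naive sentence split on terminal punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        labels = [name for name, pattern in CLAIM_PATTERNS.items()
                  if pattern.search(sentence)]
        if labels:
            flagged.append((sentence, labels))
    return flagged

draft = (
    "Chatbots hallucinate as much as 27% of the time. "
    "Our product is easy to use. "
    "In May 2023, an attorney filed a motion with fabricated citations."
)

for sentence, labels in flag_claims(draft):
    print(labels, "->", sentence)
```

The sketch flags the first and third sentences and skips the second, which contains nothing checkable. The flagged sentences still need a person to confirm them against a source; the code only narrows down where to look.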

It’s also worth considering working with an AI tech partner who uses a “clean”, curated dataset for pre-training their models. That way, you can avoid datasets obtained from a huge web crawl that may include “fake news” and other sources of misinformation.

Take WRITER’s Palmyra LLMs as an example. They’re proprietary models trained on curated datasets with business writing and marketing in mind. So, if you’re looking for an AI designed specifically for use cases across the enterprise, Palmyra LLMs are your best bet.

Other risks for business use of generative AI

Hallucinations aren’t the only concern. Generative AI systems have the potential to give businesses a competitive edge, but they come with other significant risks and challenges. Key concerns include data security and privacy, intellectual property protection, compliance requirements, bias, vendor lock-in, and over-reliance.

Data security and privacy risks

Data security and privacy are understandably top concerns for businesses, and AI security is a critical aspect of managing these risks. Exposure of sensitive data, unauthorized access to personally identifiable information, and compliance violations can create significant challenges for companies.

For example, in May 2023, Samsung banned employees from using ChatGPT after sensitive company data was accidentally leaked through prompts. Similarly, in March 2023, a bug in an open-source library forced OpenAI to take ChatGPT offline temporarily due to a data breach.

This breach exposed some customers’ payment-related information and allowed active users to view chat history titles, highlighting the vulnerabilities even in widely trusted platforms.

Malicious actors may also attempt to exploit vulnerabilities in AI systems, leading to potential identity theft or exposure of proprietary information.

These examples show just how important it is for businesses to have solid data management strategies and to tread carefully when using generative AI tools—especially when dealing with sensitive or proprietary information.

Copyright concerns with generative AI models

The emergence of generative AI technology has thrown a wrench into our understanding of intellectual property and copyright infringement. As organizations implement these systems, they must carefully consider how their use of generative AI impacts existing legal frameworks. Organizations must also ensure that the data used in a generative AI model is properly safeguarded to prevent copyright infringement.

First, there’s the question of using copyrighted material in training data for AI models. In the US, it’s not illegal to scrape the web for this purpose. But a lot of legal brains have raised concerns that the existing laws may not be enough to protect creators whose material may have been used as part of that training data.

Then there’s the matter of whether AI-generated content is protected under copyright law. Right now, the US Copyright Office says it depends on how much human creativity is involved. The most popular AI systems probably don’t create work that’s eligible for copyright in its unedited state. That could be bad news if your company plans to sell AI-generated material, including digital products created with AI-generated code.

A real-world example underscores how tangled this can get. In the Southern District of New York, 17 authors, including Sarah Silverman, Paul Tremblay, and Ta-Nehisi Coates, sued OpenAI (and later Microsoft) for copyright infringement. They claim their copyrighted works were used without permission to train GPT models like ChatGPT. In a key development, OpenAI agreed to let plaintiffs inspect the training data under strict conditions.

The European Union’s AI Act may soon require AI companies to disclose details about training data, regardless of where it was collected. Similarly, the proposed Generative AI Copyright Disclosure Act of 2024 in the US aims to enhance transparency, requiring AI developers to clarify whether copyrighted works were part of their training datasets.

Compliance issues

With the release of GPT-powered APIs, we’ve all got a generative AI model in our pocket or in our favorite software tool — but one we can’t control. Companies can’t tweak general-use AI tools to match their brand, style, and terminology guidelines — which can lead to wonky and wrong output that doesn’t adhere to the brand or industry standards.

Say you’re trying to sell a product and a marketing team member uses ChatGPT to create a quick blurb about it. If the output includes a term like “eco-friendly” that isn’t allowed under ESG (environmental, social, and governance) standards, you could be up the creek without a paddle in certain countries.

Data bias

AI bias can create real problems, from reinforcing harmful stereotypes to enabling discriminatory practices. In fields like lending, these biases can spark regulatory backlash. In fields like human resources, AI bias can lead to unfair hiring practices and reinforce systemic inequities.

For example, a 2024 University of Washington study revealed significant racial and gender bias in how three advanced AI models ranked resumes. The study found that white-associated names were favored 85% of the time over Black-associated names, male-associated names were preferred 52% of the time versus female-associated names, and Black male-associated names were never favored over white male-associated names.

These findings reveal that unchecked generative AI systems can reinforce systemic inequities, raising significant ethical concerns about fairness and accountability.

Vendor lock-in challenges

Vendor lock-in can be a major headache for companies. When businesses become too dependent on proprietary platforms, they often face limited flexibility, inflated costs, and difficulty switching providers.

Imagine a company that builds its entire workflow around a specific AI platform, only to discover later that the provider is raising prices or phasing out certain features. Switching to a new vendor could require extensive retraining of AI models, causing delays, disruptions, and hefty expenses—all while leaving critical workflows vulnerable.

This reliance on a single provider can stifle adaptability and innovation, forcing businesses into uncomfortable compromises.

Over-reliance on generative AI

Generative AI can feel like a lifesaver, but relying too heavily on it has risks of its own. Over-reliance can reduce critical human insight, lead to unchecked errors, and even stifle creativity within teams.

Consider a marketing team that uses AI exclusively for content creation. While the AI may churn out text efficiently, the lack of human input might result in tone-deaf messaging or generic outputs that fail to resonate with the intended audience.

When businesses lean too hard on AI, they risk sacrificing originality and strategic thinking for convenience.

Organizations must strike a balance between leveraging artificial intelligence capabilities and maintaining human expertise in decision making. This requires careful attention to responsible AI practices and continuous monitoring of security risks.

Countermeasures: security features, data transparency, and custom training for responsible AI

The keys to avoiding all of these risks? A combination of enterprise-grade AI systems with built-in guardrails, responsible AI practices, and a self-reliant AI strategy.

Successful implementation requires careful attention to training data quality, robust governance frameworks, and continuous stakeholder engagement. Organizations must prioritize both innovation and ethical use in their artificial intelligence initiatives.

Ensuring data safety

To ensure you’re keeping your data safe, you’ll want an AI platform that doesn’t store user data or content that’s submitted for analysis for any longer than is needed. WRITER has you covered here, as we’ve been audited for several privacy and security standards, and have received major certifications, such as SOC 2, GDPR and CCPA, HIPAA, and Privacy Shield.

Addressing bias

Addressing bias requires more than just good intentions. WRITER’s Palmyra LLMs are trained on diverse, curated datasets and, combined with graph-based retrieval-augmented generation (RAG) capabilities, produce customized outputs. This approach reduces reliance on generalized, potentially biased web crawls, helping deliver fair and inclusive content tailored to your business needs.

Mitigating copyright infringement

To mitigate the risk of copyright infringement, it’s essential to vet your AI vendors and partner with companies that are transparent about the provenance of their training data.

WRITER, for instance, is committed to transparency and ethical practices. Our latest AI model, Palmyra X4, leverages synthetic data to achieve state-of-the-art performance in reasoning, tool calling, and API selection. This approach not only enhances privacy and fills data gaps but also ensures that the training data is reliable and ethically sourced. By using synthetic data, Palmyra X4 offers a more cost-effective and scalable solution compared to other models in the industry.

Ensuring brand unity

With the right AI platform and user training, you can avoid security and copyright risks, while at the same time ensuring brand unity. To get the most out of this tech, you’ll need to fine-tune your models for your specific use cases and train them with your own expertly crafted, human-generated content.

WRITER takes brand governance even further with features like Style guide and Terms. Customers can create their own custom Style guide that includes voice, writing style preferences, and approved terminology. WRITER will then suggest corrections when users veer off-brand in their writing or use non-compliant language.
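As a rough illustration of how a terminology check can work, here is a minimal Python sketch. It is an assumption-laden example, not how WRITER’s Style guide or Terms features are built: the `TERM_RULES` entries and the `check_terms` function are hypothetical, and real brand governance covers voice and style as well as banned terms.

```python
import re

# Hypothetical style-guide entries for illustration; not WRITER's data model.
TERM_RULES = {
    "eco-friendly": "describe the specific, verifiable environmental benefit",
    "world-class": "cite a concrete benchmark or remove",
}

def check_terms(text, rules=TERM_RULES):
    """Return (matched_text, position, suggestion) for each non-compliant term."""
    findings = []
    for term, suggestion in rules.items():
        # Match the term as a whole word or phrase, ignoring case.
        pattern = re.compile(r"(?<!\w)" + re.escape(term) + r"(?!\w)",
                             re.IGNORECASE)
        for match in pattern.finditer(text):
            findings.append((match.group(0), match.start(), suggestion))
    # Report findings in the order they appear in the text.
    return sorted(findings, key=lambda finding: finding[1])

blurb = "Our Eco-Friendly bottle is a world-class choice."

for term, position, suggestion in check_terms(blurb):
    print(f"{position}: '{term}' -> {suggestion}")
```

Keeping the rules in a plain dictionary means legal or brand teams can update the list without touching the checking logic, which is the same separation a managed style guide provides.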

Building a self-reliant AI strategy

Generative AI turns the traditional conversation about vendor lock-in on its head. Enterprises should be trying to choose vendors who can truly partner to help build the “golden four” of a self-reliant AI strategy:

- Use cases and business logic

- Data and examples

- In-house talent

- Organizational capacity for learning and change

WRITER’s no-code AI tools and backward-compatible platform updates allow enterprises to build and adapt internal generative AI systems to use cases and business logic without being tied to a single LLM.

Finally, fine-tuning domain-specific models and empowering in-house teams ensures businesses stay competitive. WRITER supports this with tools like AI Studio, where users can experiment with prompts, test outputs, and deploy custom LLM applications at scale. By doing so, businesses can retain ownership over their AI journey while fostering a culture of continuous improvement.

How Commvault partnered with WRITER to combat AI risks

Commvault, a leader in data protection, turned to WRITER to streamline content creation and empower its teams during a major platform repositioning. With WRITER’s Knowledge Graph, they developed Ask Commvault Cloud, a custom AI app that equips sales reps with on-brand messaging and quick access to critical resources.

Anna Griffin, Commvault’s Chief Market Officer, shared, “We’ve been able to get work that might’ve taken eight to 12 hours a day down to getting something outlined and ready for review in 20 minutes. It’s saving us countless hours and accelerating our ability to bring new messages to market effectively.”

By prioritizing security and customization, Commvault demonstrates how enterprises can use generative AI to enhance efficiency, maintain brand integrity, and drive meaningful results.

Generative AI is only as risky as the platform you use — and the people who use it

Generative AI could totally transform content creation for businesses, but only if the necessary precautions are taken. When it comes to using AI for business, the platform you go with makes all the difference. Investing in a reliable, enterprise-grade AI solution with quality datasets, secure storage, and customizable features is the way to go.

Keep in mind, though, that no matter how fancy the technology is, it’s only as good as the person using it. Make sure that your team understands the importance of brand and security compliance and is trained to use the tools properly. When writers have mastered the tools available, they can work more efficiently and create content that meets all of your company’s needs.