Generative AI governance: a toolkit for enterprise leaders

Generative AI opens up a wide range of opportunities for businesses in today’s fast-changing technological landscape.

As an enterprise leader, you play a pivotal role in shaping the future of generative AI development and implementation. You’re a trailblazer, setting the stage for innovation and paving the way for transformative advancements in the field.

But — as any Marvel fan can tell you — with great power comes great responsibility. You need to maximize the brilliant business outcomes that generative AI offers while avoiding potential risks. That’s where this generative AI governance toolkit comes in. This comprehensive guide not only emphasizes the importance of AI governance, but also provides a roadmap for enterprise IT leaders to build a robust governance framework tailored specifically for generative AI adoption.

At its core, this toolkit recognizes the need for a balanced approach that harnesses the brilliance of generative AI while mitigating potential risks. It delves into the ethical considerations surrounding AI, ensuring that the technology is developed and deployed in a manner that aligns with the values and principles of your organization.

But it doesn’t stop there. This toolkit goes beyond theory, offering practical insights and actionable steps to implement effective governance. From establishing clear policies and guidelines to fostering transparency and accountability, you’ll have the tools you need to navigate the complexities of generative AI adoption.


The relationship between AI governance and responsible AI adoption

AI governance is the GPS of the responsible AI adoption journey. It provides an ethical and accountable navigation system that organizations can rely on to keep their decisions in line with their AI adoption goals.

It’s important to think of responsible AI adoption as a journey, with each step building on the previous one. As with planning a road trip, the first step is understanding the terrain: here, the ethical implications of the technology. From there, the path becomes clearer with each deliberate step toward transparent and responsible use of AI. By investing in responsible AI adoption, your organization can build trust and safety while realizing the tangible value generative AI can offer your employees and customers.

Learn more about the ethical implications of generative AI for business:

The importance of AI ethics in business: a beginner’s guide

Essential principles for responsible AI adoption

AI adoption principles are the lifeblood of ethical and accountable AI practices, making sure that organizations tread the path of integrity and transparency. These principles serve as guiding beacons so that AI technologies are developed, deployed, and used in a manner that aligns with ethical and responsible practices.

By embracing and communicating these principles, your organization can establish a solid foundation for your AI governance frameworks, fostering trust, transparency, and accountability. These principles encompass crucial aspects such as fairness, explainability, privacy, and bias mitigation, addressing the potential risks and challenges associated with AI.

Here are the principles for responsible AI adoption:

Human-centric focus

Keep the needs and well-being of humans at the forefront of AI development and deployment, ensuring that AI systems are designed to augment human capabilities rather than replace them.

Respect for human dignity

Uphold the principles of fairness, equality, and non-discrimination, ensuring that AI systems do not perpetuate biases or harm individuals based on their race, gender, or other protected characteristics.

Clear purpose

Define a clear and specific purpose for implementing AI, aligning it with the values and goals of your organization to ensure that AI is used in a purposeful and meaningful way.

Data transparency

Foster transparency by providing clear and accessible information about the data used to train AI systems, enabling users to understand how decisions are made and promoting trust in AI technologies.

Respect for safety

Prioritize the safety of individuals and communities by implementing robust safety measures and protocols to mitigate potential risks and ensure that AI systems operate in a safe and reliable manner.

Auditability

Enable the ability to audit AI systems, allowing for accountability and the identification of potential biases, errors, or unintended consequences, ensuring that AI remains transparent and accountable.

Respect for human autonomy

Preserve and respect human autonomy by ensuring that AI systems don’t unduly influence or manipulate human decision-making processes, allowing individuals to retain control and make informed choices.

Security

Implement robust security measures to protect AI systems from unauthorized access, ensuring the integrity and confidentiality of data and preventing malicious use or attacks.

Privacy protection

Safeguard the privacy of individuals by implementing strong data protection measures, ensuring that personal information is collected, stored, and used in a responsible and ethical manner.

By keeping these essential principles in mind as you develop policies and invest in AI solutions, you can confidently navigate the responsible adoption of AI, creating a positive and beneficial impact on your business — and society.

A corporate AI governance framework pays off

Establishing a robust AI governance framework brings substantial benefits to businesses, including significant financial outcomes. By implementing effective governance practices, organizations can mitigate risks, improve operational efficiency, and drive revenue growth. Let’s explore some key business benefits that arise from establishing generative AI governance.

“Where AI governance frameworks are implemented, AI governance has resulted in more successful AI initiatives, improved customer experience, and increased revenue.”

Gartner

Enhanced operational efficiency

Companies that have employed governance frameworks to adopt enterprise-grade generative AI at scale have witnessed remarkable improvements in operational efficiency. They experience fewer bottlenecks, increased productivity, improved quality, and substantial time savings on tedious tasks.

For instance, SentinelOne, a global computer and network security company, uses generative AI to streamline their content production process across several teams. As a result, they saved over 100 hours per month, reduced QA time by 50%, and achieved more consistent document creation. These efficiency gains allow teams to focus on mission-critical projects, driving productivity and cost savings.

Resource optimization and revenue growth

Generative AI governance empowers organizations to offload tedious taskwork, enabling their talented workforce to concentrate on high-value initiatives that directly impact financial success.

Ivanti, a security automation platform, leveraged generative AI to automate marketing tasks, reducing content production time by half. This efficiency gain allowed their team to repurpose content more efficiently and allocate more time and energy to creative endeavors.

Similarly, Adore Me, a fashion industry company, used generative AI to maintain compliance and consistency in content production. By automating and streamlining the process, they saved countless hours previously spent on monotonous tasks, enabling them to scale their business and deliver a better customer experience.

Avoiding costly mistakes

A recent report from Gartner reveals that organizations that have implemented AI governance frameworks have experienced a reduction in costs and avoided failed initiatives.

By adhering to governance practices, companies can identify and address potential risks associated with AI implementation, ensuring compliance with regulations and industry standards. This proactive approach helps organizations avoid costly penalties, fines, and legal issues that may arise from non-compliance. By integrating AI governance into their operations, businesses can achieve cost savings while maintaining a strong focus on responsible and ethical AI practices.

These examples and insights demonstrate how a well-implemented generative AI governance framework optimizes resource allocation, improves productivity, enhances operational efficiency, and achieves cost savings. By adopting such a framework, organizations can unlock the full potential of generative AI, driving financial outcomes, mitigating risks, and gaining a competitive edge in the market.

The challenges ahead in your AI governance journey

It’s still early days for an emerging and disruptive category of technology. Companies building governance policies and frameworks for generative AI are navigating through uncharted and rapidly changing territory. The key challenge for businesses is to learn how to use this technology responsibly and ethically while staying ahead of regulatory demands.

Business leaders need to consider the potential risks associated with relying on automated decision-making and make sure that appropriate safeguards are implemented. It’s also essential to use AI technologies in a way that builds trust with customers, colleagues, and other stakeholders.

All of this is made harder by the fact that generative AI technology is constantly evolving, so potential issues are hard to anticipate before they occur. Navigating these uncharted waters requires careful reflection and analysis to make sure that the use of AI technologies is beneficial for all involved.

Practical considerations of an AI governance framework

Think of setting up an AI governance framework like building a solid foundation for a house. You want to consider practical factors that’ll ensure the responsible and effective use of AI, just like you’d want a sturdy base to support the entire structure.

- Establishing training requirements

Ensure that everyone involved in AI strategy planning, development, vendor evaluation, and deployment receives comprehensive training to understand the risks and ethical considerations associated with AI.

- Setting up a continuous audit process

Implement regular audits to identify biases, errors, and unintended consequences in AI systems, fostering transparency and accountability.

- Establishing a system for reporting AI-related incidents

Create a reporting system for users and employees to report concerns, biases, or adverse effects, promoting transparency and continuous improvement.

- Establishing an AI ethics code

Develop a code that outlines principles and values guiding AI development and use, addressing fairness, transparency, privacy, and accountability.

- Developing metrics for measuring AI performance

Create metrics to assess accuracy, reliability, and fairness of AI systems, enabling evaluation and improvement while enhancing transparency and trust.
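
To make the metrics item above more concrete, here is a minimal Python sketch of two measurements an audit team might start with: accuracy against human-reviewed labels, and a simple fairness gap (the difference in positive-outcome rates between groups, often called demographic parity difference). The function names, the sample data, and the choice of these two metrics are illustrative assumptions, not part of any specific framework described here.

```python
from collections import defaultdict


def accuracy(predictions, labels):
    """Fraction of predictions that match the human-reviewed labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("need at least one example")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def positive_rate_by_group(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: 1 = approved, 0 = rejected.
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
labels = [1, 0, 1, 0, 0, 1, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print("accuracy:", accuracy(predictions, labels))
print("positive rate by group:", positive_rate_by_group(predictions, groups))
print("parity gap:", demographic_parity_gap(predictions, groups))
```

A gap near zero suggests groups receive positive outcomes at similar rates. What counts as an acceptable gap is a policy decision for your governance committee, not something the code can settle.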

What an AI governance framework looks like

While AI governance frameworks will vary depending on the unique needs and culture of the organization, the key components remain the same:

- Policies and procedures

Established policies and procedures are essential for making sure that AI is used responsibly and ethically.

- Risk assessment and management

AI governance frameworks should include a thorough risk assessment process to identify, assess, and mitigate any potential risks associated with AI technology.

- Data governance

Data governance is essential for ensuring that data is collected, stored, and used in a responsible and ethical manner.

- Transparency

The systems these frameworks govern should be transparent and explainable to ensure that users understand how the system works and can trust the results.

- Auditing and monitoring

AI governance frameworks should include an auditing and monitoring process to make sure that AI systems are operating according to established policies and procedures.

- Stakeholder engagement

AI governance frameworks should include a process for engaging stakeholders and guaranteeing that their perspectives are taken into account.

Global digital consultancy Perficient provides a complete approach with their P.A.C.E. framework for operationalizing generative AI:

- Policies

As new and innovative solutions emerge in generative AI, it’s important to have corporate guidelines and boundaries in place for acceptable usage. By establishing these guidelines and boundaries, companies can make sure that their AI usage is aligned with their company’s goals and values.

- Advocacy

Educating all stakeholders about the benefits of AI and promoting AI literacy and adoption. Advocacy is a critical component of success for AI initiatives, and can be a source of inspiration and motivation for employees, customers, and partners.

- Controls

As generative AI solutions become more prevalent, it’s important that companies take the necessary steps to oversee, audit, and manage the risks associated with them. This can include regular monitoring and assessment of AI systems, as well as developing procedures for how to respond to any potential incidents or errors.

- Enablement

It’s critical to have the necessary resources, tools, and support structures in place to use AI technology responsibly. This can help to make sure that users have the information they need to make responsible decisions about the usage of AI systems.

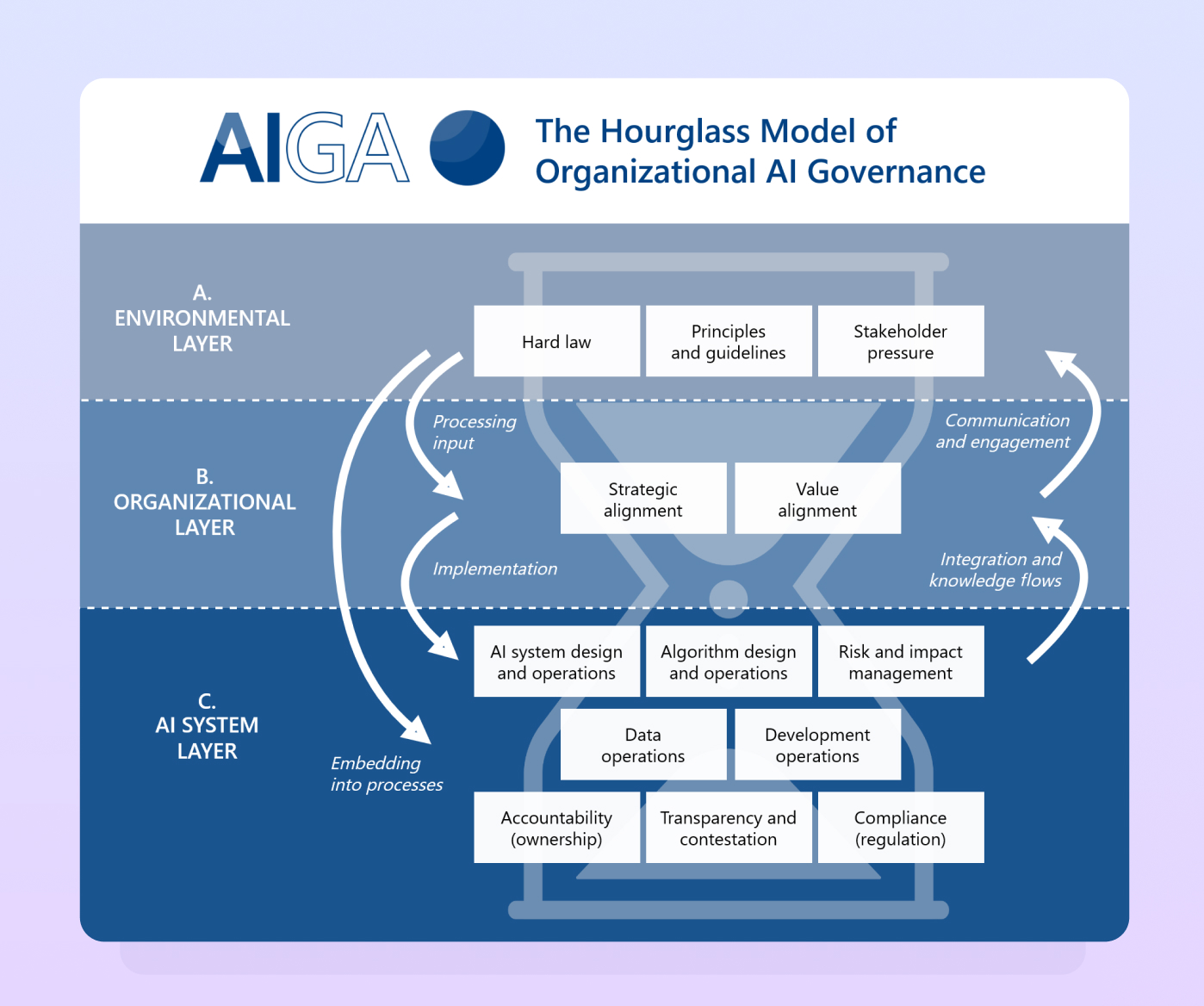

In the European Union, the Artificial Intelligence Governance and Auditing (AIGA) project has developed “the hourglass model,” a three-layer framework for organizational AI governance.

These models are designed to adapt to many kinds of companies and organizations across industries. More models will no doubt emerge as large enterprises develop, evolve, and communicate their approaches to AI implementation. Now is the time to research the governance landscape, discover approaches your company can incorporate, and assess areas where your company still needs to catch up.

Establishing your AI Center of Excellence (CoE)

An AI Center of Excellence (CoE) plays a vital role in supporting AI governance by providing expertise, guidance, policy development, risk assessment, training, and monitoring, and by fostering collaboration. Its involvement helps ensure that AI initiatives align with ethical standards, regulatory requirements, and organizational goals, leading to responsible and successful AI implementation.

When forming an AI CoE committee on governance, consider the following:

- Who should be involved?

Identify the right people to serve on a multi-disciplinary team. This includes executive leaders of departments with AI use cases, IT and cybersecurity leaders, and any other important personnel such as legal or compliance representatives.

AI will affect your workers, and AI bias harms historically disadvantaged groups, so prioritize bringing in the voices of those who aren’t usually represented. Whether you plan to build AI solutions in-house or work with third-party AI vendors, you’ll also want to include key decision-makers like project managers and procurement specialists.

- What is the purpose of the governance committee?

The CoE should have a clear objective and mandate to achieve its goals. Here are some questions to consider:

- What is the scope of the CoE’s responsibilities?

- What decisions will the CoE be responsible for?

- How will we measure the impact of the CoE?

- How will the CoE make sure AI is used ethically and responsibly?

- How will the CoE operate?

Leaders must consider how the CoE will function and interact with other departments, both internally and externally. Questions to consider include:

- What processes and procedures will be in place?

- Will there be any reporting requirements?

- What are our communication channels?

- How will we interact with other departments and stakeholders?

- How will the committee be held accountable?

An AI Center of Excellence plays a key role in enterprise AI beyond governance. It plans AI initiatives, conducts research and development, develops proofs of concept, fosters collaboration, builds AI capabilities through training, evaluates vendors and partnerships, monitors and optimizes AI performance, and promotes ethics and responsible AI practices. The AI CoE acts as a catalyst for AI strategy, innovation, collaboration, and capacity building, and helps ensure the effective and responsible use of AI technologies within the organization.

Developing AI governance policies and procedures

Crafting effective AI governance policies and procedures is a top priority for your AI governance committee. To ensure ethical and responsible AI adoption, your corporate AI policy should cover key elements:

- Compliance with legal and regulatory standards is crucial. This means following data privacy, intellectual property, and consumer protection laws to avoid legal issues and penalties.

- Protecting data privacy is paramount. Establish strict protocols for data collection, storage, and usage to safeguard the privacy of customers and employees.

- To prevent bias and discrimination, conduct regular bias reviews and audits. AI language models can absorb biases from training data, so it’s essential to ensure fair and unbiased outputs.

- Emphasize the ethical use of AI. Discourage replacing human employees with AI and promote AI as a tool to augment jobs, aligning with your organization’s values.

- Regularly review and update your corporate AI policy to keep pace with evolving technology, regulations, and societal norms. Incorporate feedback from employees and customers to maintain effectiveness and relevance.

By incorporating these elements into your corporate AI policy, you can establish a framework that promotes ethical AI use, mitigates risks, and supports compliance.

Assessing and managing the risks

It’s crucial to proactively address the risks associated with generative AI. This comprehensive table serves as a valuable tool for assessing and mitigating potential risks in areas such as data privacy, accuracy, bias and fairness, and ethical considerations. By shining a spotlight on these risks, you can navigate the complex terrain of AI governance with an analytical and ambitious approach, while prioritizing the well-being of your people and your customers.

| Risk Category | Potential Risks | Impact | Mitigation Strategies |
| --- | --- | --- | --- |
| Data privacy | Unauthorized data access | Breach of privacy laws | Implement strong access controls, encryption, and data anonymization. Regularly audit data access. Obtain explicit user consent. Comply with relevant regulations. Train employees on data privacy. |
| Bias and fairness | Biased training data | Discrimination | Use diverse and representative training data. Regularly evaluate and mitigate bias in AI models. Conduct fairness assessments. Involve diverse stakeholders in model development. Provide transparency in decision-making process. Regularly monitor and update models. |
| | Non-compliance with regulations and industry standards | Legal consequences and penalties | Stay updated with applicable laws, regulations, and industry standards. Establish compliance monitoring and reporting mechanisms. Conduct regular compliance audits. Provide training on compliance requirements to employees. Collaborate with legal and regulatory experts. Implement processes for handling and reporting non-compliance. |
| Ethical considerations | Unintended harmful consequences | Negative societal impact | Establish clear ethical guidelines for AI development and deployment. Conduct ethical impact assessments. Engage with relevant stakeholders. Encourage transparency and openness. Regularly review and update ethical guidelines. Foster a culture of ethical AI use. Provide channels for reporting ethical concerns. Regularly educate employees on ethical considerations. Foster interdisciplinary collaboration. |
| Accuracy | Inaccurate outputs | Misinformed decisions | Continuously evaluate and improve model performance. Regularly update training data. Conduct rigorous testing and validation of AI models. Implement feedback loops for model improvement. Monitor and address biases in training and evaluation data. Provide explanations and transparency in predictions. |
| | AI hallucinations | Misleading or harmful information | Implement strict validation mechanisms (claim detection, fact-checking) to detect and prevent AI hallucinations. Regularly review and update language models to minimize hallucination risks. Incorporate human oversight and intervention in critical decision-making processes. Continuously train AI models on real-world data to reduce hallucination tendencies. Implement retrieval-augmented generation (RAG) (e.g., the Knowledge Graph feature in WRITER) to improve the accuracy and relevance of generated content. |
| | Infringement of copyrighted material | Legal consequences and penalties | Obtain proper licenses and permissions for copyrighted content. Implement content filtering and copyright. |
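
The risks and mitigation strategies listed above are easier to track over time if they live in a structured register instead of a static document. The sketch below is one possible shape for such a register in Python. The class names, the `done` flag, and the completion statuses are hypothetical and shown only for illustration; the risk names and mitigation text are taken from the list above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Mitigation:
    description: str
    done: bool = False  # hypothetical status flag, updated by the owning team


@dataclass
class Risk:
    name: str
    impact: str
    mitigations: List[Mitigation] = field(default_factory=list)

    def open_mitigations(self) -> List[str]:
        """Descriptions of mitigations that are not yet marked done."""
        return [m.description for m in self.mitigations if not m.done]


def open_items(register: List[Risk]) -> Dict[str, List[str]]:
    """Map each risk with outstanding work to its open mitigations."""
    return {
        risk.name: risk.open_mitigations()
        for risk in register
        if risk.open_mitigations()
    }


# Two rows from the list above, with made-up completion statuses.
register = [
    Risk(
        name="Unauthorized data access",
        impact="Breach of privacy laws",
        mitigations=[
            Mitigation("Regularly audit data access", done=True),
            Mitigation("Obtain explicit user consent"),
        ],
    ),
    Risk(
        name="Biased training data",
        impact="Discrimination",
        mitigations=[
            Mitigation("Conduct fairness assessments", done=True),
        ],
    ),
]

for name, items in open_items(register).items():
    print(f"{name}: {len(items)} open mitigation(s)")
    for item in items:
        print(f"  - {item}")
```

A governance committee could review the output of `open_items` at each meeting, so that outstanding mitigations stay visible until someone closes them.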

Find out more about the risks associated with AI and how to avoid them. Read Generative AI risks and countermeasures for businesses.

Generative AI governance is a team effort

Generative AI governance is an ongoing process. Like all company-wide initiatives, it requires collaboration and clear communication across teams. And because generative AI is an emerging technology, it also requires careful guidance from technology partners.

At WRITER, we’ve had the privilege of partnering with and learning from our enterprise customers as they forge a new path toward digital transformation with generative AI. We run on-site training workshops on generative AI and help our enterprise customers develop strategies for implementation that make sense to their organizations. If you’re interested in learning more about how WRITER can partner with you in your generative AI governance and adoption journey, get in touch.