The agentic AI governance playbook

An enterprise framework for AI supervision, observability, and trust

Introduction

Enterprise AI has a new job description — taking action. Yesterday’s AI was a research assistant, built to answer questions. Today’s agentic AI is a system of action, designed to execute complex business processes. This fundamental shift from output to outcome changes everything about how you govern it. Where generative AI governance focused on controlling model inputs and outputs, agentic AI governance supervises the actions and outcomes of autonomous systems.

Agentic AI is a class of AI systems that autonomously plan and execute multi-step tasks to achieve a specific goal. Unlike passive models, these agents orchestrate complex workflows, interact with enterprise systems, and make decisions. When an AI can independently execute a process, the focus must shift from what a model might say to what an agent will do. This requires agentic AI governance, the framework of policies, tools, and processes for supervising autonomous AI systems to ensure their actions are safe, transparent, and aligned with business intent.

In this new world, governance isn’t just about avoiding risk or checking boxes for the compliance team. This is Governance-as-Enablement — a strategic function that unlocks speed, not a tax that slows it down. Think of it less like a brake pedal and more like the guardrails on a highway — it enables you to move fast, safely. An effective governance strategy builds the operational trust required for widespread business adoption, connecting agent performance to measurable value while always aligning with human mandates.

- Your AI is graduating from answering questions to taking action. This new agentic workforce can’t be managed with old rules, demanding a new playbook for oversight that you control.

- To govern this power, WRITER introduces the Agentic Compact. This is a new rulebook that gives you the framework to ensure every autonomous action is safe, transparent, and aligned with your business goals.

- You get safety by design, not by chance. The WRITER platform provides a secure playground with unbreakable guardrails and sandboxed environments, giving you the power to contain your AI’s actions and prevent unintended consequences.

- You can eliminate the “black box” problem. With WRITER, every AI action is backed by citable sources and clear documentation, allowing you to demand complete accountability and trace any decision back to its origin.

- You stay in the driver’s seat of business value. A live dashboard in WRITER AI HQ gives you real-time visibility, empowering you to monitor performance and prove your AI workforce is delivering tangible ROI, not just staying busy.

The new governance imperative: From responsible AI to the Agentic Compact

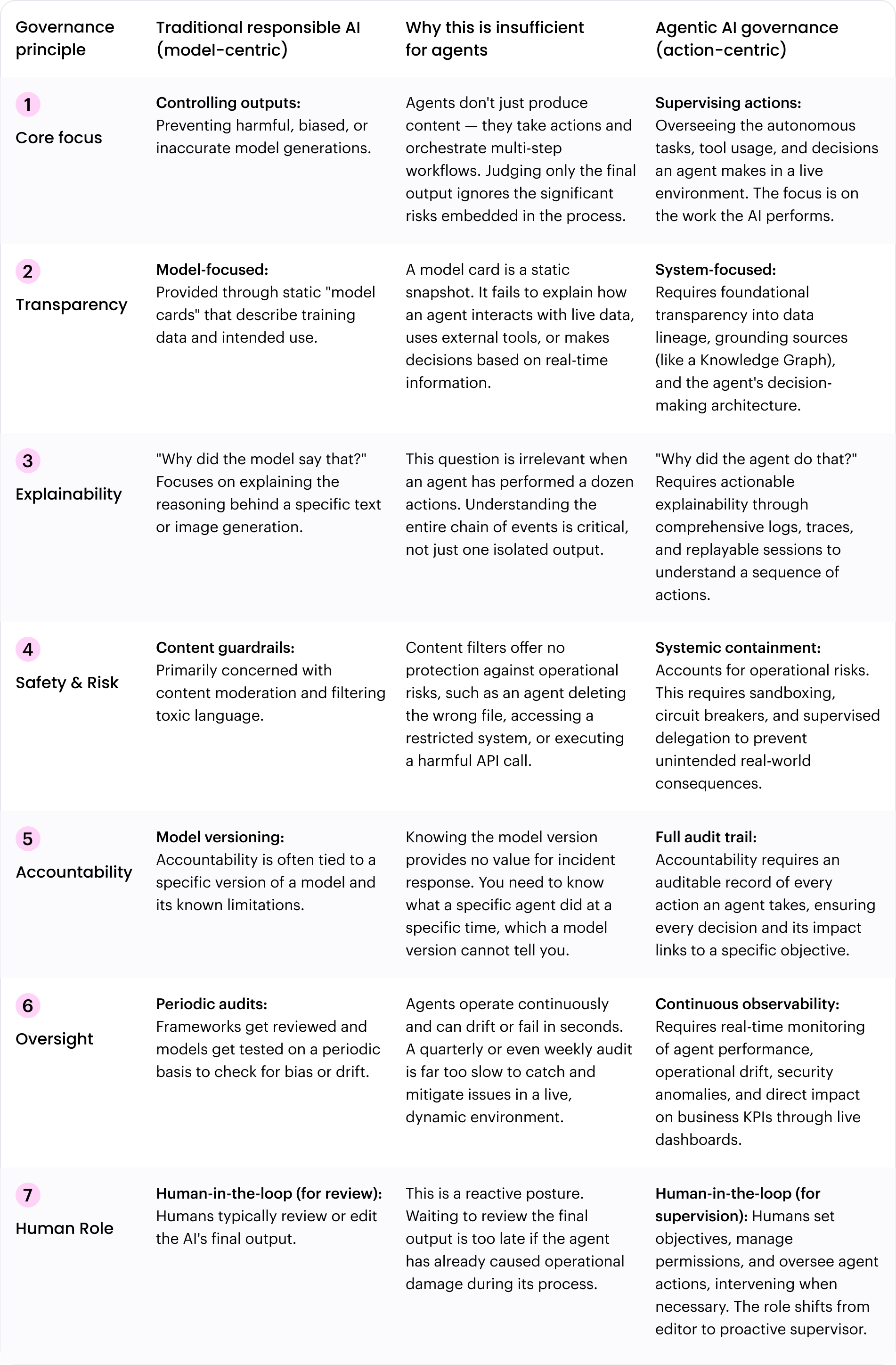

The established principles of responsible AI — fairness, accountability, privacy, and transparency — provided a critical foundation for governing passive AI. These pillars remain essential, but they were designed for a world where AI suggested and humans acted. They weren’t built to cross the Action Gap — the divide between what an AI says and what it does. Agentic AI closes that gap, and in doing so, creates new governance challenges that traditional frameworks cannot solve.

Why traditional responsible AI frameworks fall short for agentic AI

Agentic AI operates beyond the scope of these traditional frameworks. Its defining characteristics create distinct governance gaps that require attention. The autonomy of agents introduces the risk of unintended actions at scale. Their adaptive nature can lead to unpredictable behavior that’s difficult to forecast. The complexity of orchestrating tasks across multiple systems challenges clear lines of sight and control. You can’t close these gaps with policies alone. You need a new operational contract for your new digital workforce.

To bridge this divide, we at WRITER propose The Agentic Compact, a prescriptive framework for building and supervising a digital workforce safely. It’s a set of six guiding articles designed not to replace responsible AI, but to complete it. The Compact provides a clear path for achieving Operational AI Trust — a specific, measurable confidence in the reliability of your digital workforce — and implementing the supervision required for full-scale enterprise adoption. It ensures that as agents take on more responsibility, they do so safely and effectively.

But before detailing the solution, a leader must first diagnose the problem. The market is overflowing with high-risk shortcuts and dead-end solutions. The Enterprise AI Landscape model, below, provides a clear map of this terrain, allowing you to assess the strategic viability of any AI solution and understand the one true path to scalable success.

Many AI solutions operate as a “black box,” sending your sensitive data to third-party models with little transparency or control. This approach forces enterprises to choose between innovation and security — a compromise that’s no longer necessary.

WRITER’s architecture is fundamentally different. By owning the entire stack — from our family of Palmyra LLMs to the Knowledge Graph that grounds them in your enterprise data — we create a secure, closed-loop system.

This integration delivers trust and compliance for two critical reasons:

- Your data stays your data. The Knowledge Graph acts as a factual anchor, so that Palmyra doesn’t just invent answers (hallucinate) but generates content and takes actions based exclusively on your approved, up-to-date information. Your proprietary data never leaves the secure WRITER environment to be used for training third-party models. This guarantees that every output is not only accurate but also compliant with your brand, style, and regulatory guidelines.

- Complete, end-to-end auditability. Because we control every step of the process, we can offer a transparent, end-to-end trail for every agentic task. You can see the initial prompt, the specific data retrieved from the Knowledge Graph to inform the action, and the final output or decision. This provides a level of granular accountability that is impossible to achieve with external, non-auditable APIs.

Ultimately, WRITER’s full-stack approach transforms AI from a high-risk dependency into a secure, compliant, and fully integrated extension of the enterprise itself.

The enterprise AI landscape model

Deconstructing the four quadrants of the enterprise AI landscape

Quadrant 1 (bottom-left): Yesterday’s risk

This quadrant is populated by basic API wrappers and thin applications built on top of third-party consumer models. While easy to experiment with, these solutions represent an unacceptable enterprise risk. Lacking any meaningful governance, data control, or compliance features, they offer low strategic value and expose the organization to data leaks, hallucinations, and brand damage. This is the realm of shadow AI — a strategic dead-end.

Quadrant 2 (top-left): The unscalable gamble

Here reside the ambitious but flawed attempts to build agentic capabilities on top of a patchwork of third-party models and open-source tools. While these systems may demonstrate impressive agentic functions in a lab, they are a house of cards in an enterprise context. Without an integrated governance stack, there’s no end-to-end auditability, no unified safety control, and no way to guarantee compliance. Scaling these systems is a high-stakes gamble on models you don’t control and data pipelines you can’t secure.

Quadrant 3 (bottom-right): Limited potential

This quadrant represents secure, often on-premise systems that have strong governance but lack true agentic capabilities. These platforms may be excellent as passive knowledge bases or content generators, but they cannot take action or automate complex workflows. They are safe but not transformative. While they avoid the risks of the left-hand quadrants, they also fail to unlock the massive productivity gains of an agentic workforce, offering only incremental improvements.

Quadrant 4 (top-right): The agentic enterprise

This is the destination for any serious enterprise AI initiative. In this quadrant, transformative agentic capability fuses with the security and compliance of an integrated, full-stack governance platform. This is the only space where organizations can safely build, activate, and supervise a digital workforce at scale. WRITER is the only platform built natively for this quadrant. By owning the entire stack — from the Palmyra family of models to the Knowledge Graph and the AI HQ control panel — we provide the unified environment required to deploy powerful agents with complete confidence, turning the promise of AI into measurable business outcomes.

To be clear, the Landscape is a diagnostic tool to assess the market. The Compact is the actionable framework to lead it.

The articles of the Agentic Compact

The six articles of the Compact are the foundational pillars for building, activating, and supervising a true agentic enterprise.

Article I

Systemic safety and containment

Before you can trust an agent to act autonomously, you need complete certainty that its actions will remain within safe, predefined boundaries. This is the principle of systemic safety and containment: establishing a secure operational environment where agent autonomy is a privilege earned within a fortress of technical and procedural safeguards. Safety isn’t a feature — it’s a non-negotiable prerequisite for innovation.

This first article builds a trusted operational perimeter. It ensures that no matter how adaptive an agent becomes, its core permissions and boundaries are non-negotiable. This is how you move from theoretical safety to applied safety.

At WRITER, systemic safety is an engineering principle, not a policy. We deliver it through:

- Granular guardrails: Configure rules in our control panel to govern everything from voice and factual claims to API usage and data access, creating a non-negotiable operational perimeter.

- Enterprise role-based access control (RBAC): Ensure users and their agents only have the permissions required for their roles, preventing unauthorized actions.

- Supervised delegation: Guarantee that when a human delegates a task, the permissions are scoped and monitored — never absolute.
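To make scoped, monitored delegation concrete, here is a minimal Python sketch. It is an illustration only, not WRITER's API: the `Role` and `Delegation` classes and the action names such as `crm.update_ticket` are hypothetical. The idea it shows is that an agent receives only the intersection of what was requested and what its delegator already holds, and that every authorization decision is logged.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Role:
    """A named role and the actions it may perform (hypothetical model)."""
    name: str
    allowed_actions: frozenset


@dataclass
class Delegation:
    """Permissions a human hands to an agent for a single task."""
    delegator_role: Role
    requested_actions: frozenset
    audit_log: list = field(default_factory=list)

    @property
    def scoped_actions(self) -> frozenset:
        # The agent never receives more than its delegator holds.
        return self.requested_actions & self.delegator_role.allowed_actions

    def authorize(self, action: str) -> bool:
        allowed = action in self.scoped_actions
        # Record every decision so the delegation stays monitored.
        self.audit_log.append((action, "allowed" if allowed else "denied"))
        return allowed


# Hypothetical example: a support rep delegates a ticket update.
support_rep = Role("support_rep", frozenset({"crm.read", "crm.update_ticket"}))
task = Delegation(
    delegator_role=support_rep,
    requested_actions=frozenset({"crm.update_ticket", "crm.delete_account"}),
)
```

In this sketch, `crm.delete_account` is denied because the delegator never held it, and `crm.read` is denied because it was not requested for this task. Both denials still land in the audit log.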

Article II

Foundational transparency

For generative AI, transparency often began and ended with a model card — a static summary of a model’s architecture and training data. This is no longer enough. For an autonomous agent that makes decisions and interacts with enterprise systems, we need foundational transparency. This principle demands a clear, accessible understanding of an agent’s core components, including its data lineage (where its knowledge comes from), its objectives (what it is programmed to achieve), and its decision architecture (the tools and logic it uses to execute tasks).

Foundational transparency isn’t understanding every single weight in a neural network. It’s having a complete blueprint of the agent as a system. If you can’t trace the data source of the information an agent uses or clearly state its operational goals, you cannot truly trust its outputs or its actions. This moves transparency from a passive disclosure to an active, auditable record of an agent’s design and purpose.

WRITER delivers foundational transparency by providing a complete, auditable blueprint for every agent. We achieve this with:

- Citable knowledge sources: Ground agents in your own single source of truth with WRITER Knowledge Graph, guaranteeing every action relies on company-verified data, not the open internet.

- Clear model documentation: Provide clear insight into our Palmyra family of LLMs so you understand their design, capabilities, and training.

- Centralized agent configuration: Manage and view every agent’s permissions, tools, and objectives in one place for an unambiguous, auditable decision-making framework.
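One way to picture an agent "blueprint" is as a single, serializable record of its objective, data lineage, and tools. The Python sketch below is an assumption for illustration: `AgentBlueprint` and its field names are hypothetical and do not describe how WRITER stores agent configuration.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentBlueprint:
    """Hypothetical record of what an agent is for and what it may use."""
    name: str
    objective: str       # what the agent is meant to achieve
    data_sources: tuple  # data lineage: where its knowledge comes from
    tools: tuple         # decision architecture: what it can call
    owner: str           # the human accountable for the agent

    def missing_fields(self) -> list:
        """Return the names of blueprint fields that were left empty."""
        return [key for key, value in asdict(self).items() if not value]

    def to_audit_record(self) -> str:
        """Serialize the blueprint as stable JSON for an audit trail."""
        return json.dumps(asdict(self), sort_keys=True)


blueprint = AgentBlueprint(
    name="invoice-triage",
    objective="Route incoming invoices to the right approver",
    data_sources=("finance-policies",),
    tools=(),  # left empty on purpose to show the completeness check
    owner="ap-team-lead",
)
```

A governance review could refuse to activate any agent whose `missing_fields()` list is not empty, since an agent without a stated objective or data source cannot be audited.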

Article III

Actionable explainability

With traditional AI, the central question of explainability was, “Why did the model say that?” The focus was on trying to understand the reasoning engine behind a specific output, like a classification or a sentence. But when an AI agent can execute a multi-step workflow, access a database, or update a CRM, the question becomes far more critical: “Why did the agent do that?” This is the principle of actionable explainability.

Actionable explainability moves beyond interpreting a model’s internal state to reconstruct an agent’s entire decision-making journey. It delivers a complete, step-by-step audit trail that shows not just the outcome, but the sequence of reasoning steps, tool calls, and actions that led to it. True accountability depends on being able to retrace that sequence after the fact. Without it, you don’t have a “black box.” You have an unmanaged employee with access to your enterprise systems. That’s an unacceptable risk.

WRITER answers “Why did the agent do that?” with undeniable proof, not guesswork. We provide full accountability through:

- Human-readable logs: Track every step of Action Agent — our fully autonomous general-purpose agent for enterprises — in clear, understandable language, not cryptic code.

- Replayable sessions: Use our “flight recorder” for agent tasks to watch a complete, step-by-step replay of every tool used, decision made, and piece of data accessed.
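As a rough illustration of the “flight recorder” idea, the Python sketch below captures each step of an agent task and replays it as human-readable lines. It is a simplified assumption, not WRITER's logging format: `FlightRecorder`, `Step`, and the identifier `crm:account/123` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    index: int
    kind: str            # "thought", "tool_call", or "action"
    summary: str         # plain-language description of the step
    data_accessed: tuple = ()


@dataclass
class FlightRecorder:
    """Append-only record of everything an agent did for one task."""
    task: str
    steps: list = field(default_factory=list)

    def record(self, kind: str, summary: str, data_accessed=()) -> None:
        self.steps.append(
            Step(len(self.steps) + 1, kind, summary, tuple(data_accessed))
        )

    def replay(self) -> list:
        """Return the session as ordered, human-readable lines."""
        lines = [f"Task: {self.task}"]
        for step in self.steps:
            line = f"{step.index}. [{step.kind}] {step.summary}"
            if step.data_accessed:
                line += f" (data: {', '.join(step.data_accessed)})"
            lines.append(line)
        return lines


recorder = FlightRecorder("Update renewal date")
recorder.record("thought", "Need the current contract terms")
recorder.record("tool_call", "Looked up contract in CRM", ["crm:account/123"])
recorder.record("action", "Set renewal date on the account record")
```

Calling `recorder.replay()` returns the task followed by one numbered line per step, which is the kind of trail a reviewer can read without parsing raw system logs.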

Article IV

Continuous observability

Once an agent deploys, governance doesn’t stop. It becomes a continuous, active process. Traditional model monitoring focused on static metrics like accuracy and bias at a single point in time. But agents operate in dynamic environments where their behavior can drift, unforeseen issues can arise, and you constantly have to measure their impact on business outcomes. This is the principle of continuous observability.

Continuous observability provides real-time supervision of an agent’s performance, behavior, and business impact. It actively monitors for performance degradation, behavioral anomalies, and concept drift to ensure the agent continues to operate safely and effectively. More importantly, it connects the agent’s actions directly to business value, answering the critical question — “Is this agent delivering the expected ROI?” Without continuous observability, you’re managing a workforce with the lights off. You can’t scale what you can’t see.

With WRITER AI HQ, you get a single pane of glass to supervise your entire digital workforce in real time. We connect agent actions to business outcomes through:

- Live risk scores: Get an immediate pulse on operational safety and compliance, with instant alerts when an agent’s behavior deviates from established norms.

- Business KPI dashboards: Track agent performance against the metrics that matter to your business, providing clear, quantifiable proof of ROI.
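Alerting when behavior “deviates from established norms” can be sketched as a comparison against a baseline. The Python example below uses a simple standard-deviation test. This is a stand-in technique chosen for illustration, not a description of how WRITER computes risk scores, and the metric (records modified per hour) and the threshold are assumptions.

```python
from statistics import mean, stdev


def deviation_score(baseline, observed):
    """How many standard deviations `observed` sits from the baseline mean.

    `baseline` needs at least two data points for `stdev` to work.
    """
    spread = stdev(baseline)
    if spread == 0:
        return 0.0 if observed == mean(baseline) else float("inf")
    return abs(observed - mean(baseline)) / spread


def should_alert(baseline, observed, threshold=3.0):
    """Flag behavior that is more than `threshold` deviations from normal."""
    return deviation_score(baseline, observed) > threshold


# Hypothetical metric: records an agent modified per hour over the past week.
records_per_hour = [40, 42, 38, 41, 39, 40, 43]

normal_hour = should_alert(records_per_hour, 41)     # within the usual range
runaway_hour = should_alert(records_per_hour, 120)   # far outside it
```

A production system would likely track several signals and use more robust statistics, but the principle is the same: define normal first, then alert on the gap.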

Article V

Workforce enablement and education

Technology alone can’t transform an enterprise — people do. The most powerful AI agent is useless if your team isn’t ready to work with it. The shift to an agentic enterprise requires a corresponding evolution in roles, skills, and organizational structure. This is the principle of workforce enablement and education.

This isn’t just training users on a new interface. It’s creating a new operational model and fostering new roles to manage this digital workforce. This includes cultivating AI champions who advocate for adoption and identify new use cases, appointing program directors who oversee the agentic workforce strategy and ensure alignment with business goals, and empowering agent owners — subject matter experts who are responsible for the performance and behavior of specific agents. This principle ensures that as you scale your digital workforce, you are also scaling your human capacity to govern it effectively.

WRITER turns your business experts into agent builders, scaling your human capacity to govern a digital workforce. We achieve this with:

- No-code Agent Builder: Empower subject matter experts in marketing, sales, and support to directly build, test, and deploy the agents they need — no developers required.

- Comprehensive enterprise services: Partner with our team to establish governance structures, build centers of excellence, and train the AI champions who will lead your transformation.

Article VI

The human mandate

The five preceding articles establish the technical and organizational guardrails for agentic AI. But to what end? The final — and most important — principle is the human mandate, which dictates that all agentic systems must ultimately serve human-defined objectives. It’s the foundational principle ensuring that this new form of artificial intelligence operates not just for its own sake, but in direct alignment with our values, ethics, and strategic business goals.

The human mandate defines the entire Agentic Compact. It dictates that every action an agent takes must trace back to a clear human goal and a measurable business outcome. Autonomy without purpose is chaos — autonomy with purpose is transformation. This principle reframes agentic AI’s role from merely completing tasks to driving specific, quantifiable results, ensuring that this powerful technology always serves human-defined objectives.

This means that for every agent deployed, we answer not only what it did, but why it did it and how its actions contributed to the organization’s success. This guarantees that agent autonomy is never aimless. It’s always directed, purposeful, and accountable to the human stakeholders it serves, and it always remains under human control.

WRITER makes sure every agent action serves a human-defined goal by tying performance to measurable business outcomes. We make the human mandate operational by:

- Evaluating agents on business KPIs: Move beyond uptime and task completion. Measure agents against the same metrics as human teams, such as time-to-resolution, compliance hit rates, or cost-to-serve.

- Integrated governance dashboards: Continuously measure, manage, and optimize the value of every agent, guaranteeing the entire digital workforce is always aligned with creating quantifiable business results.

The CIO’s five-step playbook for enterprise-wide adoption

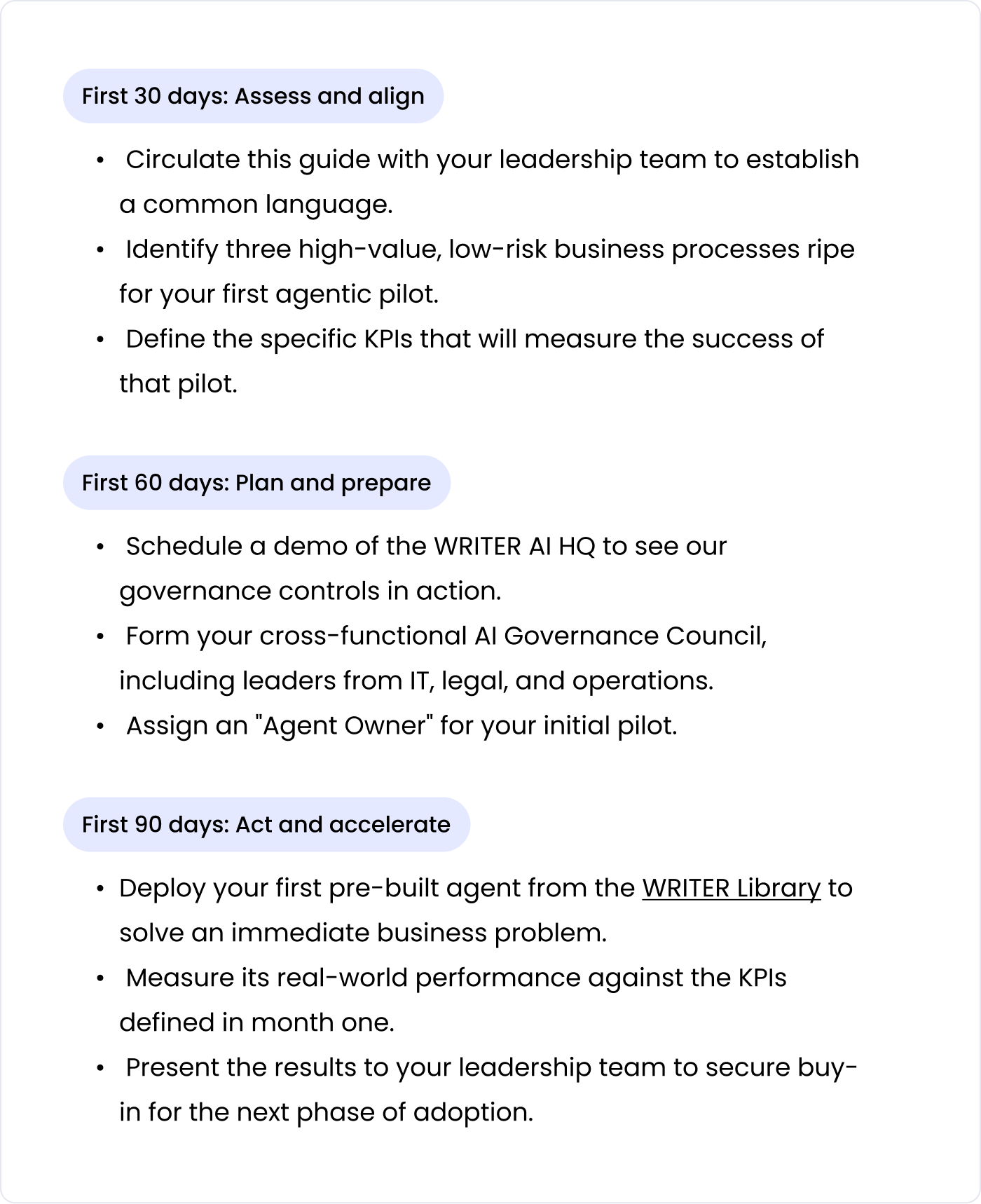

The Agentic Compact provides the principles — the “what” of safe and scalable agentic AI. But how do organizations translate these principles into a concrete, actionable plan? For CIOs and technology leaders, the journey from initial concept to a fully integrated agentic workforce requires a structured, phased approach. This playbook outlines a five-phase journey for operationalizing agentic AI governance, guaranteeing that innovation stays balanced with control at every step.

Phase 1: Define the mission

The first phase is foundational. Before building a single agent, leaders must define what success looks like. This involves identifying specific, high-value business processes that are ripe for agentic automation — tasks that are complex, repetitive, and have a clear impact on the bottom line. Success here comes down to defining clear goals and KPIs for your first pilots. You have to know what “good” looks like before you start. Just as crucial is securing stakeholder buy-in from across the organization. An agentic AI initiative is not just an IT project. It’s a business transformation initiative that requires alignment from leadership, legal, and the business units it will impact.

Phase 2: Secure early wins

With a clear strategy in place, execution begins with a phased rollout. The goal is to start small, prove value, and learn quickly. Early pilots should always incorporate strong human-in-the-loop (HITL) safeguards, where agents assist human experts or have their actions reviewed before finalization. This approach achieves two critical things at once. It minimizes risk by adding a layer of human oversight, and it builds organizational trust in the technology. As AI agents prove their reliability and value, their level of autonomy can gradually increase while the need for human intervention tapers off.
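The idea of autonomy that grows as trust is earned can be expressed as a simple review gate. The Python sketch below is one possible policy, with hypothetical names and thresholds: every action is reviewed until the agent has a track record, and high-risk actions are always reviewed.

```python
from dataclasses import dataclass


@dataclass
class AutonomyPolicy:
    """Hypothetical HITL gate: autonomy is earned through approved reviews."""
    min_reviewed: int = 20           # reviews required before any autonomy
    min_approval_rate: float = 0.95  # share of reviews that must be approved
    reviewed: int = 0
    approved: int = 0

    def record_review(self, approved: bool) -> None:
        self.reviewed += 1
        if approved:
            self.approved += 1

    @property
    def approval_rate(self) -> float:
        return self.approved / self.reviewed if self.reviewed else 0.0

    def requires_human_review(self, high_risk: bool) -> bool:
        if high_risk:
            return True  # high-risk actions never skip review
        earned = (
            self.reviewed >= self.min_reviewed
            and self.approval_rate >= self.min_approval_rate
        )
        return not earned


policy = AutonomyPolicy(min_reviewed=3)
first_check = policy.requires_human_review(high_risk=False)   # no track record yet
for _ in range(3):
    policy.record_review(approved=True)
later_check = policy.requires_human_review(high_risk=False)   # trust earned
```

The thresholds here are placeholders. In practice the governance council would set them per use case and revisit them as pilots produce data.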

Phase 3: Build the governance council

As pilots move toward production, governance can’t remain siloed within the IT department. True enterprise-scale agentic AI requires a cross-functional governance council. This body should include leaders from IT, legal, compliance, operations, and HR, as each brings a unique and critical perspective. IT manages the technical infrastructure, legal and compliance ensure regulatory adherence, operations provides insight into business process impact, and HR addresses the implications for the human workforce. This collaborative oversight ensures a holistic view of risk and opportunity.

Phase 4: Harden the technical foundation

Successful agentic AI adoption relies on a strong technical foundation. This is where the enabling capabilities become paramount. It starts with powerful, fine-tuned models like Palmyra LLMs — designed for enterprise-grade accuracy and reliability. These models must be grounded in the company’s own data and business logic, which is the role of a Knowledge Graph. This curated repository of facts, processes, and policies ensures that agents operate based on verified information, not just the open internet. Finally, all of this requires support from a modern data governance infrastructure capable of handling the real-time demands of monitoring and logging agentic systems across the entire AI lifecycle.

Phase 5: Scale the innovation engine

With a mature governance framework and a solid technical foundation, the final phase is about fostering continuous improvement and innovation. This involves creating secure, sandboxed environments where developers and business users can safely experiment with new agents and use cases without putting the core business at risk. The governance council’s role shifts from gatekeeping to enabling, setting the policies for safe experimentation and creating a feedback loop where learnings from new pilots help refine the overall governance strategy.

This playbook provides a clear path from initial strategy to enterprise-wide innovation. To navigate this journey, organizations need more than just technology — they need a partner. WRITER’s enterprise services support CIOs and their teams at every phase, providing the expertise needed to define strategy, execute pilots, and ultimately scale a safe and effective agentic workforce.

How industry leaders are scaling AI agent governance

The shift to an agentic enterprise isn’t about flipping a single switch. It’s about building momentum. The crawl, walk, run, fly framework is the playbook for this transformation, showing how many organizations evolve from tackling simple, high-volume tasks (crawl) to unlocking their institutional knowledge (walk). The real results happen when agents begin automating complex workflows across different systems (run), ultimately leading to a fully orchestrated digital workforce that can reimagine entire business processes from end to end (fly). This journey isn’t just theoretical. It’s delivering transformative results, as the following case studies from industry leaders reveal.

How Medisolv decodes healthcare reporting with generative AI

Retail reimagined: Adore Me accelerates time to market with WRITER AI Studio

A financial services giant’s unification: The run stage, driven by actionable explainability

For one of the world’s leading asset managers, a history of growth through acquisition had created a powerful but disconnected “house of brands.” With marketing operations and data trapped in silos, the firm faced a quintessential run stage challenge — how to unite its disparate parts. The answer was to deploy a suite of specialist action agents as a form of digital connective tissue. These agents are now being woven throughout the enterprise to automate cross-functional workflows, enforce brand consistency, and finally forge a single, integrated marketing strategy from a fragmented portfolio.

An ambitious transformation of this scale required buy-in at the highest levels, signaling a deep commitment to governed innovation. For a legacy organization in a highly regulated industry, the ability to build and deploy these agents hinged on a secure, compliant platform. By partnering with WRITER, the firm isn’t just adopting AI, it’s solving a decades-old structural problem. It’s a powerful demonstration of how agentic AI — guided by a strong governance framework — becomes the strategic lever for turning a collection of siloed companies into a unified marketing powerhouse.

A CPG giant’s competitive edge: From run to fly with workforce enablement

Inside a global consumer health giant, home to dozens of household-name brands, lay a vast, untapped reservoir of institutional knowledge. The company’s challenge wasn’t just creating new content. It was activating the intelligence already spread across its enterprise. Their journey quickly accelerated into a masterclass in the run stage. Pivoting from simple content automation to high-stakes knowledge work, their consumer insights team built a Knowledge Graph from nearly 100 market and retail trend documents. This “insights engine” slashed the time to produce a SWOT analysis from one week to just 1.5 days, a time savings of roughly 80% that immediately unlocked the capacity to explore new markets.

This initial success is now fueling their leap toward the fly stage. Empowered by WRITER’s no-code AI Studio, employees have become an army of agent builders, launching over 47 custom agents to tackle everything from regulatory submissions to supply chain analysis. By democratizing agent creation, the company is building an orchestrated workforce that turns buried institutional knowledge into a dynamic, enterprise-wide advantage.

The future is agentic: Leading with responsibility

The transition to an agentic enterprise is fundamentally shifting how we measure value. For generations, organizations measured strength by headcount. Today, organizations measure it based on the orchestrated value created by humans and agents working in concert. The most successful organizations will be those that seamlessly integrate this new digital workforce to amplify human creativity, accelerate core processes, and achieve unprecedented levels of productivity.

This future won’t build itself. It requires bold, visionary leadership. As leaders of technology and customer experience, CIOs and CMOs are in the driver’s seat. You’re the ones who can lead this change. The challenge — and the opportunity — is to move beyond reactive risk management and lead with proactive governance strategies that not only safeguard the enterprise but also create a framework for safe, scalable innovation. To build this foundation, we’ve detailed the complete framework in The Agentic Compact, available for download.

The path forward requires a new class of tools built for this new era. It requires a platform that understands the complexities of agentic systems from the ground up. With WRITER, enterprises get the end-to-end platform to build, activate, and supervise AI agents responsibly. From the foundational safety of the control panel to the transparency of Palmyra and the accountability provided by detailed logs and traces, WRITER provides the comprehensive capabilities needed to turn the promise of agentic AI into a reality.

Organizations looking for fast, compliant adoption can immediately explore pre-built agents in WRITER’s Agent Library and begin activating their new workforce today. To see how these controls and features come together to provide end-to-end governance, request a demo of WRITER in action.

Frequently asked questions

How is agentic AI changing the role of AI in the workplace?

Agentic AI fundamentally transforms artificial intelligence from a passive tool that answers questions into a proactive digital workforce that takes action. Unlike previous AI systems that required step-by-step human guidance, these autonomous agents can independently plan and execute complex, multi-step workflows to achieve a specific business goal.

What are the primary risks of deploying autonomous AI, and how can businesses manage them?

The primary risks of deploying autonomous AI agents stem from their core capabilities: Their autonomy can lead to unintended actions at scale, their adaptive nature can result in unpredictable behavior, and their complexity makes it difficult to maintain clear oversight and control. To manage these risks, businesses must implement a governance framework that moves beyond simply checking model inputs and outputs.

As AI becomes more autonomous, what is the evolving role of the human workforce?

As AI agents take on more complex and autonomous tasks, the role of the human workforce shifts from direct execution to strategic oversight and management. Instead of performing repetitive processes, employees become “Agent Owners” or “Program Directors,” responsible for defining the goals, behavior, and performance of their digital counterparts. This new operational model empowers subject matter experts, the people who know the business best, to build, deploy, and manage AI agents. The focus for humans moves toward higher-value work: innovation, strategy, and keeping the entire agentic workforce aligned with overarching business goals. In effect, employees become managers of a hybrid human-digital team.

What does it mean to build a “trustworthy” AI agent for an enterprise?

Building a trustworthy AI agent means creating a system that is safe, transparent, and accountable from the ground up. A trustworthy agent operates within a secure, contained environment where its permissions are strictly controlled and its actions cannot exceed predefined boundaries. Its design must be transparent, meaning the enterprise has a clear blueprint of its data sources, objectives, and decision-making logic.