What is generative AI? What’s hype and what’s real

Updated for 2025

The buzz around generative AI has evolved from a deafening roar to a strategic hum in boardrooms worldwide. In 2025, the conversation has shifted from “what is generative AI?” to “how are we deploying generative AI agents to drive measurable business results?” With enterprise adoption jumping from 55% in 2023 to 78% in 2025, and a projected market size of over $66 billion in 2025, the era of experimentation is over. The age of scaled, strategic implementation is here.

But while the technology has matured dramatically, the path to successful implementation is littered with unrealistic expectations and organizational friction that many leaders underestimate.

This article cuts through the noise, providing a clear-eyed view of the current landscape. We’ll explore how generative AI systems are being integrated into core business functions and how you can speak to its value with authority.

- The buzz around generative AI has evolved from initial hype to strategic adoption, with 78% of enterprises using it in 2025 and a market size projected over $66 billion.

- Generative AI creates new content like text, images, code, and synthetic data, while agentic AI manages workflows and makes autonomous decisions. They often work together to enhance business processes.

- Common misconceptions from early hype include viewing AI as a magic bullet, assuming one-size-fits-all solutions, and expecting plug-and-play ROI. These misconceptions have led to organizational friction and AI fatigue.

- Successful generative AI adoption involves clear planning, stakeholder involvement, specialized enterprise solutions, and integrated workflows.

- Despite challenges, generative AI is delivering measurable business results, with 97% of executives and 88% of employees reporting benefits in productivity, decision-making, and work satisfaction.

Table of contents

- Quick definitions: Generative AI, large language models, and foundation models

- Understanding the difference between generative AI and agentic AI

- What’s hype

- Addressing real implementation challenges

- What’s real

- Survey the landscape of business-ready generative AI

- Bonus glossary: AI terminology

Quick definitions: Generative AI, large language models, and foundation models

To lead effectively in today’s AI-driven world, a solid grasp of generative AI is a must. Here are the core concepts that matter most for business leaders.

Generative AI is artificial intelligence that uses natural language processing (NLP) and machine learning models to create new content that mimics human-generated output. Unlike traditional AI systems that simply recognize and classify data, generative AI models create something new:

- Text and written content

- Images and visual assets

- Software code

- Audio and video content

- Synthetic data for training other AI models

In business contexts, generative AI solutions function like sophisticated content creation partners. These AI systems can handle repetitive content tasks, generate first drafts, and provide creative starting points that your teams can refine and customize.

Large language models (LLMs) are the foundational engine behind most modern generative AI tools that deal with natural language. These are massive deep learning models, trained on vast quantities of text and code, that excel at understanding and generating human-like language.

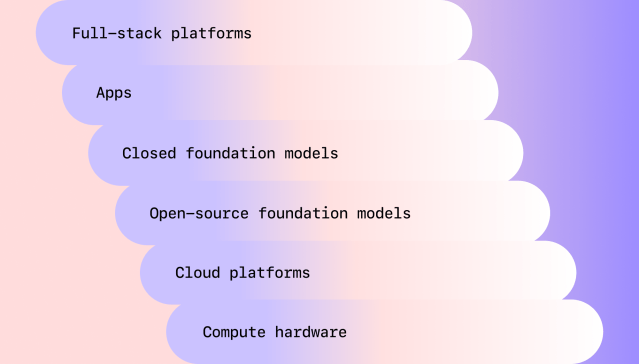

The term “foundation model” represents a broader concept. These are large-scale machine learning models trained on immense, diverse datasets that can be adapted, often through a process called fine-tuning, to a wide range of downstream tasks. An LLM is a type of foundation model, but foundation models can also be trained on images, audio, and other data types.

For enterprise use, the source and quality of training data significantly affect output accuracy, the ways you can use that output, and business risk. If generative AI systems are trained on biased, toxic, or copyrighted materials, businesses risk publishing inappropriate content. Similarly, if generative adversarial networks or other AI models incorporate sensitive data from user inputs into their training corpus, organizations may face privacy violations or intellectual property concerns.

That’s why enterprise-grade models are built differently. For example, Palmyra LLMs power the WRITER enterprise platform for AI-powered work. With a curated, foundational training set of business writing and marketing data, Palmyra LLMs are geared towards use cases across a company, from marketing and sales to operations and product.

Understanding the difference between generative AI and agentic AI

You’ve probably heard “generative AI” and “agentic AI” thrown around, maybe even in the same sentence. It’s easy to get them mixed up, but they play two very different — and equally important — roles in the business world.

Think of it this way — one is the creator, and the other is the doer.

Generative AI is the creator. These tools focus on creation. Generative AI excels at producing new text, images, code, or other media based on prompts and training data. Most generative AI models require human feedback, prompting, and oversight for each task.

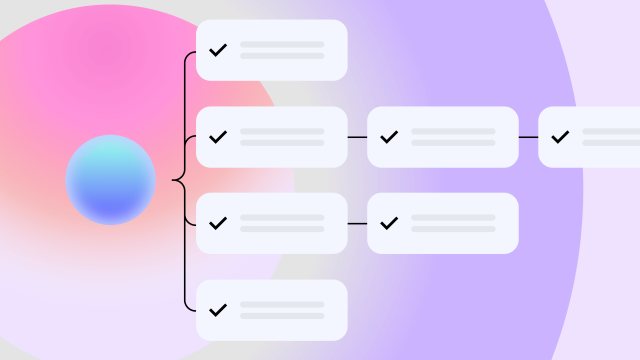

Agentic AI is the doer. These AI systems go beyond content creation to autonomous decision-making and action-taking. AI agents can perceive their environment, set goals, make decisions, and execute tasks with minimal human intervention. Agents can manage an entire multi-step workflow, like processing an invoice from start to finish or handling a customer support ticket, without needing you to guide them every step of the way.

The real magic, of course, is when they work together. An AI agent (the doer) can manage the entire process of onboarding a new customer. As part of that workflow, it might use generative AI (the creator) to draft a personalized welcome email.

So, while generative AI provides the creative spark, agentic AI provides the horsepower to automate complex business processes from end to end.
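The creator/doer split in the onboarding example can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: every name here (`Customer`, `draft_welcome_email`, `run_onboarding_agent`) is hypothetical, the generative step is a template stub where a real system would call an LLM, and the agent follows a fixed list of steps where a real agent would decide its next action dynamically.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Customer:
    name: str
    plan: str
    log: List[str] = field(default_factory=list)  # record of completed steps


def draft_welcome_email(customer: Customer) -> str:
    """Stand-in for the generative step (the creator).

    Assumption: a real system would send a prompt to an LLM here. This stub
    fills a template so the example runs without any external service.
    """
    return f"Hi {customer.name}, welcome to the {customer.plan} plan!"


def create_account(customer: Customer) -> None:
    customer.log.append("account_created")


def send_welcome_email(customer: Customer) -> None:
    # The workflow step delegates the writing to the generative function.
    email = draft_welcome_email(customer)
    customer.log.append(f"email_sent: {email}")


def schedule_kickoff(customer: Customer) -> None:
    customer.log.append("kickoff_scheduled")


def run_onboarding_agent(customer: Customer) -> List[str]:
    """Stand-in for the agent (the doer): runs each step in order."""
    steps: List[Callable[[Customer], None]] = [
        create_account,
        send_welcome_email,
        schedule_kickoff,
    ]
    for step in steps:
        step(customer)
    return customer.log


log = run_onboarding_agent(Customer(name="Ada", plan="Enterprise"))
for entry in log:
    print(entry)
```

The point of the sketch is the division of labor: the agent owns the sequence of steps, and content generation is one call inside that sequence.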

What’s hype

Remember the gold rush feeling around generative AI back in 2022? Fast forward to today, and the reality of using it in business is a lot more complicated. The early buzz was all about chatbot agents, but the real story is what’s happening inside companies today — and it’s not always a smooth ride.

The early hype cycle created several persistent misconceptions that continue to derail AI initiatives today:

- AI agents as magic bullets: The promise that dropping an AI tool into any workflow would instantly optimize everything led many organizations to deploy solutions without proper process mapping or change management. The result? AI technology that works against existing workflows rather than enhancing them.

- One-size-fits-all solutions: Generic generative AI tools were marketed as universal solutions, but they often lack the specialized training and fine-tuning needed for specific enterprise use cases. A customer service chatbot trained on general internet data won’t understand your company’s specific products, policies, or voice.

- Plug-and-play ROI: The idea that AI will deliver instant returns without significant investment in strategic planning, infrastructure, change management, and workforce training is a common myth. In reality, successful AI adoption depends on sustained investment in each of these areas.

According to our 2025 enterprise adoption report, this hype-driven approach has real consequences. Nearly two-thirds of C-suite executives say generative AI adoption has sparked division within their organizations, with 42% stating that it’s causing significant disruption. This reflects the reality that many organizations jumped into AI initiatives without a proper strategy or realistic expectations.

The disconnect between hype and reality has created what many IT leaders call “AI fatigue” — where teams become skeptical of any AI initiative because previous implementations failed to deliver on inflated promises.

Addressing real implementation challenges

Moving beyond the hype requires acknowledging the genuine obstacles that organizations face when deploying generative AI at scale. Understanding these challenges upfront allows for better planning and more realistic expectations.

Organizational and cultural hurdles

Unlike traditional software rollouts, AI implementation often changes how people think about their work, not just what tools they use. Sales teams may resist AI-generated content because they’re proud of their communication skills, while legal teams may be hesitant to use AI for document review due to liability concerns.

Most employees need training not just on how to use AI tools, but on how to prompt them effectively, interpret their outputs, and integrate them into existing workflows. People managers and strategic leaders who develop an “agentic mindset” will thrive. This mindset is the ability to recognize and experiment with AI use cases, and it can help teams scale their best work and business results.

But this requires more than just individual skill-building. Organizations also need clear policies around AI decision-making authority, human oversight requirements, and error handling procedures, because when an AI agent makes a mistake, questions of responsibility and accountability become complex.

Technical and operational challenges

Generative AI is only as good as the data it can access, and many organizations discover their data is siloed, inconsistent, or outdated only after beginning implementation. Connecting AI tools to internal knowledge and existing business systems often reveals technical debt and integration complexity that wasn’t apparent before. Unlike traditional software, AI systems need constant monitoring that goes beyond just checking if they’re running. This monitoring needs to track accuracy, bias, and business impact — areas where many teams lack the right measurement tools.
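As a rough illustration of monitoring that goes beyond uptime, the sketch below computes accuracy per team from human-reviewed samples and flags teams that fall below a threshold. The review records, the `accuracy_by_segment` and `flag_segments` helpers, and the 0.5 threshold are all made up for this example. A large gap between segments is only a crude signal of uneven quality, not a full bias audit.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical human-review records: (team, was the AI output judged correct?)
reviews: List[Tuple[str, bool]] = [
    ("sales", True),
    ("sales", True),
    ("sales", False),
    ("legal", True),
    ("legal", False),
    ("legal", False),
]


def accuracy_by_segment(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of correct outputs for each segment."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for segment, is_correct in records:
        totals[segment] += 1
        correct[segment] += int(is_correct)
    return {segment: correct[segment] / totals[segment] for segment in totals}


def flag_segments(scores: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """List segments whose accuracy is below the (assumed) threshold."""
    return sorted(s for s, accuracy in scores.items() if accuracy < threshold)


scores = accuracy_by_segment(reviews)
flagged = flag_segments(scores)
print(scores)
print("Needs attention:", flagged)
```

In practice the same pattern would extend to business-impact metrics, with thresholds agreed on before deployment rather than chosen after the fact.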

Security and compliance obstacles

When AI systems process sensitive data, organizations must ensure compliance with regulations like GDPR and industry-specific requirements, often requiring new data handling processes and audit capabilities. As AI models are updated and fine-tuned, organizations need governance frameworks to track changes and manage version control.

Resource allocation and ROI measurement

AI implementation costs extend far beyond software licensing to include additional infrastructure, specialized personnel, ongoing training, and process redesign. Determining whether AI initiatives deliver value requires new measurement frameworks, since traditional ROI calculations may not capture the full impact on productivity, customer satisfaction, or innovation capacity. Organizations also often underestimate the infrastructure and governance requirements needed to scale AI initiatives from pilot projects to enterprise-wide deployment.

The companies successfully navigating these challenges share common characteristics. They invest time in upfront planning, involve stakeholders in the design process, start with clearly defined use cases, and build measurement frameworks before implementation begins.

What’s real

Despite the implementation challenges, generative AI has moved well beyond proof-of-concept stage into practical business implementation that’s delivering measurable results. The organizations succeeding with AI are those that combine strategic investment with a measured approach to deployment.

Mature enterprise adoption with real results

With the right approach, the transformation in enterprise AI adoption has been remarkable. 79% of senior executives say AI agents are already being adopted in their companies, and 88% say their team plans to increase AI-related budgets in the next year. But more importantly, these aren’t just experimental deployments — they’re integrated into core business operations.

Organizations are seeing real results from generative AI work, but success correlates directly with investment level and strategic approach. Our generative AI adoption in the enterprise report finds a 40 percentage-point gap in success rates between companies that invest the most in AI and those that invest the least. The companies seeing the strongest ROI are the ones treating AI as a strategic initiative rather than a tactical tool.

Specialized enterprise solutions replacing generic tools

Business-ready generative AI platforms now offer enterprise-grade security, compliance features, and domain-specific training. These aren’t general-purpose customer service chatbots, but purpose-built AI systems and LLMs designed for specific business functions. For example, WRITER offers highly specialized models, such as Palmyra Med for healthcare and Palmyra Fin for financial services, which are trained on curated, high-quality domain data.

AI agent orchestration and workflow integration

The most significant development has been the evolution from standalone AI tools to coordinated AI agent workflows. Agentic workflows are designed to connect multiple specialized AI agents to facilitate complex business processes and handle multilingual and multimedia data. Instead of employees switching between multiple AI tools, they interact with integrated agent workflows that handle complex, multi-step business processes from start to finish.

Measurable business impact and growing optimism

The data on AI satisfaction tells a compelling story. Our AI adoption report finds that generative AI is benefiting 97% of executives and 88% of employees. Optimism around AI is at an all-time high, driven by tangible improvements in productivity, decision-making speed, and work satisfaction.

Survey the landscape of business-ready generative AI

In 2025, the question isn’t if you should invest in generative AI, but how to integrate it strategically to solve specific business challenges. A thoughtful and purposeful approach is critical. Before evaluating vendors, your organization must define clear requirements and expectations, focusing on ROI, security, and scalability.

When you assess different generative AI solutions, consider what truly makes a platform trustworthy and enterprise-grade:

- Advanced security and data privacy

- Integration with existing AI systems

- Customization and brand control

- Compliance and regulatory adherence

Solutions like WRITER are designed from the ground up to address these critical business needs, providing a secure and cohesive environment for generative AI adoption.

Bonus glossary: AI terminology

Here’s a quick, simple glossary of key terms you’ll need to know when it comes to AI tools.

Artificial Intelligence (AI)

A general term for the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.

AI Agent

An autonomous system that uses AI, particularly generative AI models, to perceive its environment, make decisions, and perform complex tasks to achieve specific goals. In an enterprise context, agents are specialized tools for business workflows.

Machine learning (ML)

A sub-type of AI where machines don’t need to be explicitly programmed. They use algorithms to identify and learn patterns in data, apply that learning, and improve themselves to make better and better decisions.

Deep learning models

A subset of machine learning using neural networks with many layers (deep neural networks) to learn complex patterns from large datasets. The engine behind foundation models.

Diffusion model

A type of generative model that creates high-quality images by starting with random noise and progressively refining it into a coherent picture, based on text prompts.

Foundation model

A large-scale machine learning model trained on a vast quantity of broad data, designed to be adapted or fine-tuned for a wide range of downstream tasks.

Generative adversarial network (GAN)

An AI architecture using two competing neural networks — a “generator” and a “discriminator” — to create realistic, synthetic data, often images.

Large language model (LLM)

A model trained on a massive quantity of text data for performing natural language tasks, like answering questions, generating text, summarizing documents, and more. For example, Palmyra Large from WRITER is an LLM that’s trained on about 20TB of data.

Proprietary data

A company’s own unique data, such as internal documents, customer lists, and operational metrics. It’s a key asset for fine-tuning AI models for a specific business context.

Synthetic data

Artificially generated data that mimics the statistical properties of real-world data. Used for training AI models without exposing sensitive information, or for creating training data that’s otherwise scarce.

Retrieval-augmented generation (RAG)

A technique that improves the accuracy of LLMs by connecting them to an external, authoritative knowledge base. The model “retrieves” relevant information first, then uses it to “generate” a more factual and context-aware response.
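The retrieve-then-generate flow can be sketched as follows. This is a toy example under stated assumptions: the knowledge base is invented, retrieval uses simple word overlap where production systems typically use vector or hybrid search, and the final LLM call is left out, so the sketch stops at the prompt a model would receive. The helpers `tokenize`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import re
from typing import List, Set

# Hypothetical internal knowledge base (made-up policy snippets).
KNOWLEDGE_BASE: List[str] = [
    "Refunds are available within 30 days of purchase.",
    "Support is open Monday to Friday, 9am to 5pm.",
    "Enterprise plans include single sign-on.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, context: List[str]) -> str:
    """Combine retrieved passages and the question into one prompt."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


question = "How many days do I have to request refunds?"
context = retrieve(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, context)
print(prompt)  # In a real system, this prompt would be sent to an LLM.
```

Grounding the prompt in retrieved text is what lets the model answer from the company's own policies instead of from general training data.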

Natural Language Processing (NLP)

A method of teaching computers to understand the way humans use language. It’s NLP that allows Google to make sense of what you type into the search bar.

Natural language understanding (NLU)

A branch of NLP where computers figure out what human-generated text means.

Natural language generation (NLG)

A branch of NLP where computers generate human-like text.

Frequently asked questions

How is agentic AI different from generative AI?

Agentic AI is a type of AI that can perceive its environment, make decisions, and take actions with minimal human intervention. Generative AI can create new content based on patterns and data it has learned from. The key difference between the two lies in their level of autonomy and decision-making capabilities.

What are some of the most common use cases for generative AI in the enterprise?

Generative AI has a wide range of use cases in the enterprise, from content creation and customer service to predictive maintenance and supply chain optimization. To identify new opportunities for adoption, organizations can conduct a thorough needs assessment, engage with stakeholders, and explore emerging trends and technologies.

What are some of the potential risks and challenges associated with using generative AI in regulated industries?

Generative AI can bring significant benefits to regulated industries, such as healthcare and finance, but it also poses unique risks and challenges. Organizations must ensure that their generative AI models are designed and trained with regulatory compliance in mind, and that they have controls in place to detect and prevent any potential data breaches or other security incidents.

Does generative AI replace human workers in the enterprise?

No, generative AI is designed to augment human capabilities, not replace them. While AI can automate certain tasks, it lacks the creativity, empathy, and critical thinking skills that humans bring to the table. Generative AI is best used as a tool to assist humans in their work, freeing them up to focus on higher-value tasks that require human judgment and creativity.